Lesson 7: Chi-square Tests and ANOVA

Overview

In this lesson, you will learn two more methods of statistical inference: chi-square tests and ANOVA tests. These tests allow you to compare multiple groups within a dataset, but they differ in the type of data they use: chi-square tests work with counts of a categorical variable, while ANOVA compares means of a quantitative variable. Additionally, you will learn how to write hypotheses for these tests and use p-values to draw conclusions.

Learning Outcomes

By the end of this lesson, you should be able to:

- identify when to use Chi-square tests
- employ Chi-square tests in Python
- recognize the role of ANOVA in experimental design
- interpret the output of an ANOVA test in Python

Lesson Roadmap

| Type | Assignment | Location |
|---|---|---|
| To Read | Lock et al. 7.1 (7.2 Bonus), 8.1 (8.2 Bonus), and 9.1 | Textbook |
| To Do | Complete Homework: H09: Chi-square ANOVA; Take Quiz 7 | Canvas |

Questions?

If you prefer to use email:

If you have any questions, please send a message through Canvas. We will check daily to respond. If your question is one that is relevant to the entire class, we may respond to the entire class rather than individually.

If you prefer to use the discussion forums:

If you have questions, please feel free to post them to the General Questions and Discussion forum in Canvas. While you are there, feel free to post your own responses if you, too, are able to help a classmate.

Introduction to Chi-Square Tests

Read It: Chi-Square Tests

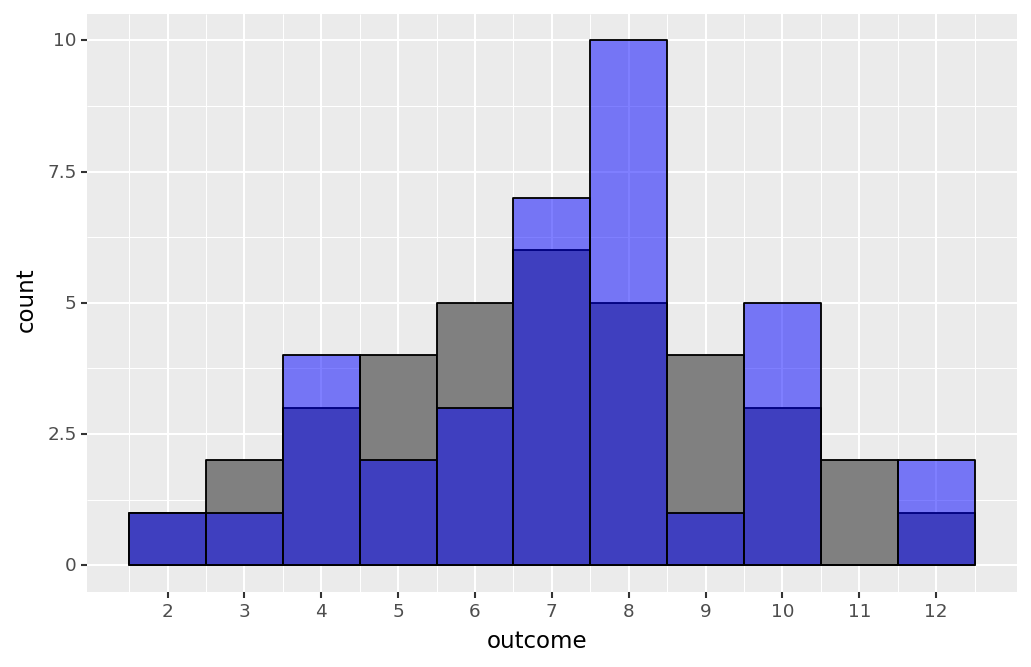

Imagine you have some data that is separated into categories. You are interested in determining whether the frequencies (counts) in each category of your sample match those expected under a hypothesized population distribution. To answer this question, you can conduct a chi-square test! Here, "chi" is the Greek letter χ, which is pronounced "kai". For example, in the figure, we show two histograms: the gray histogram shows the theoretical distribution of the sum of two dice, while the blue histogram shows a sample of rolls. We could make some qualitative statements that compare the two plots. For instance, our sample includes more rolls of eight than the theoretical distribution predicts, but the same number of rolls of two. Often, however, we want a more rigorous comparison than visual estimation. For this, we can conduct a chi-square test to assess whether the counts of the possible outcomes in the sample differ significantly from the counts we would expect under the theoretical (population) distribution.

To conduct a chi-square test, we first need to state the hypotheses we will be testing. These hypotheses look slightly different from those you learned in Lesson 5. As discussed in Lesson 5, the null hypothesis always uses equals signs. However, for a chi-square test, the null hypothesis specifies a proportion for every category (one equation per category) rather than a value for a single parameter. Further, the alternative hypothesis is often written entirely in words (i.e., with no mathematical symbols); generally, you can write something along the lines of "at least one proportion above is not as specified". An example of applying these hypotheses to the dice roll example is shown below.

Example of Hypotheses for Chi-Square Tests
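As an illustration, here is what these hypotheses could look like for the sum of two dice. This is a sketch under the assumption that the dice are fair and that the categories are the eleven possible sums, 2 through 12; the proportions come from counting the 36 equally likely outcomes (for example, a sum of 7 arises from 6 of them), not from the original figure.

```latex
\begin{aligned}
H_0:\;& p_2 = \tfrac{1}{36},\; p_3 = \tfrac{2}{36},\; p_4 = \tfrac{3}{36},\; p_5 = \tfrac{4}{36},\; p_6 = \tfrac{5}{36},\; p_7 = \tfrac{6}{36},\\
      & p_8 = \tfrac{5}{36},\; p_9 = \tfrac{4}{36},\; p_{10} = \tfrac{3}{36},\; p_{11} = \tfrac{2}{36},\; p_{12} = \tfrac{1}{36}\\
H_a:\;& \text{at least one } p_i \text{ is not as specified}
\end{aligned}
```

With 11 categories, this test would have $11 - 1 = 10$ degrees of freedom.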

To conduct a chi-square test, you need to implement the following steps:

- Define the hypotheses

- Compute the expected count for each category: $E_i = n \cdot p_i$, where $n$ is the number of observations and $p_i$ is the hypothesized population proportion for category $i$

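- Compute the sample chi-square statistic: $\chi^2 = \sum \frac{(\text{Observed} - \text{Expected})^2}{\text{Expected}}$, summed over all categories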

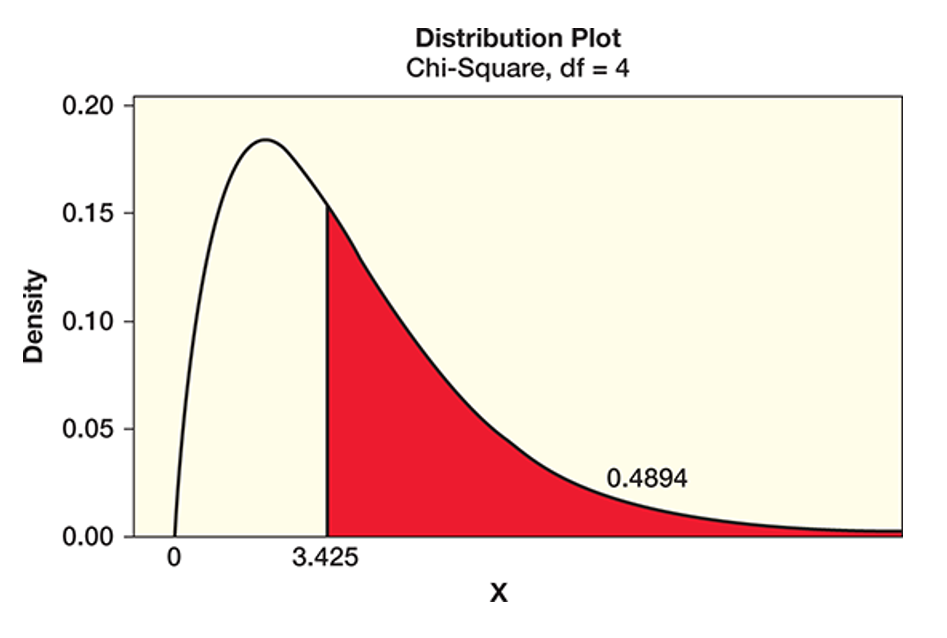

- Find the p-value based on the idealized chi-square distribution, shown in the figure below

- Make a conclusion based on the p-value
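The five steps above can be sketched in Python with NumPy and `scipy.stats`. As an illustration, this uses the AP multiple-choice counts from Table 7.1 later in this lesson and assumes, for the null hypothesis, that each answer A through E is equally likely ($p_i = 0.2$); the variable names are our own.

```python
import numpy as np
import scipy.stats as stats

# Step 1: hypotheses. H0: p_A = p_B = p_C = p_D = p_E = 0.2
#         Ha: at least one p_i is not as specified
observed = np.array([85, 90, 79, 78, 68])   # counts from Table 7.1 (A to E)
p_null = np.full(5, 0.2)                     # hypothesized proportions

# Step 2: expected counts, E_i = n * p_i
n = observed.sum()
expected = n * p_null

# Step 3: chi-square statistic, the sum of (Observed - Expected)^2 / Expected
chi_sq = np.sum((observed - expected) ** 2 / expected)

# Step 4: p-value = area to the right of chi_sq under the chi-square curve with k - 1 df
df = len(observed) - 1
p_value = stats.chi2.sf(chi_sq, df)

# Step 5: conclusion at the 0.05 significance level
alpha = 0.05
decision = "reject H0" if p_value < alpha else "do not reject H0"
print(f"chi-square = {chi_sq:.3f}, df = {df}, p-value = {p_value:.3f}: {decision}")
```

With these counts the statistic is 3.425 on 4 degrees of freedom and the p-value is about 0.49, so at the 0.05 level we would not reject the null hypothesis that all five answers are equally likely.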

The chi-square distribution is used to find the p-value and draw a conclusion. The chi-square distribution is interesting because it takes only non-negative values and is controlled by a single parameter, the "degrees of freedom", which we discussed in Lesson 6. Below, we show the distribution with 4 degrees of freedom. To calculate the p-value, we locate the sample statistic on the horizontal axis and calculate the area under the curve to the right of it (this area is filled with red in the plot below). Generally, we will calculate the degrees of freedom as $df = k - 1$, where $k$ is the number of categories in our dataset.
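To make the "area to the right" idea concrete, the sketch below queries `scipy.stats.chi2` for the 4-degree-of-freedom distribution described above. The statistic 9.488 is an arbitrary illustrative value, chosen because it sits near the 5% right-tail cutoff for this distribution.

```python
import scipy.stats as stats

k = 5          # number of categories (illustrative)
df = k - 1     # degrees of freedom for a one-way chi-square test

# The chi-square density is zero for negative values: statistics are never negative
density_at_negative = stats.chi2.pdf(-1.0, df)

# The p-value is the right-tail area; sf(x) is the same as 1 - cdf(x)
x = 9.488                                   # illustrative test statistic
right_tail = stats.chi2.sf(x, df)
right_tail_via_cdf = 1 - stats.chi2.cdf(x, df)

# The statistic value that leaves exactly 5% of the area in the right tail
critical = stats.chi2.ppf(0.95, df)

print(density_at_negative, right_tail, right_tail_via_cdf, critical)
```

Using `sf` rather than `1 - cdf` is a small design choice: both give the same area, but `sf` stays accurate when the tail area is extremely small.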

Assess It: Check Your Knowledge

Knowledge Check

Chi-Square Test: Randomization Procedure

Read It: Randomization Procedure

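Often, our sample will not be large enough for the chi-square distribution to give an accurate p-value using the steps laid out in the previous discussion (a common rule of thumb is that every expected count should be at least 5). In these cases, we can use a randomization procedure instead: we simulate many samples of the same size under the null hypothesis, compute the chi-square statistic for each one, and compare our sample statistic to this randomization distribution. Below, we will demonstrate this process with a deck of cards. Our hypotheses are:

$H_0: p_{\text{Diamonds}} = p_{\text{Hearts}} = p_{\text{Clubs}} = p_{\text{Spades}} = 0.25$

$H_a$: At least one $p_i$ is not as specified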

We will start with some data collection in the first video. Then, in the second video, we will demonstrate the randomization procedure. Finally, the third video will show the p-value calculation.
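A minimal NumPy sketch of the randomization procedure follows. It assumes hypothetical suit counts from 20 draws and assumes each draw is independent (drawing with replacement), so each simulated sample under the null hypothesis is a single multinomial draw with probability 0.25 per suit. This is one reasonable way to simulate under $H_0$; the procedure in the videos may differ in its details.

```python
import numpy as np

rng = np.random.default_rng(seed=7)

# Hypothetical observed suit counts from 20 draws (Diamonds, Hearts, Clubs, Spades)
observed = np.array([8, 3, 5, 4])
n = observed.sum()
p_null = np.array([0.25, 0.25, 0.25, 0.25])   # proportions under H0
expected = n * p_null


def chi_square_stat(counts, expected):
    """Sum of (observed - expected)^2 / expected over the categories."""
    return np.sum((counts - expected) ** 2 / expected)


observed_stat = chi_square_stat(observed, expected)

# Simulate many samples of size n under H0 and compute the statistic for each
n_sims = 10_000
sim_stats = np.array([
    chi_square_stat(rng.multinomial(n, p_null), expected)
    for _ in range(n_sims)
])

# p-value: proportion of simulated statistics at least as large as the sample statistic
# (a tiny tolerance keeps exact ties from being lost to floating-point rounding)
p_value = np.mean(sim_stats >= observed_stat - 1e-9)
print('sample chi-square:', observed_stat)
print('randomization p-value:', p_value)
```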

Watch It: Video - Data Collection (4:59 minutes)

Watch It: Video - Randomization (8:40 minutes)

Watch It: Video - P-Value (2:39 minutes)

Try It: DataCamp - Apply Your Coding Skills

Use the card drawing simulator linked here [2] to draw cards for your own sample. Then, apply the randomization procedure for the chi-square test.

Assess It: Check Your Knowledge

Knowledge Check

Chi-Square Test: Visualization

Read It: Visualizing Chi-Square Distributions

When creating visualizations for chi-square tests, you need to provide three key pieces of information: (1) the randomization distribution of chi-square statistics, (2) the sample chi-square statistic, and (3) the idealized chi-square distribution. In the video below, we walk you through adding each of these parts to a single ggplot graph.

Watch It: Video - Visualization (7:06 minutes)

Try It: GOOGLE COLAB

- Click the Google Colab file used in the video here [3].

- Go to the Colab file and click "File" then "Save a copy in Drive"; this will create a new Colab file that you can edit in your own Google Drive account.

- Once you have it saved in your Drive, try to edit the following code to create a visualization of the data you collected in the previous DataCamp exercise.

Note: You must be logged into your PSU Google Workspace in order to access the file.

```python
import pandas as pd
from plotnine import ggplot, aes, geom_histogram, geom_vline, geom_line

# Copy your data from the previous DataCamp exercise
table = pd.DataFrame({'Suit' : ['Diamonds', 'Hearts', 'Clubs', 'Spades'],
                      'Count' : [0, 0, 0, 0]})

# Rerun randomization procedure code

# Create Visualization (fill in each ... placeholder)
deg_f = ...
chisq_df['x_pdf'] = ...
chisq_df['y_pdf'] = ...
(ggplot(chisq_df)
 + geom_histogram(...)
 + geom_vline(...)
 + geom_line(...))
```

Once you have implemented this code on your own, come back to this page to test your knowledge.

Assess It: Check Your Knowledge

Knowledge Check

Chi-Square Test: One Line Test

Read It: Chi-Square One Line Test

Similar to the one-line hypothesis tests discussed in Lesson 6, there is also a one-line test for chi-square tests. This test uses the stats.chisquare command in the scipy.stats library; the documentation for the command can be found here [4]. For this test to work, you need to provide f_obs (the observed counts) and, unless every category is equally likely, f_exp (the expected counts). Below, we demonstrate how to implement this code.
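As a sketch of the one-line test, the example below reuses the AP multiple-choice counts from Table 7.1 later in this lesson, assuming a null hypothesis in which each of the five answers is equally likely. The result object exposes the test statistic and p-value as `statistic` and `pvalue`.

```python
import scipy.stats as stats

observed = [85, 90, 79, 78, 68]      # Table 7.1 counts for answers A to E
n = sum(observed)                    # 400 questions in total
expected = [n * 0.2] * 5             # 80 expected per answer under H0

results = stats.chisquare(f_obs=observed, f_exp=expected)
print('chi-square statistic:', results.statistic)
print('p-value: ', results.pvalue)
```

Because the expected counts here are all equal, omitting `f_exp` would give the same result; supplying it explicitly makes the null hypothesis visible in the code. Note that `scipy.stats.chisquare` expects the observed and expected totals to agree.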

Watch It: Video - Chi-Square One-Line Test (2:46 minutes)

Try It: GOOGLE COLAB

- Click the Google Colab file used in the video here [5].

- Go to the Colab file and click "File" then "Save a copy in Drive"; this will create a new Colab file that you can edit in your own Google Drive account.

- Once you have it saved in your Drive, try to edit the following code to implement the one-line test using the card data you collected earlier in the lesson:

Note: You must be logged into your PSU Google Workspace in order to access the file.

```python
import pandas as pd
import scipy.stats as stats

# Copy your data from the previous DataCamp exercise
table = pd.DataFrame({'Suit' : ['Diamonds', 'Hearts', 'Clubs', 'Spades'],
                      'Count' : [0, 0, 0, 0]})

# find expected counts
n = ...
p = ...
count_exp = ...

# implement one-line code
results = stats.chisquare(f_obs = ..., f_exp = ...)
print('p-value: ', ...)
```

Once you have implemented this code on your own, come back to this page to test your knowledge.

Assess It: Check Your Knowledge

Knowledge Check

Another Example of Chi-Square Tests

Read It: Another Example of a Chi-Square Test

So far in this lesson, we have been working with an example where all the expected proportions were equal. However, the proportions we work with are often not expected to be equal. This situation increases the complexity of our chi-square test, since we need to make sure that the expected counts array lists the categories in the same order as the observed counts. Below, we demonstrate how to implement a chi-square test with unequal proportions.
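The ordering pitfall can be sketched with pandas. Everything here is hypothetical: the 50 students' majors and the population proportions are illustrative values, not data from the video. `value_counts()` sorts categories by frequency, so the observed counts are reindexed to match the order of the proportions before both are passed to `stats.chisquare`, which pairs values by position rather than by label.

```python
import pandas as pd
import scipy.stats as stats

# Hypothetical sample of 50 students' majors (illustrative data only)
majors = pd.Series(['Business'] * 22 + ['Engineering'] * 18 + ['Science'] * 10)

# Hypothetical population proportions, deliberately listed in a different order
p_pop = pd.Series({'Science': 0.30, 'Business': 0.40, 'Engineering': 0.30})

counts = majors.value_counts()                         # ordered by frequency
counts = counts.reindex(p_pop.index, fill_value=0)     # align with p_pop's order
counts_exp = counts.sum() * p_pop                      # expected counts, same order

results = stats.chisquare(f_obs=counts, f_exp=counts_exp)
print('p-value: ', results.pvalue)
```

Using `fill_value=0` keeps a category in the test even if it never appears in the sample.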

Watch It: Video - Chi-Square Test Part 2 (9:02 minutes)

Try It: GOOGLE COLAB

- Click the Google Colab file used in the video here [6].

- Go to the Colab file and click "File" then "Save a copy in Drive"; this will create a new Colab file that you can edit in your own Google Drive account.

- Once you have it saved in your Drive, try to edit the following code to conduct a one-line chi-square test with unequal groups. Remember to match the order of your expected counts to that of your observed counts!

Note: You must be logged into your PSU Google Workspace in order to access the file.

```python
# Determine the count of each major
counts = ...

# calculate expected counts
counts_exp = ...

# run the chi-square test
results = ...
print('p-value: ', ...)
```

Once you have implemented this code on your own, come back to this page to test your knowledge.

Assess It: Check Your Knowledge

Knowledge Check

Two-Way Chi-Square Tests

Read It: Two-Way Chi-Square Test

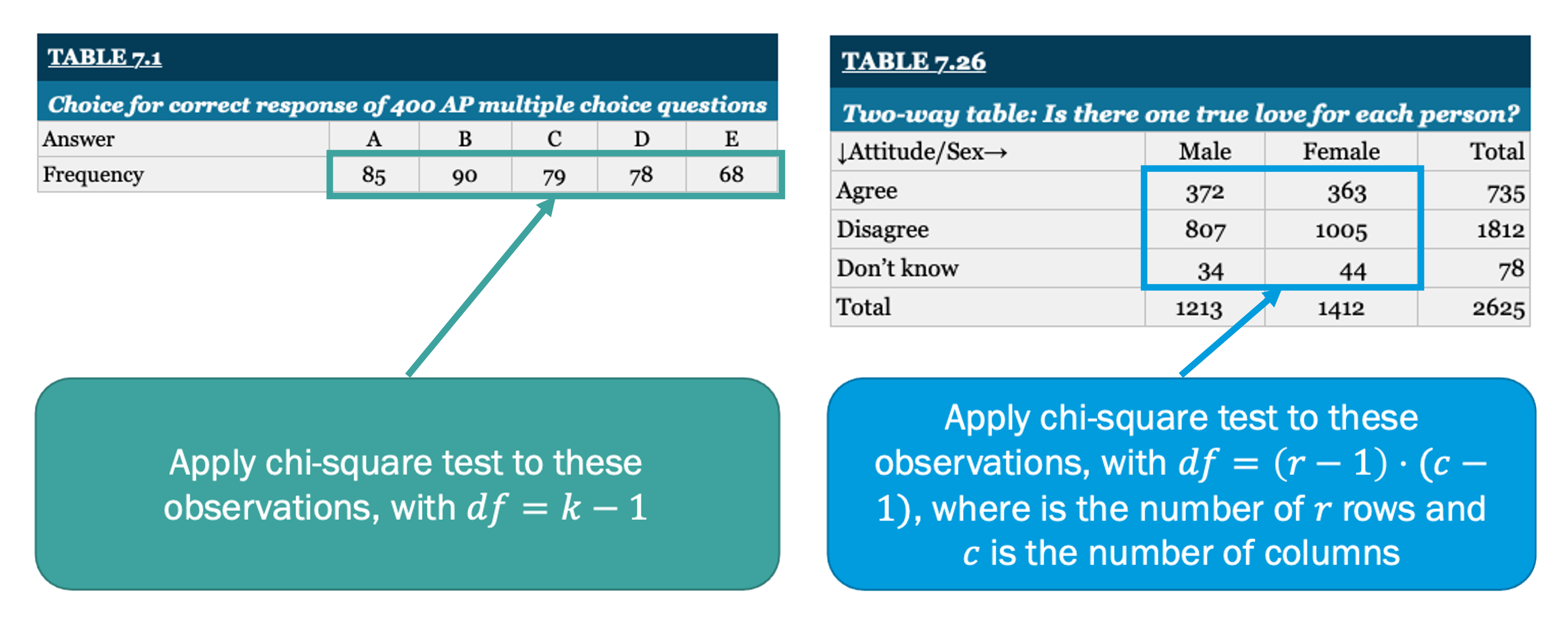

So far in this lesson, we have focused on one-way chi-square tests. These tests compare one categorical variable to known (hypothesized) proportions. There is, however, another version of the chi-square test known as the two-way test. Two-way chi-square tests compare two categorical variables, rather than one, and test whether the two variables are associated. Additionally, the degrees of freedom are calculated differently: $df = (r - 1)(c - 1)$, where $r$ is the number of rows and $c$ is the number of columns (not counting any total row or column). Below, we show two tables that might be used in chi-square tests. The first table (Table 7.1) shows a single categorical variable, for which we would conduct a one-way chi-square test. Conversely, the second table (Table 7.26) is a two-way table (i.e., there are categorical variables along both the rows and the columns), for which we would need to conduct a two-way chi-square test.

Table 7.1: Choice for correct response of 400 AP multiple choice questions

| Answer | A | B | C | D | E |
|---|---|---|---|---|---|
| Frequency | 85 | 90 | 79 | 78 | 68 |

Table 7.26: Two-way table: Is there one true love for each person?

| Attitude/Sex | Male | Female | Total |
|---|---|---|---|
| Agree | 372 | 363 | 735 |
| Disagree | 807 | 1005 | 1812 |
| Don't know | 34 | 44 | 78 |
| Total | 1213 | 1412 | 2625 |

Below, we provide a demonstration of a two-way chi-square test using the card drawing activity from earlier. In particular, we will use the stats.chi2_contingency command from the scipy.stats library. You can read more about this command here [7].
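As a separate sketch of `stats.chi2_contingency`, the example below applies it to Table 7.26 above (dropping the "Total" row and column, since the function computes the margins itself). Unpacking the result into four values works across SciPy versions; newer versions also expose named attributes such as `statistic` and `pvalue`.

```python
import numpy as np
import scipy.stats as stats

# Table 7.26 without totals
# rows: Agree, Disagree, Don't know; columns: Male, Female
observed = np.array([[372, 363],
                     [807, 1005],
                     [34, 44]])

chi2, p_value, dof, expected = stats.chi2_contingency(observed)
print('chi-square:', round(chi2, 2))
print('degrees of freedom:', dof)      # (3 - 1) * (2 - 1) = 2
print('p-value: ', round(p_value, 4))
print('expected counts:\n', expected.round(2))
```

The statistic comes out near 7.99 with 2 degrees of freedom and a p-value of about 0.018. SciPy applies a continuity correction only when there is exactly 1 degree of freedom, so it does not affect this 3 × 2 table.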

Watch It: Video - Two-Way Test (9:00 minutes)

Try It: GOOGLE COLAB

- Click the Google Colab file used in the video here [8].

- Go to the Colab file and click "File" then "Save a copy in Drive"; this will create a new Colab file that you can edit in your own Google Drive account.

- Once you have it saved in your Drive, use the card drawing simulator linked here [9] to create some data and implement a two-way chi-square test:

Note: You must be logged into your PSU Google Workspace in order to access the file.

```python
import pandas as pd
import scipy.stats as stats

# draw cards and populate the table with the number of wins and losses by suit
table2['Count'] = [0, 0,  # diamonds
                   0, 0,  # hearts
                   0, 0,  # clubs
                   0, 0]  # spades

# reorganize the table using pd.crosstab ('sum' adds up the counts in each cell)
table3 = pd.crosstab(table2['Suit'], table2['Win?'], values = table2['Count'],
                     aggfunc = 'sum', margins = False)

# conduct test
results = ...
results

# print p-value
print('p-value: ', ...)
```

Once you have implemented this code on your own, come back to this page to test your knowledge.

OPTION 2 : DATACAMP

Try It: OPTION 2 DataCamp - Apply Your Coding Skills

Dictionaries are a quick way to create a variable from scratch. However, their functionality is limited, so we will often want to convert those dictionaries into DataFrames. Try to code this conversion in the cell below. Hint: Make sure to import the Pandas library.
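One minimal way to do this conversion, sketched with hypothetical suit counts: when every dictionary value is a list of the same length, `pd.DataFrame` turns each key into a column.

```python
import pandas as pd

# Hypothetical suit counts stored in a dictionary (illustrative values)
suit_counts = {'Suit': ['Diamonds', 'Hearts', 'Clubs', 'Spades'],
               'Count': [6, 4, 5, 5]}

# Each key becomes a column; the equal-length lists become the rows
table = pd.DataFrame(suit_counts)
print(table)
```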

Assess It: Check Your Knowledge

Knowledge Check

ANOVA

One-way ANOVA

ANOVA (short for "Analysis of Variance"; more on that later) allows one to compare means across multiple groups. Here, we will just focus on one-way ANOVA, where we compare means across more than two categories of a single categorical variable. Recall that in Lesson 5 we did a comparison of means (difference of means hypothesis testing). Well, ANOVA essentially expands that comparison to more than two groups or categories so that you can efficiently see if there is a significant difference of means across many groups in just one test. Thus, it is similar to a chi-square test, except ANOVA looks at means whereas chi-square testing looks at proportions.

| Hypotheses for a One-Way Chi-square Test | Hypotheses for a One-Way ANOVA Test |
|---|---|
| $H_0$: Specifies the proportions, $p_1, p_2, \ldots, p_k$, for all categories | $H_0$: All population means, $\mu_i$, are equal for all categories |
| $H_a$: At least one $p_i$ is not as specified | $H_a$: At least one $\mu_i$ is not equal to the others |

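Another way of stating the null and alternative hypotheses for one-way ANOVA with $k$ groups is:

$H_0: \mu_1 = \mu_2 = \cdots = \mu_k$

$H_a$: $\mu_i \neq \mu_j$ for at least one pair of groups $i$ and $j$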

Since chi-square tests look at proportions, they are suited for a categorical variable, which would then be summarized by a frequency table.

On the other hand, ANOVA examines means and so is meant for a quantitative variable, which can be summarized into grouped sample means.

Analysis of Variance

The key question that ANOVA answers is: “Are the differences among the group means statistically significant?” Most likely, the sample means from each group do not agree exactly. So, how much disagreement in the sample means is needed to conclude that there is a difference in the population means?

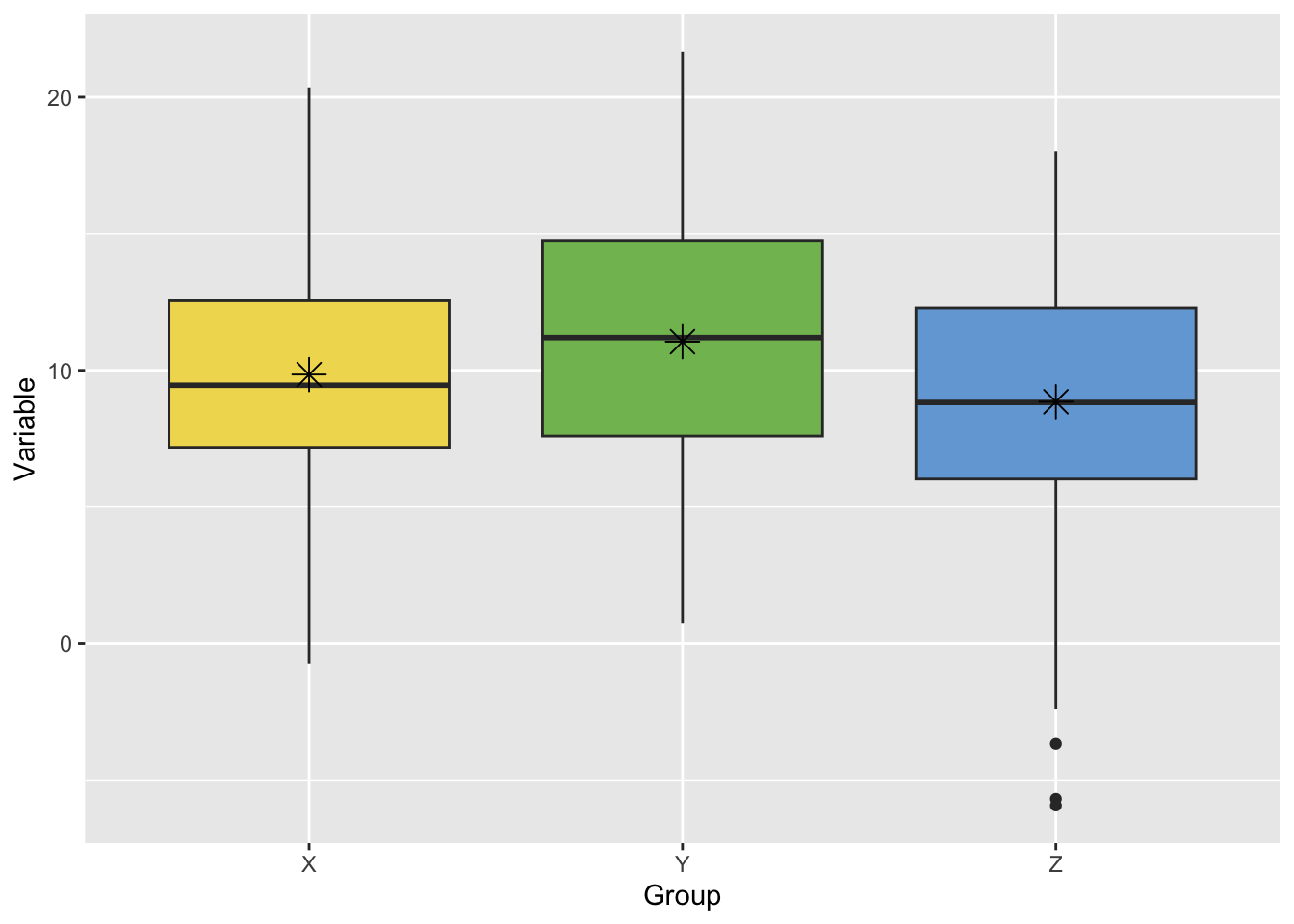

This is exemplified in the figure below, where one may be able to tell that the sample means (represented by asterisks) are different, but it’s hard to tell whether these differences matter much because the samples themselves (the boxplots) overlap so much.

To answer this, we need to consider how much the sample means could vary by random chance alone (that is, from randomly drawing the sample from the population). Therefore, we need to analyze the variance in the sampling distribution of the mean. Continuing with the example above, the figure below shows the corresponding bootstrap distributions for each sample mean (recall from Lesson 4 that the bootstrap distribution approximates the sampling distribution). We can now see that the sample means are distinct from one another. The variances of the sample means (the spread of each boxplot) aren’t so large as to overlap with each other.
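The contrast between the spread of the data and the spread of a sample mean can be sketched with a quick bootstrap. The three groups below are hypothetical normal samples (not the data in the figures); for each group, we resample with replacement many times and compare the standard deviation of the data with the standard deviation of the bootstrap means.

```python
import numpy as np

rng = np.random.default_rng(seed=3)

# Hypothetical data: three groups with similar spread and slightly different centers
groups = {
    'A': rng.normal(loc=10.0, scale=3.0, size=100),
    'B': rng.normal(loc=11.0, scale=3.0, size=100),
    'C': rng.normal(loc=12.0, scale=3.0, size=100),
}

n_boot = 2000
data_sd, boot_sd = {}, {}
for name, values in groups.items():
    # Each row is one bootstrap sample drawn with replacement; average across each row
    resamples = rng.choice(values, size=(n_boot, len(values)), replace=True)
    boot_means = resamples.mean(axis=1)
    data_sd[name] = values.std(ddof=1)
    boot_sd[name] = boot_means.std(ddof=1)
    print(f"group {name}: mean = {values.mean():.2f}, SD of data = {data_sd[name]:.2f}, "
          f"SD of bootstrap means = {boot_sd[name]:.2f}")
```

With 100 values per group, the bootstrap means vary roughly ten times less than the individual values, which is why group means can be clearly distinct even when the raw data overlap heavily.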

FAQ

The F-statistic

ANOVA breaks up the total variability of the sample values into two kinds of variability: 1.) the variability within the groups and 2.) the variability between the groups. If the variability between the groups is large compared to the variability within the groups, we can say that the groups have different means. In equation form, this idea is noted:

$SST = SSG + SSE$

The terms are defined as:

- $SST = \sum (x - \bar{x})^2$: the total sum of squared deviations. This takes the distance of each data point from the mean of all data, $\bar{x}$, squares that distance (so they’re all positive), and adds them all up. Thus, it measures the TOTAL VARIABILITY of the data.

- $SSG = \sum_{i=1}^{k} n_i (\bar{x}_i - \bar{x})^2$: the sum of squared deviations for groups. This takes the distance of each group’s mean, $\bar{x}_i$, from the mean of all data (ungrouped), squares that distance, multiplies it by the number of data values in that group, $n_i$, and adds the values up. Thus, it measures the VARIABILITY BETWEEN GROUPS.

- $SSE = \sum_{i=1}^{k} \sum_{j=1}^{n_i} (x_{ij} - \bar{x}_i)^2$: the sum of squares for error. For each group, this takes the distance of each data point in the group from the mean of that group, squares that distance, and adds them all up. Thus, it measures the VARIABILITY WITHIN THE GROUPS.

Our goal with the ANOVA test is to compare the variability BETWEEN groups to the variability WITHIN groups; however, we can’t properly compare $SSG$ to $SSE$ directly, since they are based on different numbers of degrees of freedom. So we need to look at the “mean square” for each:

$MSG = \dfrac{SSG}{k - 1} \qquad MSE = \dfrac{SSE}{n - k}$

Here, $n$ is the total sample size (number of data values in all groups together) and $k$ is the number of groups. The denominators, $k - 1$ and $n - k$, represent the “degrees of freedom” (“df” or “dof” for short) for each term. We can then find the F-statistic as:

$F = \dfrac{MSG}{MSE}$

which effectively compares the variability BETWEEN groups (numerator) to the variability WITHIN groups (denominator). Thus, if the null hypothesis is true and the group means are actually equal, we expect the F-statistic to be about 1. Larger values of the F-statistic indicate a larger $MSG$ relative to $MSE$, and thus evidence that at least one mean differs from the others.
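The sums of squares, mean squares, and F-statistic can be computed by hand in a few lines. This sketch uses a tiny illustrative dataset (the same three groups, A = [5, 3], B = [4, 3], and C = [6], that appear in the one-line test later in this lesson) and checks the result against `scipy.stats.f_oneway`.

```python
import numpy as np
import scipy.stats as stats

# Tiny illustrative dataset: three groups
groups = [np.array([5, 3]), np.array([4, 3]), np.array([6])]

all_values = np.concatenate(groups)
grand_mean = all_values.mean()   # mean of all data (ungrouped)
n = len(all_values)              # total sample size
k = len(groups)                  # number of groups

sst = np.sum((all_values - grand_mean) ** 2)                      # total variability
ssg = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)  # between groups
sse = sum(np.sum((g - g.mean()) ** 2) for g in groups)            # within groups

msg = ssg / (k - 1)
mse = sse / (n - k)
f_stat = msg / mse

scipy_f = stats.f_oneway(*groups).statistic
print(f"SST = {sst:.2f}, SSG + SSE = {ssg + sse:.2f}")
print(f"MSG = {msg:.3f}, MSE = {mse:.3f}, F = {f_stat:.3f}, scipy F = {scipy_f:.3f}")
```

Here $SST = 6.8$, $SSG = 4.3$, and $SSE = 2.5$, so the decomposition $SST = SSG + SSE$ holds, and $F = (4.3/2)/(2.5/2) = 1.72$.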

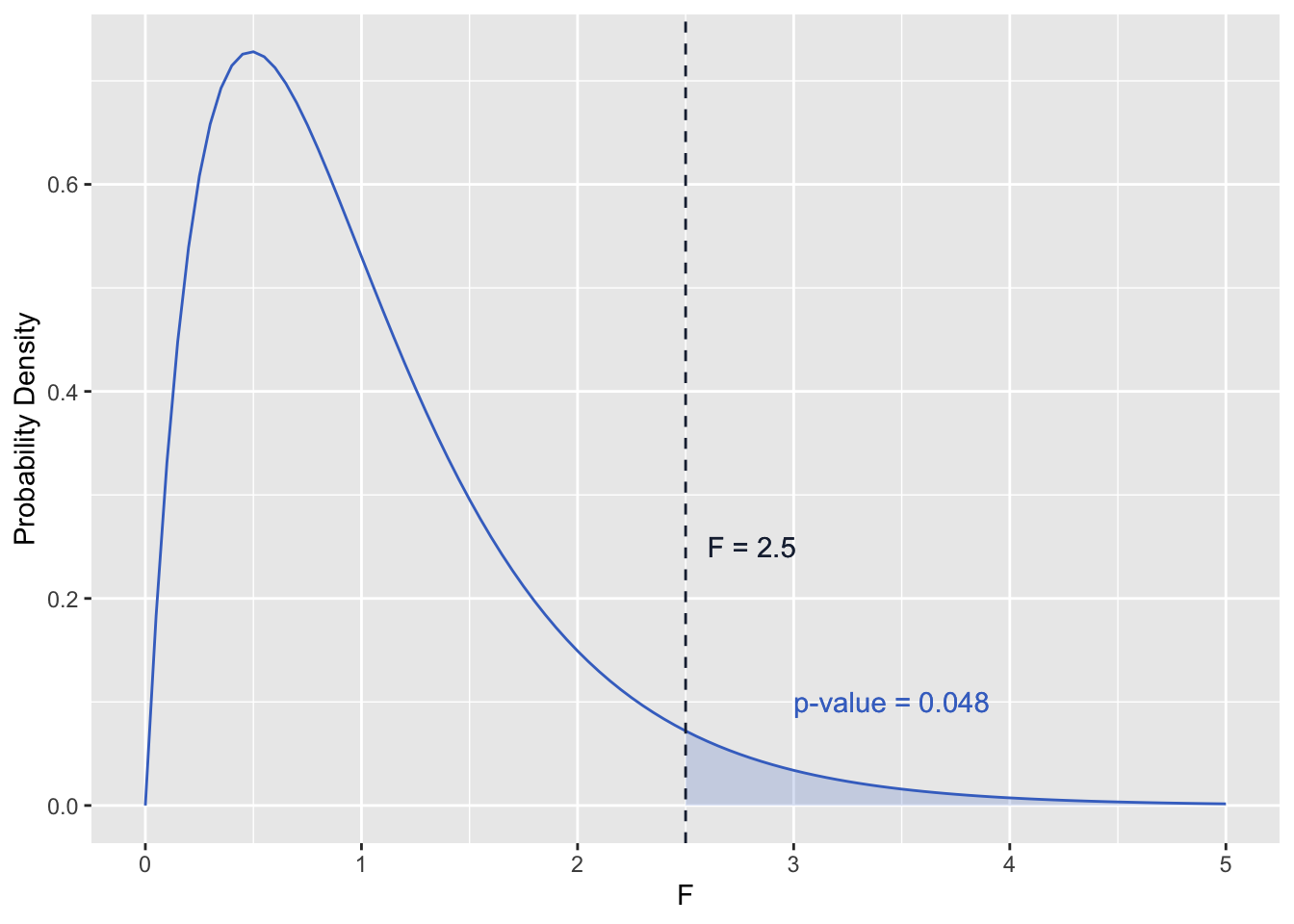

The F-distribution and p-value

The p-value for our ANOVA test depends on the F-statistic above and the degrees of freedom. The F-distribution has two degrees of freedom, $k - 1$ for the numerator and $n - k$ for the denominator, and together they determine the shape of the probability density function for F (an example is pictured below). The p-value is then the area under the probability density function to the right of (GREATER THAN) the F-statistic calculated from the sample data (shaded area in the figure below).
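The right-tail area can be computed with `scipy.stats.f.sf`, passing the numerator and denominator degrees of freedom in that order. The numbers below are illustrative: an F-statistic of 1.72 from 3 groups and 5 data values in total.

```python
import scipy.stats as stats

f_stat = 1.72        # illustrative F-statistic
k, n = 3, 5          # number of groups, total sample size
df_between = k - 1   # numerator degrees of freedom
df_within = n - k    # denominator degrees of freedom

# p-value: area under the F density to the right of the F-statistic
p_value = stats.f.sf(f_stat, df_between, df_within)
print(round(p_value, 4))
```

For this particular pair of degrees of freedom (2 and 2), the right-tail area works out to exactly $1/(1 + F)$, about 0.368, which is far too large to reject the null hypothesis.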

One-way ANOVA Table

To facilitate an understanding of where the variability in the data is coming from, sometimes the results from an ANOVA test are presented in a table, typically taking the following format:

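| Source of Variation | Degrees of Freedom | Sum of Squares | Mean Squares | F | p-value |
|---|---|---|---|---|---|
| BETWEEN | $k - 1$ | $SSG$ | $MSG = SSG/(k - 1)$ | $F = MSG/MSE$ | Area under F-distribution and greater than F-statistic |
| WITHIN | $n - k$ | $SSE$ | $MSE = SSE/(n - k)$ | | |
| TOTAL | $n - 1$ | $SST$ | | | |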

FAQ

One-way ANOVA: One Line Test

Read It: One Line Test

In this lesson, we won’t go into how to perform ANOVA using randomization distributions, but instead we’ll rely on the one-way ANOVA test function that is available in scipy.stats, which is called f_oneway. The basic usage of this function requires one to input an argument for each group or category, and that argument is the series of values belonging to that group. Following the example above, we’d have:

```python
import scipy.stats as stats

A = [5, 3]
B = [4, 3]
C = [6]

stats.f_oneway(A, B, C)
```

Note that we don’t have to calculate the mean for each group; f_oneway does that for us and we just need to supply the data values. More samples can be added in the analysis, just by including them as additional arguments separated by commas. Also, be sure to remove any NA/NaN values first!

The output from f_oneway is the F-statistic and the associated p-value.
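In practice, the data are often stored in a long-format DataFrame with one row per observation. The sketch below assumes hypothetical column names `group` and `value`, drops missing values first, and then splits the values into one array per group so they can be passed to `f_oneway` with the `*` unpacking operator.

```python
import numpy as np
import pandas as pd
import scipy.stats as stats

# Long-format data: one row per observation (illustrative values, one missing entry)
data = pd.DataFrame({
    'group': ['A', 'A', 'B', 'B', 'C', 'C'],
    'value': [5, 3, 4, 3, 6, np.nan],
})

# Remove NaN values, then collect one array of values per group
clean = data.dropna(subset=['value'])
samples = [g['value'].to_numpy() for _, g in clean.groupby('group')]

result = stats.f_oneway(*samples)
print('F-statistic:', result.statistic)
print('p-value:', result.pvalue)
```

Unpacking the list means the same code works no matter how many groups the DataFrame contains.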

Video: L07V08 ANOVA (29:42)

The code for the ANOVA example in the video can be accessed here [11]. Make sure to sign in with your PSU email.

FAQ

One-Way ANOVA vs. Multiple Comparisons

One-way ANOVA is useful when we have three or more groups whose means we want to compare simultaneously. For two groups, we can resort to our usual two-sample comparison of means hypothesis test (Lesson 5). We could, instead of ANOVA, perform multiple two-sample comparison of means hypothesis tests, one for every possible pair of groups. For example, with three groups, 1, 2, and 3, we would have the following set of hypotheses to test:

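| Test | Hypotheses |
|---|---|
| Test 1 | $H_0: \mu_1 = \mu_2$ vs. $H_a: \mu_1 \neq \mu_2$ |
| Test 2 | $H_0: \mu_1 = \mu_3$ vs. $H_a: \mu_1 \neq \mu_3$ |
| Test 3 | $H_0: \mu_2 = \mu_3$ vs. $H_a: \mu_2 \neq \mu_3$ |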

In fact, the number of pairwise tests we would need to perform for $k$ groups is equal to:

$\dbinom{k}{2} = \dfrac{k(k - 1)}{2}$

So for 4 groups, we would need 6 tests, and for 5 groups, we would need 10 tests. The number of tests we need can be quite cumbersome if we have more than a few groups. Thus, ANOVA is an efficient way to test for equality in means across many groups, as it just has the one test:

| Hypothesis | One-way ANOVA |
|---|---|
| $H_0$ | All population means, $\mu_i$, are equal for all categories |
| $H_a$ | At least one $\mu_i$ is not equal to the others |

Type I Error

Furthermore, the multiple pairwise testing approach would increase the chance of committing a Type I error (falsely rejecting the null hypothesis) because there would be multiple “attempts” at generating a p-value that falls below the significance level by random chance. For example, with the three tests above at the 0.05 significance level, if all three null hypotheses are true, the expected number of tests that commit a Type I error is $3 \times 0.05 = 0.15$. If we treat the tests as independent, the chance of at least one test falsely rejecting the null is $1 - 0.95^3 \approx 0.14$, or roughly 14%, nearly three times the 5% we intended.
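The arithmetic above generalizes to any number of groups. This sketch uses `math.comb` for the number of pairwise tests and, as in the text, assumes all null hypotheses are true and treats the tests as independent when computing the chance of at least one false rejection.

```python
from math import comb

alpha = 0.05
summary = {}
for k in [3, 4, 5]:
    m = comb(k, 2)                      # number of pairwise tests, k(k - 1)/2
    expected_errors = m * alpha         # expected Type I errors if every H0 is true
    p_any = 1 - (1 - alpha) ** m        # P(at least one), assuming independent tests
    summary[k] = (m, expected_errors, p_any)
    print(f"{k} groups: {m} tests, expected Type I errors = {expected_errors:.2f}, "
          f"P(at least one) ~ {p_any:.3f}")
```

With 5 groups, the 10 pairwise tests would give roughly a 40% chance of at least one false rejection, compared with the single 5% risk of one ANOVA test.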

Which Group?

Note that the ANOVA test doesn’t indicate which group, or groups, have means that aren’t equal to the others. The test just indicates that there is some significant inequality in the means. Usually, one can identify the standout group(s) through visualization (for example, a bar chart or comparative boxplots). If it is still not clear which group(s) are different, multiple comparison of means tests can be performed.
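A sketch of the follow-up pairwise comparisons: the measurements below are hypothetical, and the choice of Welch's two-sample t-test (`equal_var=False`, which does not assume equal variances) is our own assumption; the Lesson 5 version of the test may differ. Keep the Type I error concern from the previous section in mind when reading several p-values at once.

```python
from itertools import combinations

import scipy.stats as stats

# Hypothetical measurements for three groups (illustrative values only)
groups = {
    'Group 1': [12.1, 11.8, 12.5, 12.0, 11.9],
    'Group 2': [12.2, 12.4, 11.9, 12.3, 12.1],
    'Group 3': [13.0, 13.4, 12.9, 13.2, 13.1],
}

pairwise_p = {}
for (name_a, a), (name_b, b) in combinations(groups.items(), 2):
    # Welch's two-sample t-test for each pair of groups
    result = stats.ttest_ind(a, b, equal_var=False)
    pairwise_p[(name_a, name_b)] = result.pvalue
    print(f"{name_a} vs {name_b}: p-value = {result.pvalue:.4f}")
```

In this made-up example, only the comparisons involving Group 3 would point to a clear difference, matching what comparative boxplots of these values would suggest.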

FAQ

Summary and Final Tasks

Summary

This lesson has covered two types of hypothesis tests to use when you have more than two samples or groups:

- Chi-square test: for samples/groups of a categorical variable

- ANOVA: for samples/groups of a quantitative variable

Thus, chi-square tests compare proportions across the groups, while ANOVA compares means. The hypotheses for these tests are:

| Hypothesis | Chi-Square Test | One-way ANOVA |
|---|---|---|
| Null | Specifies the proportions, $p_1, p_2, \ldots, p_k$, for all categories | All population means, $\mu_i$, are equal for all categories |
| Alternative | At least one $p_i$ is not as specified | At least one $\mu_i$ is not equal to the others |

Assess it: Check Your Knowledge Quiz

Reminder - Complete all of the Lesson 7 tasks!

You have reached the end of Lesson 7! Double-check the to-do list on the Lesson 7 Overview page to make sure you have completed all of the activities listed there before you begin Lesson 8.