Lessons

Lesson 1: Introduction to GIS modeling and Python

The links below provide an outline of the material for this lesson. Be sure to carefully read through the entire lesson before returning to Canvas to submit your assignments.

Lesson 1 Overview

Welcome to Geography 485. Over the next ten weeks, you'll work through four lessons and a final project dealing with ArcGIS automation in Python. Each lesson will contain readings, examples, and projects. Since the lessons are two weeks long, you should plan between 20 - 30 hours of work to complete them, although this number may vary depending on your prior programming experience. See the Course Schedule section of this syllabus, below, for a schedule of the lessons and course projects.

As with GEOG 483 and GEOG 484, the lessons in this course are project-based with key concepts embedded within. However, because of the nature of computer programming, there is no way this course can follow the step-by-step instructional design of the previous courses. You will probably find the course to be more challenging than our courses on GIS fundamentals. For that reason, it is more important than ever that you stay on schedule and take advantage of the course message boards and private email. It's quite likely that you will get stuck somewhere during the course, so before getting hopelessly frustrated, please seek help from me or your classmates!

I hope that by now that you have reviewed our Orientation and Syllabus for an important course site overview. Before we begin our first project, let me share some important information about the textbook and a related Esri course.

Textbook and Readings

The textbook for this course is Python Scripting for ArcGIS Pro by Paul A. Zandbergen. As you read through Zandbergen's book, you'll see material that closely parallels what is in the Geog 485 lessons. This isn't necessarily a bad thing; when you are learning a subject like programming, it can be helpful to have the same concept explained from two angles.

My advice about the readings is this: Read the material on the Geog 485 lesson pages first. If you feel like you have a good understanding from the lesson pages, you can skim through some of the more lengthy Zandbergen readings. If you struggled with understanding the lesson pages, you should pay close attention to the Zandbergen readings and try some of the related code snippets and exercises. I suggest you plan about 1 - 2 hours per week of reading if you are going to study the chapters in detail.

In all cases, you should get a copy of the textbook because it is a relevant and helpful reference.

Note:

The Zandbergen textbook is up to the 3rd Edition as of Summer 2024. The free copy of the book available through the PSU library is the 2nd Edition. Differences between the two editions are relatively minor and you may assume that the section numbers referenced here in the lessons are applicable to both editions unless otherwise noted.

You may see that in Esri's documentation, shapefiles are also referred to as "feature classes." When you see the term "feature class," consider it to mean a vector dataset that can be used in ArcGIS.

Another type of standalone dataset dating back to the early days of ArcGIS is the ArcInfo coverage. Like the shapefile, the coverage consists of several files that work together. Coverages are definitely an endangered species, but you might encounter them if your organization used ArcInfo Workstation in the past.

Esri Virtual Campus Course Python for Everyone

There is a free Esri Virtual Campus course, Python for Everyone [1], that introduces a lot of the same things you'll learn this term in Geog 485. Python for Everyone consists of a series of short videos and exercises, some of which might help toward the projects. If you want to get a head start, or you want some reinforcement of what we're learning from a different point of view, it would be worth your time to complete that Virtual Campus course.

All you need in order to access the course is an Esri Global Account, which you can create for free. You do not need to obtain an access code from Penn State.

The course moves through ideas very quickly and covers a range of concepts that we'll spend 10 weeks studying in depth, so don't worry if you don't understand it all immediately or if it seems overwhelming. You might find it helpful to quickly review the course again near the end of Geog 485 to review what you've learned.

Questions?

If you have any questions now or at any point during this week, please feel free to post them to the Lesson 1 Discussion Forum. (To access the forums, return to Canvas via the Canvas link. Once in Canvas, you can navigate to the Modules tab, and then scroll to the Lesson 1 Discussion Forum.) While you are there, feel free to post your own responses if you are able to help a classmate.

Now, let's begin Lesson 1.

Lesson 1 Checklist

This lesson is two weeks in length. (See the Calendar in Canvas for specific due dates.) To finish this lesson, you must complete the activities listed below. You may find it useful to print this page so that you can follow along with the directions.

- Download the Lesson 1 data [2] and extract it to C:\PSU\Geog485\Lesson1 or a similar path that is easy to remember.

- Work through the online sections of Lesson 1.

- Read the assigned pages from the Zandbergen textbook. In the online lesson pages, I have inserted instructions on the sections to read from Zandbergen when it is most appropriate to read them.

- Complete Project 1, Part I and submit the deliverables to the course drop box in Canvas.

- Complete Project 1, Part II and submit the deliverables to the course drop box in Canvas.

- Complete the Lesson 1 Quiz in Canvas.

Do items 1 - 3 (including any of the practice exercises you want to attempt) during the first week of the lesson. You will need the second week to concentrate on the project and quiz.

Lesson objectives

By the end of this lesson, you should:

- be able to create automated workflows in ArcGIS Pro ModelBuilder;

- be familiar with the PyScripter development environment;

- know some of Python’s most important basic data types and how to use variables in Python scripts;

- be familiar with the basic concepts of object oriented programming (e.g. classes & inheritance);

- be able to use arcpy tool functions to achieve basic spatial analysis tasks; and

- know how to create a simple script tool and pass a parameter to a script.

1.1.1 The need for GIS automation

A geographic information system (GIS) can manipulate and analyze spatial datasets with the purpose of solving geographic problems. GIS analysts perform all kinds of operations on data to make it useful for solving a focused problem. This includes clipping, reprojecting, buffering, merging, mosaicking, extracting subsets of the data, and hundreds of other operations. In the ArcGIS software used in this course, these operations are known as geoprocessing and they are performed using tools.

Successful GIS analysis requires selecting the most appropriate tools to operate on your data. ArcGIS uses a toolbox metaphor to organize its suite of tools. You pick the tools you need and run them in the proper order to make your finished product.

Suppose you’re responsible for selecting sites for a chain restaurant. You might use one tool to select land parcels along a major thoroughfare, another tool to select parcels no smaller than 0.25 acres, and other tools for other selection criteria. If this selection process were limited to a small area, it would probably make sense to perform the work manually.

However, let’s suppose you’re responsible for carrying out the same analysis for several areas around the country. Because this scenario involves running the same sequence of tools for several areas, it is one that lends itself well to automation. There are several major benefits to automating tasks like this:

- Automation makes work easier. Once you automate a process, you don't have to put in as much effort remembering which tools to use or the proper sequence in which they should be run.

- Automation makes work faster. A computer can open and execute tools in sequence much faster than you can accomplish the same task by pointing and clicking.

- Automation makes work more accurate. Any time you perform a manual task on a computer, there is a chance for error. The chance multiplies with the number and complexity of the steps in your analysis, as well as the fatigue incurred by repeating the task many times. In contrast, once an automated task is configured, a computer can be trusted to perform the same sequence of steps every time for a potentially endless number of cycles.

The ArcGIS platform provides several ways for users to automate their geoprocessing tasks. These options differ in the amount of skill required to produce the automated solution and in the range of scenarios that each can address. The text below touches briefly on these automation options, in order from requiring the least coding skill to the most.

The first option is to construct a model using ModelBuilder. ModelBuilder is an interactive program that allows the user to “chain” tools together, using the output of one tool as input in another. Perhaps the most attractive feature of ModelBuilder is that users can automate rather complex GIS workflows without the need for programming. You will learn how to use ModelBuilder early in this course.

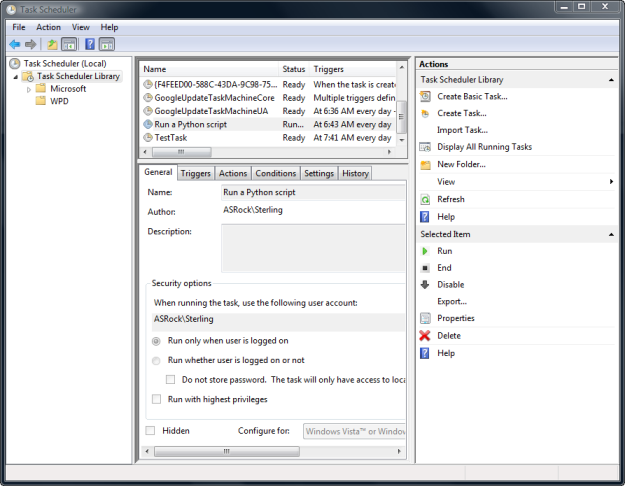

Some automation tasks require greater flexibility than is offered by ModelBuilder, and for these scenarios it's recommended that you write short computer programs, or scripts. The bulk of this course is concerned with script writing.

A script typically executes some sequential procedure of steps. Within a script, you can run GIS tools individually or chain them together. You can insert conditional logic in your script to handle cases where different tools should be run depending on the output of the previous operation. You can also include iteration, or loops, in a script to repeat a single action as many times as needed to accomplish a task.

There are special scripting languages for writing scripts, including Python, JScript, and Perl. Often these languages have more basic syntax and are easier to learn than other languages such as C, Java, or Visual Basic.

Although ArcGIS supports various scripting languages for working with its tools, Esri emphasizes Python in its documentation and includes Python with the ArcGIS Desktop and Pro installations. In this course, we’ll be working strictly with Python for this reason, as well as the fact that Python can be used for many other file and data manipulation tasks outside of ArcGIS. You’ll learn the basics of the Python language, how to write a script, and how to manipulate and analyze GIS data using scripts. Finally, you’ll apply your new Python knowledge to a final project, where you write a script of your choosing that you may be able to apply directly to your work.

A more recently developed automation option available on the ArcGIS platform is the ArcGIS API (Application Programming Interface) for Python. This is an environment in which Python scripting is better integrated with Esri's cloud- and server-based technologies (ArcGIS Online, Portal for ArcGIS, ArcGIS Enterprise). Code written to interact with the Python API is often written in a "notebook" environment, such as Jupyter Notebook. In a notebook environment, code can be executed in a stepwise fashion, with intermediate results displayed in between the Python statements. The use of the Python API in a Jupyter Notebook environment is a topic in our Advanced Python class, GEOG 489.

For geoprocessing tasks that require support for user interaction with the map or other UI elements, the ArcGIS Pro SDK (Software Development Kit) offers the ability to add custom tools to the Pro interface. The Pro SDK requires programming in the .NET framework using a .NET language such as Visual Basic .NET or C#. Working with this SDK's object model provides greater flexibility in terms of what can be built, as compared to writing Python scripts around their geoprocessing framework. The tradeoff is a higher level of complexity involved in the coding.

Finally, developers who want to create their own custom GIS applications, typically focused on delivering much narrower functionality than the one-size-fits-all ArcGIS Pro, can develop apps using the ArcGIS Maps SDKs (previously called Runtime SDKs). The Maps SDKs make it possible to author apps for Windows, Mac, or Linux desktop machines, as well as for iOS and Android mobile devices, again involving a greater level of effort than your typical Python geoprocessing script. In the past, there was a native version for MacOS but that has been retired. Instead, programmers can use the Maps SDK for Java version to develop for MacOS.

This first lesson will introduce you to concepts in both model building and script writing. We’ll start by just getting familiar with how tools run in ArcGIS and how you can use those tools in the ModelBuilder interface. Then, we’ll cover some of the basics of Python and see how the tools can be run within scripts.

1.2.1 Exploring the toolbox

The ArcGIS software that you use in this course contains hundreds of tools that you can use to manipulate and analyze GIS data. Back before ArcGIS had a graphical user interface (GUI), people would access these tools by typing commands. Nowadays, you can point and click your way through a whole hierarchy of toolboxes using the Catalog window in ArcGIS Pro.

Although you may have seen them before, let’s take a quick look at the toolboxes:

- Open a new or existing project in ArcGIS Pro.

- If the Geoprocessing pane isn't visible, click the Analysis tab, then click Tools.

- In the Geoprocessing pane, click the Toolboxes tab heading. Notice that the tools are organized into toolboxes and toolsets. For example, the IDW and Spline tools can be found in the Interpolation toolset within the Spatial Analyst toolbox. Sometimes it’s faster to use the Find Tools box at the top of the Geoprocessing tab to find the tool you need instead of browsing this tree.

-

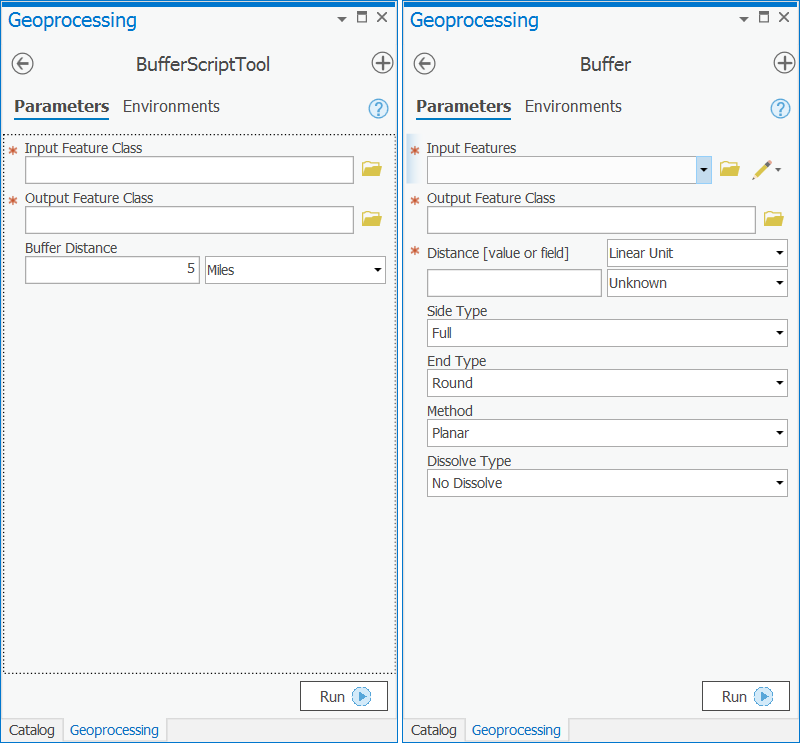

Let’s examine a tool. Expand Analysis Tools > Proximity > Buffer, and double-click the Buffer tool to open it.

You've probably seen this tool in past courses, but this time, really pay attention to the components that make up the user interface. Specifically, you’re looking at a dialog with many fields. Each geoprocessing tool has required inputs and outputs. Those are indicated by the red asterisks. They represent the minimum amount of information you need to supply in order to run a tool. For the Buffer tool, you’re required to supply an input features location (the features that will be buffered) and a buffer distance. You’re also required to indicate an output feature class location (for the new buffered features).

Many tools also have optional parameters. You can modify these if you want, but if you don’t supply them, the tool will still run using default values. For the Buffer tool, optional parameters are the Side Type, End Type, Method, and Dissolve Type. Optional parameters are typically specified after required parameters.

-

Hover your mouse over any of the tool parameters. You should see a blue "info" icon to the left of the parameter. Moving your mouse over that icon will show a brief description of the parameter in a pop-out window.

If you’re not sure what a parameter means, this is a good way to learn. For example, viewing the pop-out documentation for the End Type parameter will show you an explanation of what this parameter means and list the two options: Round and Flat.

If you need even more help, each tool is more expansively documented in the ArcGIS Pro web-based help system. You can access a tool's documentation in this system by clicking on the blue ? icon in the upper-right of the tool dialog, which will open the help page in your default web browser.

- Open the web-based help page for the Buffer tool. As mentioned, the help page will provide extensive documentation of the tool's usage. In the upper right of the help page, you should see a list of links that are shortcuts to various sections of the tool's documentation.

- Click the Parameters link to jump to that section. There you should find that the information is divided between two tabs (Dialog and Python), with the Dialog tab displayed by default. The Dialog tab focuses on providing help to those who are executing the tool through the ArcGIS Pro GUI, while the Python tab focuses on providing help to those who are invoking it through a Python script.

- Click on the Python tab, since that's our focus in this class. You should see the name, a description, and the data type associated with each of the tool's parameters. That information is certainly helpful, but often even more helpful is the Code sample section that appears below the parameter list. Every tool's help page has these programming examples showing ways to run the tool from within a script, and they will be extremely valuable to you as you complete the assignments in this course.

1.2.2 Environments for accessing tools

You can access ArcGIS geoprocessing tools in several different ways:

- Sometimes you just need to run a tool once, or you want to experiment with a tool and its parameters. In this case, you can open the tool directly from the Geoprocessing pane and use the tool’s graphical user interface (GUI, pronounced gooey) to fill in the parameters.

- ModelBuilder is also a GUI application where you can set up tools to run in a given sequence, using the output of one tool as input to another tool.

- If you’re familiar with the tool and want to use it quickly in Pro, you may prefer the Python window approach. You type the tool name and required parameters into a command window. You can use this window to run several tools in a row and declare variables, effectively doing simple scripting.

- If you want to run the tool automatically, repeatedly, or as part of a greater logical sequence of tools, you can run it from a script. Running a tool from a script is the most powerful and flexible option.

We’ll start with the simplest of these cases, running a tool from its GUI, and work our way up to scripting.

1.2.3 Running a tool from its GUI

Let’s start by opening a tool from the Catalog pane and running it using its graphical user interface (GUI).

- If, by chance, you still have the Buffer tool open from the previous section, close it for now so you can add some data.

- Create a folder on your machine at C:\PSU\Geog485. If you use a different path, be sure to substitute your path in the following examples.

- Download the Lesson 1 data [2] and extract Lesson1.zip into your new folder so that the data is under the path C:\PSU\Geog485\Lesson1. This folder contains a variety of datasets you will use throughout the lesson.

- Open a new project in Pro if you don't have one open already.

- Click the Add Data button

and browse to the data you just extracted. Add the us_boundaries and us_cities shapefiles.

and browse to the data you just extracted. Add the us_boundaries and us_cities shapefiles. - Open the Geoprocessing pane if necessary and browse to the Buffer tool as you did in the previous section.

- Double-click the Buffer tool to open it.

-

Examine the first required parameter: Input Features. Click the Browse button

and browse to the path of your cities dataset C:\PSU\Geog485\Lesson1\us_cities.shp. Notice that once you do this, a name is automatically supplied for the Output Feature Class (and the output path is the same as the input features). The software does this for your convenience only, and you can change the name/path if you want.

and browse to the path of your cities dataset C:\PSU\Geog485\Lesson1\us_cities.shp. Notice that once you do this, a name is automatically supplied for the Output Feature Class (and the output path is the same as the input features). The software does this for your convenience only, and you can change the name/path if you want.A more convenient way to supply the Input Features is to just select the cities map layer from the dropdown menu. This dropdown automatically contains all the layers in your map. However, in this example, we browsed to the path of the data because it’s conceptually similar to how we’ll provide the paths in the command line and scripting environments.

- Now you need to supply the Distance parameter for the buffer. For this run of the tool, set a Linear unit of 5 miles. When we run the tool from the other environments, we’ll make the buffer distance slightly larger, so we know that we got distinct outputs.

- The rest of the parameters are optional. The Side Type parameter applies only to lines and polygons, so it is not even available for setting in the GUI environment when working with city points. However, change the Dissolve Type to Dissolve all output features into a single feature. This combines overlapping buffers into a single polygon.

- Leave the Method set to Planar. We're not going to take the earth's curvature into account for this simple example.

- Click Run to execute the tool.

- The tool should take just a few seconds to complete. Examine the output that appears on the map, and do a “sanity check” to make sure that buffers appear around the cities, and they appear to be about 5 miles in radius. You may need to zoom in to a single state in order to see the buffers.

- Click on Pro's Analysis tab, then on History (in the Geoprocessing button group). This will open the History tab in Pro's Catalog pane, which lists messages about successes or failures of all recent tools that you've run.

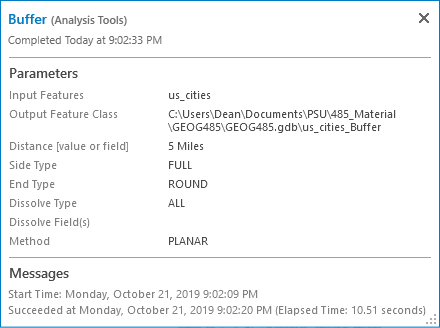

-

Hover over the Buffer tool entry in this list to see a pop-out window. This window lists the tool parameters, the time of completion, and any problems that occurred when running the tool (see Figure 1.1). These messages can be a big help later when you troubleshoot your Python scripts. The text of these messages is available whether you run the tool from the GUI, from the Python window in Pro, or from scripts.

1.2.4 Modeling with tools

When you work with geoprocessing, you’ll frequently want to use the output of one tool as the input into another tool. For example, suppose you want to find all fire hydrants within 200 meters of a building. You would first buffer the building, then use the output buffer as a spatial constraint for selecting fire hydrants. The output from the Buffer tool would be used as an input to the Select by Location tool.

A set of tools chained together in this way is called a model. Models can be simple, consisting of just a few tools, or complex, consisting of many tools and parameters and occasionally some iterative logic. Whether big or small, the benefit of a model is that it solves a unique geographic problem that cannot be addressed by one of the “out-of-the-box” tools.

In ArcGIS, modeling can be done either through the ModelBuilder graphical user interface (GUI) or through code, using Python. To keep our terms clear, we’ll refer to anything built in ModelBuilder as a “model” and anything built through Python as a “script.” However, it’s important to remember that both things are doing modeling.

1.3.1 Why learn ModelBuilder?

ModelBuilder is Esri’s graphical interface for making models. You can drag and drop tools from the Catalog pane into the model and “connect” them, specifying the order in which they should run.

Although this is primarily a programming course, we’ll spend some time in ModelBuilder during the first lesson for two reasons:

- ModelBuilder is a nice environment for exploring the ArcGIS tools, learning how tool inputs and outputs are used, and visually understanding how GIS modeling works. When you begin using Python, you will not have the same visual assistance to see how the tools you’re using are connected, but you may still want to draw your model on a whiteboard in a similar fashion to what you saw in ModelBuilder.

- ModelBuilder can frequently reduce the amount of Python coding that you need to do. If your GIS problem does not require advanced conditional and iterative logic, you may be able to get your work done in ModelBuilder without writing a script. ModelBuilder also allows you to export any model to Python code, so if you get stuck implementing some tools within a script, it may be helpful to make a simple working model in ModelBuilder, then export it to Python to see how ArcGIS would construct the code. (Exporting a complex model is not recommended for beginners due to the verbose amount of code that ModelBuilder tends to create when exporting Python).

1.3.2 Opening and exploring ModelBuilder

Let’s get some practice with ModelBuilder to solve a real scenario. Suppose you are working on a site selection problem where you need to select all areas that fall within 10 miles of a major highway and 10 miles of a major city. The selected area cannot lie in the ocean or outside the United States. Solving the problem requires that you make buffers around both the roads and the cities, intersect the buffers, then clip to the US outline. Instead of manually opening the Buffer tool twice, followed by the Intersect tool, then the Clip tool, you can set this up in ModelBuilder to run as one process.

- Create a new Pro project called Lesson1Practice in the C:\PSU\Geog485\Lesson1 folder.

- Add the us_cities, us_roads, and us_boundaries shapefiles from the Lesson 1 data folder that you configured previously in this lesson to a new map.

- In the Catalog pane, right-click your Lesson1Practice toolbox and click New > Model. You’ll see ModelBuilder appear in the middle of the Pro window.

- Under the ModelBuilder tab, click the Properties button.

- For the Name, type SuitableLand and for the Label, type Find Suitable Land. The label is what everyone will see when they open your tool from the Catalog. That’s why it can contain spaces. The name is what people will use if they ever run your model from Python. That’s why it cannot contain spaces.

-

Click OK to dismiss the model Properties dialog.

You now have a blank canvas on which you can drag and drop the tools. When creating a model (and when writing Python scripts), it’s best to break your problem into manageable pieces. The simple site selection problem here can be thought of as four steps:

- Buffer the cities

- Buffer the roads

- Intersect the buffers

- Clip to the US boundary

Let’s tackle these items one at a time, starting with buffering the cities.

- With ModelBuilder still open, go to the Catalog pane > Geoprocessing tab and browse to Analysis Tools > Proximity.

-

Click the Buffer tool and drag it onto the ModelBuilder canvas. You’ll see a gray rectangular box representing the buffer tool and a gray oval representing the output buffers. These are connected with a line, showing that the Buffer tool will always produce an output data set.

In ModelBuilder, tools are represented with boxes and variables are represented with ovals. Right now, the Buffer tool, at center, is gray because you have not yet supplied the required parameters. Once you do this, the tool and the variable will fill in with color.

- In your ModelBuilder window, double-click the Buffer box. The tool dialog here is the same as if you had opened the Buffer directly from the Catalog pane. This is where you can supply parameters for the tool.

- For Input Features, browse to the path of your us_cities shapefile on disk. The Output Feature Class will populate automatically.

- For Distance [value or field], enter 10 miles.

- For Dissolve Type, select Dissolve all output features..., then click OK to close the Buffer dialog. The model elements (tools and variables) should be filled in with color, and you should see a new element to the left of the tool representing the input cities feature class.

-

An important part of working with ModelBuilder is supplying clear labels for all the elements. This way, if you share your model, others can easily understand what will happen when it runs. Supplying clear labels also helps you remember what the model does, especially if you haven’t worked with the model for a while.

In ModelBuilder, right-click the us_cities.shp element (blue oval, at far left) and click Rename. Name this element "US Cities."

- Right-click the Buffer tool (yellow-orange box, at center) and click Rename. Name this “Buffer the cities.”

-

Right-click the buffer output element (green oval, at far right) and click Rename. Name this “Buffered cities.” Your model should look like this.

Figure 1.2 The model's appearance following step 15, above.

Figure 1.2 The model's appearance following step 15, above. - Save your model (ModelBuilder tab > Save). This is the kind of activity where you want to save often.

-

Practice what you just learned by adding another Buffer tool to your model. This time, configure the tool so that it buffers the us_roads shapefile by 10 miles. Remember to set the Dissolve type to Dissolve all output features... and to add meaningful labels. Your model should now look like this.

Figure 1.3 The model's appearance following step 17, above.

Figure 1.3 The model's appearance following step 17, above. - The next task is to intersect the buffers. In the Catalog pane's list of toolboxes, browse to Analysis Tools > Overlay and drag the Intersect tool onto your model. Position it to the right of your existing Buffer tools.

- Here’s the pivotal moment when you chain the tools together, setting the outputs of your Buffer tools as the inputs of the Intersect tool. Click the Buffered cities element and drag over to the Intersect element. If you see a small menu appear, click Input Features to denote that the buffered cities will act as inputs to the Intersect tool. An arrow will now point from the Buffered cities element to the Intersect element.

- Use the same process to connect the Buffered roads to the Intersect element. Again, if prompted, click Input Features.

-

Rename the output of the Intersect operation "Intersected buffers." If the text runs onto multiple lines, you can click and drag the edges of the element to resize it. You can also rearrange the elements on the page however you like. Because models can get large, ModelBuilder contains several navigation buttons for zooming in and zooming to the full extent of the model in the View button group on the ribbon. Your model should now look like this:

Figure 1.4 The model's appearance following step 21, above.

Figure 1.4 The model's appearance following step 21, above. - The final step is to clip the intersected buffers to the outline of the United States. This prevents any of the selected area from falling outside the country or in the ocean. In the Catalog pane, browse to Analysis Tools > Extract and drag the Clip tool into ModelBuilder. Position this tool to the right of your existing tools.

- As you did above, set the Intersected buffers as an input to the Clip tool, choosing Input Features when prompted. Notice that even when you do this, the Clip tool is not ready to run (it’s still shown as a gray rectangle, located at right). You need to supply the clip features, which is the shape to which the buffers will be clipped.

- In ModelBuilder (not in the Catalog pane), double-click the Clip tool. Set the Clip Features by browsing to the path of us_boundaries.shp, then click OK to dismiss the dialog. You’ll notice that a blue oval appeared, representing the Clip Features (US Boundaries).

-

Set meaningful labels for the remaining tools as shown below. Below is an example of how you can label and arrange the model elements.

Figure 1.5 The completed model with the clip tool included.

Figure 1.5 The completed model with the clip tool included. - Double-click the final output element (named "Suitable land" in the image above) and set the path to C:\PSU\Geog485\Lesson1\suitable_land.shp. This is where you can expect your model output feature class to be written to disk.

- Right-click Suitable land and click Add to display.

- Save your model again.

- Test the model by clicking the Run button.

You’ll see the by-now-familiar geoprocessing message window that will report any errors that may occur. ModelBuilder also gives you a visual cue of which tool is running by turning the tool red. (If the model crashes, try closing ModelBuilder and running the model by double-clicking it from the Catalog pane. You'll get a message that the model has no parameters. This is okay [and true, as you'll learn below]. Go ahead and run the model anyway.)

You’ll see the by-now-familiar geoprocessing message window that will report any errors that may occur. ModelBuilder also gives you a visual cue of which tool is running by turning the tool red. (If the model crashes, try closing ModelBuilder and running the model by double-clicking it from the Catalog pane. You'll get a message that the model has no parameters. This is okay [and true, as you'll learn below]. Go ahead and run the model anyway.) -

When the model has finished running (it may take a while), examine the output on the map. Zoom into Washington state to verify that the has Clip worked on the coastal areas. The output should look similar to this.

Figure 1.6 The model's output in ArcGIS Pro.

Figure 1.6 The model's output in ArcGIS Pro.

That’s it! You’ve just used ModelBuilder to chain together several tools and solve a GIS problem.

You can double-click this model anytime in the Catalog pane and run it just as you would a tool. If you do this, you’ll notice that the model has no parameters; you can’t change the buffer distance or input features. The truth is, our model is useful for solving this particular site-selection problem with these particular datasets, but it’s not very flexible. In the next section of the lesson, we’ll make this model more versatile by configuring some of the variables as input and output parameters.

1.3.3 Model parameters

Most tools, models, and scripts that you create with ArcGIS have parameters. Input parameters are values with which the tool (or model or script) starts its work, and output parameters represent what the tool gives you after its work is finished.

A tool, model, or script without parameters is only good in one scenario. Consider the model you just built that used the Buffer, Intersect, and Clip tools. This model was hard-coded to use the us_cities, us_roads, and us_boundaries shapefiles and output a shapefile called suitable_land. In other words, if you wanted to run the model with other datasets, you would have to open ModelBuilder, double-click each element (US Cities, US Roads, US Boundaries, and Suitable land), and change the paths that were written directly into the model. You would have to follow a similar process if you wanted to change the buffer distances, too, since those were hard-coded to 10 miles.

Let’s modify that model to use some parameters, so that you can easily run it with different datasets and buffer distances.

- If it's not open already, open the project C:\PSU\Geog485\Lesson1\Lesson1Practice.aprx in ArcGIS Pro.

- In the Catalog window, find the model you created earlier in the lesson, which should be under Toolboxes > Lesson1Practice.atbx > Find Suitable Land.

- Right-click the model Find Suitable Land and click Copy. Now, right-click the Lesson1Practice toolbox and click Paste. This creates a new copy of your model that you can work with to create model parameters. Using a copy of the model like this allows you to easily start over if you make a mistake.

- Rename the copy of your model Find Suitable Land With Parameters or something similar.

- In your Lesson 1 toolbox, right-click Find Suitable Land With Parameters and click Edit. You'll see the model appear in ModelBuilder.

- Right-click the element US Cities (should be a blue oval) and click Parameter. This means that whoever runs the model must specify the cities dataset to use before the model can run.

- You need a more general name for this parameter now, so right-click the US Cities element and click Rename. Change the name to just "Cities."

-

Even though you "parameterized" the cities, your model still defaults to using the C:\PSU\Geog485\Lesson1\us_cities.shp dataset. This isn't going to make much sense if you share your model or toolbox with other people because they may not have the same us_cities shapefile, and even if they do, it probably won't be sitting at the same path on their machines.

To remove the default dataset, double-click the Cities element and delete the path, then click OK. Some of the elements in your model may turn gray. This signifies that a value has to be provided before the model can successfully run.

- Now you need to create a parameter for the distance of the buffer to be created around the cities. Right-click the element that you named "Buffer the cities" and click Create Variable > From Parameter > Distance [value or field].

- The previous step created a new element Distance [value or field]. Rename this element to "Cities buffer distance" and make it a model parameter. (Review the steps above if you're unsure about how to rename an element or make it a model parameter.) For this element, you can leave the default at 10 miles. Your model should look similar to this, although the title bar of your window may vary:

Figure 1.7 The "Find Suitable Land With Parameters" model following Step 10, above, and showing two parameters.

Figure 1.7 The "Find Suitable Land With Parameters" model following Step 10, above, and showing two parameters.

Click link to expand for a text description of Figure 1.7. - Repeating what you learned above, rename the US Roads element to "Roads," make it a model parameter, and remove the default value.

- Repeating what you learned above, make a parameter for the Roads buffer distance. Leave the default at 10 miles.

- Repeating what you learned above, rename the US Boundaries element to Boundaries, make it a model parameter, and remove the default value. Your model should look like this (notice the five parameters indicated by "P"s):

Figure 1.8 The "Find Suitable Land With Parameters" model following Step 13, above, and showing five parameters.

Figure 1.8 The "Find Suitable Land With Parameters" model following Step 13, above, and showing five parameters.

Click link to expand for a text description of Figure 1.8. - Save and close your model.

-

Double-click your model Lesson 1 > Find Suitable Land With Parameters and examine the tool dialog. It should look similar to this:

Figure 1.9 The model interface, or tool dialog, for the model "Find Suitable Land With Parameters."

Figure 1.9 The model interface, or tool dialog, for the model "Find Suitable Land With Parameters."People who run this model will be able to browse to any cities, roads, and boundaries datasets, and will be able to control the buffer distance. The red asterisks indicate parameters that must be supplied with valid values before the model can run.

- Test your model by supplying the us_cities, us_roads, and us_boundaries shapefiles for the model parameters. If you like, you can try changing the buffer distance.

The above exercise demonstrated how you can expose values as parameters using ModelBuilder. You need to decide which values you want the user to be able to change and designate those as parameters. When you write Python scripts, you'll also need to identify and expose parameters in a similar way.

1.3.4 Advanced geoprocessing and ModelBuilder concepts

By now, you've had some practice with ModelBuilder, and you're about ready to get started with Python. This page of the lesson contains some optional advanced material that you can read about ModelBuilder. This is particularly helpful if you anticipate using ModelBuilder frequently in your employment. Some of the items are common to the ArcGIS geoprocessing framework, meaning that they also apply when writing Python scripts with ArcGIS.

Managing intermediate data

GIS analysis sometimes gets messy. Most of the tools that you run produce an output dataset, and when you chain many tools together, those datasets start piling up on disk. Esri has programmed ModelBuilder's default behavior such that when a model is run from a GUI interface, all datasets besides the final output -- referred to as intermediate data -- are automatically deleted. If, on the other hand, the model is run from ModelBuilder, intermediate datasets are left in their specified locations.

When running a model on another file system, specifying paths as we did above can be problematic, since the folder structure is not likely to be the same. This is where the concept of the scratch geodatabase (or scratch folder for file-based data like shapefiles) environment variable can come in handy. A scratch geodatabase is one that is guaranteed to exist on all ArcGIS installations. Unless the user has changed it, the scratch geodatabase will be found at C:\Users\<user>\Documents\ArcGIS\scratch.gdb on Windows 7/8. You can specify that a tool write to the scratch geodatabase by using the %scratchgdb% variable in the path. For example, %scratchgdb%\myOutput.

The following topics from Esri go into more detail on intermediate data and are important to understand as you work with the geoprocessing framework. I suggest reading them once now and returning to them occasionally throughout the course. Some of the concepts in them are easier to understand once you've worked with geoprocessing for a while.

- Intermediate data [3]

- Workspace environments [4]

- Scratch GDB [5]

Looping in ModelBuilder

Looping, or iteration, is the act of repeating a process. A main benefit of computers is their ability to quickly repeat tasks that would otherwise be mundane, cumbersome, or error-prone for a human to repeat and record. Looping is a key concept in computer programming, and you will use it often as you write Python scripts for this course.

ModelBuilder contains a number of elements called Iterators that can do looping in various ways. The names of these iterators, such as For and While actually mimic the types of looping that you can program in Python and other languages. In this course, we'll focus on learning iteration in Python, which may actually be just as easy as learning how to use a ModelBuilder iterator.

To take a peek at how iteration works in ModelBuilder, you can visit the ArcGIS Pro ModeBuilder help book for model iteration [6]. If you're having trouble understanding looping in later lessons, ModelBuilder might be a good environment to visualize what a loop does. You can come back and visit this book as needed.

Readings

Read Zandbergen Chapter 3.1 - 3.6, and 3.8 to reinforce what you learned about geoprocessing and ModelBuilder.

1.4.1 Introducing Python Using the Python Window in ArcGIS

The best way to introduce Python may be to look at a little bit of code. Let’s take the Buffer tool which you recently ran from the Geoprocessing pane and run it in the ArcGIS Python window. This window allows you to type a simple series of Python commands without writing full permanent scripts. The Python Window is a great way to get a taste of Python.

This time, we’ll make buffers of 15 miles around the cities.

- Open a new empty map in ArcGIS Pro.

- Add the us_cities.shp dataset from the Lesson 1 data.

- Under the View tab, click the Python window button

(you can also access this from the Analysis tab but there you have to make sure to use the drop-down arrow to open the Python window rather than a Python Notebook).

(you can also access this from the Analysis tab but there you have to make sure to use the drop-down arrow to open the Python window rather than a Python Notebook). -

Type the following in the Python window (Don't type the >>>. These are just included to show you where the new lines begin in the Python window.)

>>> import arcpy >>> arcpy.Buffer_analysis("us_cities", "us_cities_buffered", "15 miles", "", "", "ALL") -

Zoom in and confirm that the buffers were created.

You’ve just run your first bit of Python. You don’t have to understand everything about the code you wrote in this window, but here are a few important things to note.

The first line of the script -- import arcpy -- tells the Python interpreter (which was installed when you installed ArcGIS) that you’re going to work with some special scripting functions and tools included with ArcGIS. Without this line of code, Python knows nothing about ArcGIS, so you'll put it at the top of all ArcGIS-related code that you write in this class. You technically don't need this line when you work with the Python window in ArcMap because arcpy is already imported, but I wanted to show you this pattern early; you'll use it in all the scripts you write outside the Python window.

The second line of the script actually runs the tool. You can type arcpy, plus a dot, plus any tool name to run a tool in Python. Notice here that you also put an underscore followed by the name of the toolbox that includes the Buffer tool. This is necessary because some tools in different toolboxes actually have the same name (like Clip, which is a tool for clipping vectors in the Analysis toolbox or tool for clipping rasters in the Data Management toolbox).

After you typed arcpy.Buffer_analysis, you typed all the parameters for the tool. Each parameter was separated by a comma, and the whole list of parameters was enclosed in parentheses. Get used to this pattern, since you'll follow it with every tool you run in this course.

In this code, we also supplied some optional parameters, leaving empty quotes where we wanted to take the default values, and truncating the parameter list at the final optional parameter we wanted to set.

How do you know the syntax, or structure, of the parameters to enter? For example, for the buffer distance, should you enter 15MILES, ‘15MILES’, 15 Miles, or ’15 Miles’? The best way to answer questions like these is to return to the Geoprocessing tool reference help topic for the Buffer tool [7]. All of the topics in this reference section have Usage and Code Sample sections to help you understand how to structure the parameters. Optional parameters are enclosed in braces, while the required parameters are not. From the example in this topic, you can see that the buffer distance should be specified as ’15 miles’. Because there is a space in this text, or string, you need to surround it with single quotes.

You might have noticed that the Python window helps you by popping up different options you can type for each parameter. This is called autocompletion, and it can be very helpful if you're trying to run a tool for the first time, and you don't know exactly how to type the parameters. You might have also noticed that some code pops up like (Buffer() analysis and Buffer3D() 3d) when you were typing in the function name. You can use your up/down arrows to highlight the alternatives. If you selected Buffer() analysis, it will appear in your Python window.

Please note that if you do you use the code completion your code will sometimes look slightly different - esri have reorganised how the functions are arranged within arcpy, they work the same they're just in a slightly different place. The "old" way still works though so you might see inconsistencies in this class, online forums, esri's documentation etc. So, in this example, arcpy.Buffer_analysis(...) has changed to arcpy.analysis.Buffer(....) reflecting that the Buffer tool is located within the Analysis toolbox in Pro.

There are a couple of differences between writing code in the Python window and writing code in some other program, such as Notepad or PyScripter. In the Python window, you can reference layers in the map document by their names only, instead of their file paths. Thus, we were able to type "us_cities" instead of something like "C:\\data\\us_cities.shp". We were also able to make up the name of a new layer "us_cities_buffered" and get it added to the map by default after the code ran. If you're going to use your code outside the Python window, make sure you use the full paths.

When you write more complex scripts, it will be helpful to use an integrated development environment (IDE), meaning a program specifically designed to help you write and test Python code. Later in this course, we’ll explore the PyScripter IDE.

Earlier in this lesson, you saw how tools can be chained together to solve a problem using ModelBuilder. The same can be done in Python, but it’s going to take a little groundwork to get to that point. For this reason, we’ll spend the rest of Lesson 1 covering some of the basics of Python.

Readings

Zandbergen covers the Python window and some things you can do with it in Chapter 2.

2nd Edition: 2.7, and 2.9-2.13

3rd Edition: 2.8, and 2.10-2.14

1.4.2 What is Python?

Python is a language that is used to automate computing tasks through programs called scripts. In the introduction to this lesson, you learned that automation makes work easier, faster, and more accurate. This applies to GIS and many other areas of computer science. Learning Python will make you a more effective GIS analyst, but Python programming is a technical skill that can be beneficial to you even outside the field of GIS.

Python is a good language for beginning programming. Python is a high-level language, meaning you don’t have to understand the “nuts and bolts” of how computers work in order to use it. Python syntax (how the code statements are constructed) is relatively simple to read and understand. Finally, Python requires very little overhead to get a program up and running.

Python is an open-source language, and there is no fee to use it or deploy programs with it. Python can run on Windows, Linux, Unix, and Mac operating systems.

In ArcGIS, Python can be used for coarse-grained programming, meaning that you can use it to easily run geoprocessing tools such as the Buffer tool that we just worked with. You could code all the buffer logic yourself, using more detailed, fine-grained programming with the ArcGIS Pro SDK, but this would be time consuming and unnecessary in most scenarios; it’s easier just to call the Buffer tool from a Python script using one line of code.

In addition to the Esri help which describes all of the parameters of a function and how to access them from Python, you can also get Python syntax (the structure of the language) for a tool like this :

- Run the tool interactively (e.g., Buffer) from the Geoprocessing pane with your input data, output data and any other relevant parameters (e.g., distance to buffer).

- Beneath the tool parameters, you should see a notification of the completed running of the tool. Right-click on that notification. (Alternatively, you can click the History button under the Analysis tab to obtain a list of run tools.)

- Pick "Copy Python command."

- Paste the code into the Python code window or PyScripter (see next section) or to see how you would code the same operation you just ran in Pro in Python.

1.4.3 Installing PyScripter

PyScripter is an easy IDE to install for ArcGIS Pro development. If you are using ArcGIS Pro version 2.2 or newer, you will first have to create and activate a clone of the ArcGIS default Python environment (see here [8] for details on this issue). ArcGIS Pro 3.0 changed the way that Pro manages the environments and gives more control to the user. These steps below are written using Pro 3.1 as a reference. If you have an earlier version of Pro, these steps are similar in process but the output destination (step 4.1) will be set for you. To do this, follow these steps below and please let the instructor know if you run into any trouble.

- In the ArcGIS Pro Settings window (often the window that opens when you start Pro), click on Package Manager on the left side to open Pro's Python Package manager.

- Next to the Active Environment near the top right, click on the gear to open a window listing the Python environments.

- Find the environment named arcgispro-py3 (Default) and click on the symbol that looks like two pages or click on the ... to expand a menu to select clone.

- A window will pop up to allow you to name the new environment. The default destination path that Pro automatically sets is ok.

- The destination should be something like C:\Users\<username>\AppData\Local\ESRI\conda\envs\arcgispro-py3-clone. Make a note of this path on your pc because you will need it for PyScripter.

- Wait while it clones the environment.

- If the cloning fails with an error message saying that a Python package couldn't be installed, you may need to run ArcPro as an Administrator (do a right-click -> Run as administrator on the ArcGIS Pro icon) and repeat the steps above (it's also helpful to mouseover that error box in Pro and see if it gives you any additional details).

- When the cloning is done, the new environment "arcgispro-py3-clone" (or whatever you choose to call it - but we'll be assuming it's called the default name) can be activated by clicking on the ... and selecting Activate.

- You may need to restart Pro to get this change to take effect, and you may see a message telling you to restart. If not, then click the ok button to get back to Pro.

Now perform the following steps to install PyScripter:

- Download the installer from PyScripter's soureceforge download page [9], or you can download the install exe from the course files (64bit version [10] or 32bit version [11]). If you have problems with one version, you can try the other version. Let the Instructor know if you have any problems during the installation.

- Follow the steps to install it, checking the additional shortcuts if you want those features. Create a desktop shortcut does what it says it does. A Quick Launch shortcut is placed in the Task Bar, and the Add 'Edit' with PyScripter' to File Explorer context menu adds the option to use PyScripter in the menu that appears when you right click on a file.

- Click the Install button and follow the prompts. Check the Launch PyScripter box and click Finish.

- Once PyScripter opens, we need to point it to the cloned environment we made. To do this, find the Python 3.x (64-bit) on the bottom bar. Your Python might be different, and that is ok for now. Click on the Python 3.x text and it will open a window listing all of the Python environments it found. If your clone is not listed, you need to click on the Add a new Python version button (gear with a plus) and navigate to where it was created. You can get this path by referring to the Package Manager in Pro. Once you get to the parent folder of the environment, select it and it will add it to the list of Unregistered Versions.

- Double click on the environment to activate it. If you get an Abort Error, click ok to close the prompt.

- Verify that your environment is active. It will have a large arrow next to it. Close the Python Versions window. Note that the below image is pointing to the default ArcGIS Pro Python environment. You can use this environment, but adding packages to it has the potential to break Pro. It is recommended that you use a cloned environment.

Figure 1.21 PyScripter setting ArcGIS Pro conda Python environment

Figure 1.21 PyScripter setting ArcGIS Pro conda Python environment - It is a good idea to close the application and restart it to ensure that it saves your activated environment. If the settings revert back to the defaults, repeat the step 4 through 7 again and it should save.

Figure 1.22 PyScripter Installation

Figure 1.22 PyScripter Installation

If you are familiar with another IDE you're welcome to use it instead of PyScripter (just verify that it is using Python 3!) but we recommend that you still install PyScripter to be able to work through the following sections and the sections on debugging in Lesson 2.

1.4.4 Exploring PyScripter

Here’s a brief explanation of the main parts of PyScripter. Before you begin reading, be sure to have PyScripter open, so you can follow along.

When PyScripter opens, you’ll see a large text editor in the right side of the window. We'll come back to this part of the PyScripter interface in a moment. For now, focus on the pane in the bottom called the Python Interpreter. If this window is not open, and not listed as a tab along the bottom, you can open it by going to the top menu and selecting View> IDE Windows> then select Python Interpreter. This console is much like the Python interactive window we saw earlier in the lesson. You can type a line of Python at the In >>> prompt, and it will immediately execute and print the result if there is a printable result. This console can be a good place to practice with Python in this course, and whenever you see some Python code next to the In >>> prompt in the lesson materials, this means you can type it in the console to follow along.

We can experiment here by typing "import arcpy" to import arcpy or running a print statement.

>>> import arcpy

>>> print ("Hello World")

Hello world

You might have noticed while typing in that second example a useful function of the Python Interpreter - code completion. This is where PyScripter, like Pro's Python window, is smart enough to recognize that you're entering a function name, and it provides you with the information about the parameters that function takes. If you missed it the first time, enter print(in the IPython window and wait for a second (or less) and the print function's parameters will appear. This also works for arcpy functions (or those from any library that you import). Try it out with arcpy.Buffer_analysis.

Now let's return to the right side of the window, the Editor pane. It will contain a blank script file by default (module1.py). I say it's a blank script file, because while there is text in the file, that text is delimited by special characters that cause it to be ignored when the script is executed We'll discuss these special characters further later, but for now, it's sufficient to note that PyScripter automatically inserts the character encoding of the file, the time it was created, and the login name of the user running PyScripter. You can add the actual Python statements that you'd like to be executed beneath these bits of documentation. (You can also remove the function and if statement along with the documentation, if you like.)

Among the nice features of PyScripter's editor (and other Python IDEs) is its color coding of different Python language constructs. Spacing and indentation, which are important in Python, are also easy to keep track of in this interface. Lastly, note that the Editor pane is a tabbed environment; additional script files can be loaded using File > New or File > Open.

Above the Editor pane, a number of toolbars are visible by default. The File, Run, and Debug toolbars provide access to many commonly used operations through a set of buttons. The File toolbar contains tools for loading, running, and saving scripts. Finally, the Debug toolbar contains tools for carefully reviewing your code line-by-line to help you detect errors. The Debugging toolbar is extremely valuable to you as a programmer, and you’ll learn how to use it later in this course. This toolbar is one of the main reasons to use an Integrated Development Environment (IDE) instead of writing your code in a simple text editor like Notepad.

1.5.1 Working with variables

It’s time to get some practice with some beginning programming concepts that will help you write some simple scripts in Python by the end of Lesson 1. We’ll start by looking at variables.

Remember your first introductory algebra class where you learned that a letter could represent any number, like in the statement x + 3? This may have been your first exposure to variables. (Sorry if the memory is traumatic!) In computer science, variables represent values or objects you want the computer to store in its memory for use later in the program.

Variables are frequently used to represent not only numbers, but also text and “Boolean” values (‘true’ or ‘false’). A variable might be used to store input from the program’s user, to store values returned from another program, to represent constant values, and so on.

Variables make your code readable and flexible. If you hard-code your values, meaning that you always use the literal value, your code is useful only in one particular scenario. You could manually change the values in your code to fit a different scenario, but this is tedious and exposes you to a greater risk of making a mistake (suppose you forget to change a value). Variables, on the other hand, allow your code to be useful in many scenarios and are easy to parameterize, meaning you can let users change the values to whatever they need.

To see some variables in action, open PyScripter and type this in the Python Interpreter:

>>> x = 2

You’ve just created, or declared, a variable, x, and set its value to 2. In some strongly-typed programming languages, such as Java, you would be required to tell the program that you were creating a numerical variable, but Python assumes this when it sees the 2.

When you hit Enter, nothing happens, but the program now has this variable in memory. To prove this, type:

>>> x + 3

You see the answer of this mathematical expression, 5, appear immediately in the console, proving that your variable was remembered and used.

You can also use the print function to write the results of operations. We’ll use this a lot when practicing and testing code.

>>> print (x + 3) 5

Variables can also represent words, or strings, as they are referred to by programmers. Try typing this in the console:

>>> myTeam = "Nittany Lions" >>> print (myTeam) Nittany Lions

In this example, the quotation marks tell Python that you are declaring a string variable. Python is a powerful language for working with strings. A very simple example of string manipulation is to add, or concatenate, two strings, like this:

>>> string1 = "We are " >>> string2 = "Penn State!" >>> print (string1 + string2) We are Penn State!

You can include a number in a string variable by putting it in quotes, but you must thereafter treat it like a string; you cannot treat it like a number. For example, this results in an error:

>>> myValue = "3" >>> print (myValue + 2)

In these examples, you’ve seen the use of the = sign to assign the value of the variable. You can always reassign the variable. For example:

>>> x = 5 >>> x = x - 2 >>> print (x) 3

When naming your variables, the following tips will help you avoid errors.

- Variable names are case-sensitive. myVariable is a different variable than MyVariable.

- Variable names cannot contain spaces.

- Variable names cannot begin with a number.

- A recommended practice for Python variables is to name the variable beginning with a lower-case letter, then begin each subsequent word with a capital letter. This is sometimes known as camel casing. For example: myVariable, mySecondVariable, roadsTable, bufferField1, etc.

- Variables cannot be any of the special Python reserved words such as "import" or "print."

Make variable names meaningful so that others can easily read your code. This will also help you read your code and avoid making mistakes.

You’ll get plenty of experience working with variables throughout this course and will learn more in future lessons.

Readings

Read Zandbergen section 4.5 on variables and naming.

- Chapter 4 covers the basics of Python syntax, loops, strings and other things which we will look at in more detail in Lesson 2, but feel free to read ahead a little now if you have time or come back to it at the end of Lesson 1 to prepare for Lesson 2.

- You'll note that the section on variable naming refers to a Python style guide, which recommends against the use of camel casing. As the text notes, camel casing is a popular naming scheme for a lot of developers, and you'll see it used throughout our course materials. If you're a novice developer working in an environment with other developers, we recommend that you find out how variable naming is done by your colleagues and get in the habit of following their lead.

1.5.2 Objects and object-oriented programming

The number and string variables that we worked with above represent data types that are built into Python. Variables can also represent other things, such as GIS datasets, tables, rows, and the geoprocessor that we saw earlier that can run tools. All of these things are objects that you use when you work with ArcGIS in Python.

In Python, everything is an object. All objects have:

- a unique ID, or location in the computer’s memory;

- a set of properties that describe the object;

- a set of methods, or things, that the object can do.

One way to understand objects is to compare performing an operation in a procedural language (like FORTRAN) to performing the same operation in an object-oriented language. We'll pretend that we are writing a program to make a peanut butter and jelly sandwich. If we were to write the program in a procedural language, it would flow something like this:

- Go to the refrigerator and get the jelly and bread.

- Go to the cupboard and get the peanut butter.

- Take out two slices of bread.

- Open the jars.

- Get a knife.

- Put some peanut butter on the knife.

- etc.

- etc.

If we were to write the program in an object-oriented language, it might look like this:

- mySandwich = Sandwich.Make

- mySandwich.Bread = Wheat

- mySandwich.Add(PeanutButter)

- mySandwich.Add(Jelly)

In the object-oriented example, the bulk of the steps have been eliminated. The sandwich object "knows how" to build itself, given just a few pieces of information. This is an important feature of object-oriented languages known as encapsulation.

Notice that you can define the properties of the sandwich (like the bread type) and perform methods (remember that these are actions) on the sandwich, such as adding the peanut butter and jelly.

1.5.3 Classes

The reason it’s so easy to "make a sandwich" in an object-oriented language is that some programmer, somewhere, already did the work to define what a sandwich is and what you can do with it. He or she did this using a class. A class defines how to create an object, the properties and methods available to that object, how the properties are set and used, and what each method does.

A class may be thought of as a blueprint for creating objects. The blueprint determines what properties and methods an object of that class will have. A common analogy is that of a car factory. A car factory produces thousands of cars of the same model that are all built on the same basic blueprint. In the same way, a class produces objects that have the same predefined properties and methods.

In Python, classes are grouped together into modules. You import modules into your code to tell your program what objects you’ll be working with. You can write modules yourself, but most likely you'll bring them in from other parties or software packages. For example, the first line of most scripts you write in this course will be:

import arcpy

Here, you're using the import keyword to tell your script that you’ll be working with the arcpy module, which is provided as part of ArcGIS. After importing this module, you can create objects that leverage ArcGIS in your scripts.

Other modules that you may import in this course are os (allows you to work with the operating system), random (allows for generation of random numbers), csv (allows for reading and writing of spreadsheet files in comma-separated value format), and math (allows you to work with advanced math operations). These modules are included with Python, but they aren't imported by default. A best practice for keeping your scripts fast is to import only the modules that you need for that particular script. For example, although it might not cause any errors in your script, you wouldn't include import arcpy in a script not requiring any ArcGIS functions.

Readings

Read Zandbergen section 5.9 (Classes) for more information about classes.

1.5.4 Inheritance

Another important feature of object-oriented languages is inheritance. Classes are arranged in a hierarchical relationship, such that each class inherits its properties and methods from the class above it in the hierarchy (its parent class or superclass). A class also passes along its properties and methods to the class below it (its child class or subclass). A real-world analogy involves the classification of animal species. As a species, we have many characteristics that are unique to humans. However, we also inherit many characteristics from classes higher in the class hierarchy. We have some characteristics as a result of being vertebrates. We have other characteristics as a result of being mammals. To illustrate the point, think of the ability of humans to run. Our bodies respond to our command to run not because we belong to the "human" class, but because we inherit that trait from some class higher in the class hierarchy.

Back in the programming context, the lesson to be learned is that it pays to know where a class fits into the class hierarchy. Without that piece of information, you will be unaware of all of the operations available to you. This information about inheritance can often be found in informational posters called object model diagrams.

Here's an example of an object model diagram for the ArcGIS Python Geoprocessor at 10.x [12]. Take a look at the green(ish) box titled FeatureClass Properties and notice at the middle column, second from the top, it says Dataset Properties. This is because FeatureClass inherits all properties from Dataset. Therefore, any properties on a Dataset object, such as Extent or SpatialReference, can also be obtained if you create a FeatureClass object. Apart from all the properties it inherits from Dataset, the FeatureClass has its own specialized properties such as FeatureType and ShapeType (in the top box in the left column).

1.5.5 Python syntax

Every programming language has rules about capitalization, white space, how to set apart lines of code and procedures, and so on. Here are some basic syntax rules to remember for Python:

- Python is case-sensitive, both in variable names and reserved words. That means it’s important whether you use upper or lower-case. The all lower-case "print" is a reserved word in Python that will print a value, while "Print" is unrecognized by Python and will return an error. Likewise, arcpy is very sensitive about case and will return an error if you try to run a tool without capitalizing the tool name.

- You end a Python statement by pressing Enter and literally beginning a new line. (In some other languages, a special character, such as a semicolon, denotes the end of a statement.) It’s okay to add empty lines to divide your code into logical sections.

- If you have a long statement that you want to display on multiple lines for readability, you need to use a line continuation character, which in Python is a backslash (\).

- Indentation is required in Python to logically group together certain lines, or blocks, of code. You should indent your code four spaces inside loops, if/then statements, and try/except statements. In most programming languages, developers are encouraged to use indentation to logically group together blocks of code; however, in Python, indentation of these language constructs is not only encouraged, but required. Though this requirement may seem burdensome, it does result in greater readability.

- You can add a comment to your code by beginning the line with a # character. Comments are lines that you include to explain what the code is doing. Comments are ignored by Python when it runs the script, so you can add them at any place in your code without worrying about their effect. Comments help others who may have to work with your code in the future, and they may even help you remember what the code does. The intended audience for comments is developers.

- You can add documentation to your code by surrounding a block of text with """ (3 consecutive double quotes) on either side). As with the # character, text surrounded by """ is ignored when Python runs the script. Unlike comments, which are sprinkled throughout scripts, documentation strings are generally found at the beginning of scripts. Their intended audience is end users of the script (non-developers).

1.6.1 Introductory Python examples

Let’s look at a few example scripts to see how these rules are applied. The first example script is accompanied with a walkthrough video that explains what happens in each line of the code. You can also review the main points about each script after reading the code.

1.6.2 Example: Printing the spatial reference of a feature class

This first example script reports the spatial reference (coordinate system) of a feature class stored in a geodatabase. If you want to use the USA.gdb referenced in this example, you can run the code [13] yourself.

1 2 3 4 5 6 7 8 9 10 11 12 | # Opens a feature class from a geodatabase and prints the spatial referenceimport arcpyfeatureClass = "C:/Data/USA/USA.gdb/Boundaries"# Describe the feature class and get its spatial reference desc = arcpy.Describe(featureClass)spatialRef = desc.spatialReference# Print the spatial reference nameprint (spatialRef.Name) |

This may look intimidating at first, so let’s go through what’s happening in this script, line by line. Watch this video (5:54) to get a visual walkthrough of the code.

Video: Walk-Through of First Python Script (5:33)

Again, notice that:

- a comment begins the script to explain what’s going to happen.

- case sensitivity is applied in the code. "import" is all lower-case. So is "print". The module name "arcpy" is always referred to as "arcpy," not "ARCPY" or "Arcpy". Similarly, "Describe" is capitalized in arcpy.Describe.

- The variable names featureClass, desc, and spatialRef that the programmer assigned are short, but intuitive. By looking at the variable name, you can quickly guess what it represents.

- The script creates objects and uses a combination of properties and methods on those objects to get the job done. That’s how object-oriented programming works.

Trying the example for yourself

The best way to get familiar with a new programming language is to look at example code and practice with it yourself. See if you can modify the script above to report the spatial reference of a feature class on your computer. In my example, the feature class is in a file geodatabase; you’ll need to modify the structure of the featureClass path if you are using a shapefile (for example, you'll put .shp at the end of the file name, and you won't have .gdb in your path).

Follow this pattern to try the example:

- Open PyScripter and click File > New.

- Paste the code above into the new script file and modify it to fit your data (change the path).

- Save your script as a .py file.

- Click the Run button to run the script. Look at the IPython console to see the output from the print keyword. The print keyword does not actually cause a hard copy to be printed! Note that the script will take several seconds to run the first time because of the need to import the arcpy module and its dependencies. Subsequent runs of arcpy-dependent scripts during your current PyScripter session will not suffer from this lag.

Readings

We'll take a short break and do some reading from another source. If you are new to Python scripting, it can be helpful to see the concepts from another point of view.

Read parts of Zandbergen chapters 4 & 5. This will be a valuable introduction to Python in ArcGIS, on how to work with tools and toolboxes (very useful for Project 1), and also on some concepts which we'll revisit later in Lesson 2 (don't worry if the bits we skip over seem daunting - we'll explain those in Lesson 2).

- Chapter 4 deals with the fundamentals of Python. We will need a few of these to get started, and we'll revisit this chapter in Lesson 2. For now, read sections 4.1-4.7, reviewing 4.5, which you read earlier.

- Chapter 5 talks about working with arcpy and functions - read sections 5.1-5.2 and 5.4-5.7.

1.6.3 Example: Performing map algebra on a raster

Here’s another simple script that finds all cells over 3500 meters in an elevation raster and makes a new raster that codes all those cells as 1. Remaining values in the new raster are coded as 0. This type of “map algebra” operation is common in site selection and other GIS scenarios.

Something you may not recognize below is the expression Raster(inRaster). This function just tells ArcGIS that it needs to treat your inRaster variable as a raster dataset so that you can perform map algebra on it. If you didn't do this, the script would treat inRaster as just a literal string of characters (the path) instead of a raster dataset.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 | # This script uses map algebra to find values in an# elevation raster greater than 3500 (meters).import arcpyfrom arcpy.sa import *# Specify the input rasterinRaster = "C:/Data/Elevation/foxlake"cutoffElevation = 3500# Check out the Spatial Analyst extensionarcpy.CheckOutExtension("Spatial")# Make a map algebra expression and save the resulting rasteroutRaster = Raster(inRaster) > cutoffElevationoutRaster.save("C:/Data/Elevation/foxlake_hi_10")# Check in the Spatial Analyst extension now that you're donearcpy.CheckInExtension("Spatial") |

Begin by examining this script and trying to figure out as much as you can based on what you remember from the previous scripts you’ve seen.

The main points to remember on this script are: