Lesson 3: The Framework

The links below provide an outline of the material for this lesson. Be sure to carefully read through the entire lesson before returning to Canvas to submit your assignments.

Lesson 3 Overview

Overview

The Department of Defense found nearly 120 different types of positioning systems in place in the 1970s. They were all limited in their application and local in their scope. Consolidation was called for. NAVSTAR GPS (NAVigation System with Timing And Ranging, Global Positioning System) was proposed. The new system was built on the strengths and avoided the weaknesses of its forerunners.

In this lesson, we will take a look at the earlier systems and their technological contributions toward the development of GPS. This is not history for its own sake. It is an effort to explain the reasons behind the functioning of the GPS system today, built as it is on lessons learned from experience.

Objectives

At the successful completion of this lesson, students should be able to:

- discuss technological forerunners of GPS;

- recognize terrestrial radio positioning, optical systems, and extraterrestrial radio positioning;

- explain the role of TRANSIT in GPS development;

- explain NAVSTAR;

- describe GPS Segment Organization; and

- differentiate between the roles of the Space Segment, the Control Segment, and the User Segment.

Questions?

If you have any questions now or at any point during this week, please feel free to post them to the Lesson 3 Discussion Forum. (To access the forum, return to Canvas and navigate to the Lesson 3 Discussion Forum in the Lesson 3 module.) While you are there, feel free to post your own responses if you, too, are able to help out a classmate.

Checklist

Lesson 3 is one week in length. (See the Calendar in Canvas for specific due dates.) To finish this lesson, you must complete the activities listed below. You may find it useful to print this page out first so that you can follow along with the directions.

| Step | Activity | Access/Directions |
|---|---|---|
| 1 | Read the lesson Overview and Checklist. | You are in the Lesson 3 online content now. The Overview page is previous to this page, and you are on the Checklist page right now. |
| 2 | Read Chapter 3 in GPS for Land Surveyors. | Text |
| 3 | Read the lecture material for this lesson. | You are currently on the Checklist page. Click on the links at the bottom of the page to continue to the next page, to return to the previous page, or to go to the top of the lesson. You can also navigate the lecture material via the links in the Lessons menu. |
| 4 | Participate in the Discussion. | To participate in the discussion, please go to the Lesson 3 Discussion Forum in Canvas. (That forum can be accessed at any time by going to the GEOG 862 course in Canvas and then looking inside the Lesson 3 module.) |
| 5 | Take the Lesson 3 Quiz. (This quiz will cover Lessons 1, 2, and 3.) | The Lesson 3 Quiz is located in the Lesson 3 module in Canvas. |
| 6 | Read the lesson Summary. | You are in the Lesson 3 online content now. Click on the "Next Page" link to access the Summary. |

Technological Forerunners

In the early 1970s, the Department of Defense (DOD) commissioned a study to define its future positioning needs. That study found nearly 120 different types of positioning systems in place, all limited by their special and localized requirements. They were of various kinds: some were terrestrial, some relied on electromagnetic signals, and some were optical. A plan was made to combine the systems, to integrate them. In general, the idea was always to take from the previous systems the aspects that worked best and to leave behind those that left something to be desired. The study called for consolidation, and NAVSTAR GPS (NAVigation System with Timing And Ranging, Global Positioning System) was proposed. Specifications for the new system were developed to build on the strengths and avoid the weaknesses of its forerunners. What follows is a brief look at the earlier systems and their technological contributions toward the development of GPS.

Terrestrial Radio Positioning

As mentioned, many of the systems were terrestrial, and RADAR (RAdio Detection And Ranging) in its earliest configuration was one of those. During World War II, it was a powerful tool. Long before the satellite era, the developers of RADAR were working out many of the concepts and terms still used in electronic positioning today. For example, the classification of the radio portion of the electromagnetic spectrum by letters, such as the L-band used in naming the GPS carriers, was introduced during World War II to maintain military secrecy about the new technology.

Electromagnetic Spectrum

Above is a chart of a portion of the electromagnetic spectrum. Visible light occupies a very small range in the middle. The microwave region, where the RADAR bands live, lies well outside the visible range.

The 23 cm wavelength that was originally used for search radar was given the L designation because it was long compared to the shorter 10 cm wavelengths introduced later. The L-band wavelengths are 15 to 30 centimeters, so when we say L-band, we are referring to this identification system. The GPS carriers, with wavelengths in the neighborhood of 20 centimeters, fit well within that context. The shorter wavelengths, 8 to 15 centimeters, were called S-band, the S for short. But the Germans used even shorter wavelengths of 1.5 cm. They were called K-band, for kurz, meaning short in German. Wavelengths that were neither long nor short were given the letter C, for compromise, and P-band, for previous, was used to refer to the very first meter-length wavelengths used in RADAR. There is also an X-band, used in fire-control radars and other applications. In any case, the concept of measuring distance with electromagnetic signals (ranging in GPS) had one of its earliest practical applications in RADAR. Since then, there have been several incarnations of the idea.

Shoran station

Shoran (SHOrt RAnge Navigation), a method of electronic ranging using pulsed 300 MHz VHF (very-high-frequency) signals, was designed for bomber navigation, but was later adapted to more benign uses. The system depended on a signal, sent by a mobile transmitter-receiver-indicator unit being returned to it by a fixed transponder. The elapsed time of the round trip was then converted into distances. It was not long before the method was adapted for use in surveying. Using Shoran from 1949 to 1957, Canadian geodesists were able to achieve precisions as high as 1:56,000 on lines of several hundred kilometers. The ability to measure distances of this magnitude was extraordinary in those times. Shoran was used in hydrographic surveys in 1945 by the United States Coast and Geodetic Survey. In 1951, Shoran was used to locate islands off Alaska in the Bering Sea that were beyond positioning by visual means. Also in the early 1950s, the U.S. Air Force created a Shoran measured trilateration net between Florida and Puerto Rico that was continued on to Trinidad and South America. Trilateration is a familiar term now, as it has come up again in GPS—positioning based upon distances. However, it's worthwhile to note that Shoran and the related technologies, Loran and Hiran, are terrestrial based.
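The conversion of a round-trip time into a distance, as described above, can be sketched in a few lines. This is only an illustration under simplifying assumptions: the signal is taken to travel at the vacuum speed of light, atmospheric effects are ignored, and the `transponder_delay_s` parameter is a hypothetical allowance for any fixed turnaround delay at the transponder, not a documented Shoran value.

```python
# Speed of light in vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def two_way_range_m(round_trip_s, transponder_delay_s=0.0):
    """One-way distance implied by a two-way (round-trip) travel time.

    transponder_delay_s is a hypothetical fixed turnaround delay that is
    subtracted before the remaining travel time is halved.
    """
    travel_s = round_trip_s - transponder_delay_s
    if travel_s < 0:
        raise ValueError("round-trip time is shorter than the transponder delay")
    # The signal covers the distance twice, out and back.
    return SPEED_OF_LIGHT_M_PER_S * travel_s / 2.0


# A 2-millisecond round trip corresponds to a line of roughly 300 km.
print(two_way_range_m(0.002))
```

Because the signal travels out and back, a timing error of one microsecond in the round trip shifts the computed one-way distance by about 150 meters, which suggests why precise timing mattered so much in these systems.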

Shoran’s success led to the development of Hiran (high-precision Shoran). Hiran’s pulsed signal was more focused, its amplitude more precise, and its phase measurements more accurate. Hiran was also applied to geodesy. It was used to make the first connection between Africa, Crete, and Rhodes in 1943. But its most spectacular application was the arcs of triangulation joining the North American Datum (1927) with the European Datum (1950) in the early 1950s. By knitting together continental datums, Hiran surveying might be considered to be the first practical step toward positioning on a truly global scale.

Hiran surveying

Hiran surveying was really the first intercontinental system. As you can see in this graphic, it was used to connect datums across the oceans. This had never been done before. It was especially remarkable given that this was a terrestrial system. Up until we started thinking in global terms, there was really no reason to consider that a worldwide foundation of measurement was needed. Today, it is usual to think of the whole planet, and we expect measurements to be consistent around the Earth. However, before intercontinental connections were made, that idea wasn't necessary or taken seriously. So, one of the forerunners of GPS is simply the mindset of thinking in terms of a global datum (reference frame).

Satellites

These and other radio navigation systems proved that ranges derived from accurate timing of electromagnetic radiation were viable. But useful as they were in geodesy and air navigation, they only whetted the appetite for a higher platform. In 1957, the launch of Sputnik, the first earth-orbiting satellite, made that possible.

Some of the benefits of earth-orbiting satellites were immediately apparent. It was clear that the potential coverage was virtually unlimited. But other advantages were less obvious.

Sputnik didn't really do anything but send a pulse that was identifiable by any shortwave enthusiast around the world. Nevertheless, its success led to a great awakening to the possibilities of orbiting artificial satellites. It was obvious that a platform that high, broadcasting electromagnetic signals, would be able to cover the entire planet much more effectively than any terrestrial system, even Hiran, could manage. In fact, there were other aspects of the Sputnik signal we're still using today in GPS.

Satellite advantages

The potential coverage of a satellite system was virtually unlimited, whereas the coverage of a terrestrial radio navigation system is limited by the propagation characteristics of electromagnetic radiation near the ground. To achieve long ranges, the basically spherical shape of the earth favors low frequencies that stay close to the surface. You've undoubtedly heard of the bouncing of radio waves off the ionosphere, for example. One such terrestrial radio navigation system, LORAN-C (LOng-RAnge Navigation-C), was used to determine speeds and positions of receivers up to 3000 km from fixed transmitters. Unfortunately, its frequency had to be in the LF (low-frequency) range, from 90 to 110 kHz. Many nations besides the United States used LORAN, including Japan, Canada, and several European countries. Russia has a similar system called Chayka. In any case, LORAN was phased out in the United States and Canada in 2010, though eLORAN (enhanced LORAN) continues to be considered. These low-frequency systems were used for positioning but were not intended to reach the level of accuracy of GPS.

Omega, another low-frequency hyperbolic radio navigation system, was operated from 1968 to September 30, 1997 by the United States Coast Guard. Omega was used by other countries as well. It was capable of ranges of 9000 km. Its 10- to 14-kHz frequency was so low that it fell within the band of audible frequencies (the range of human hearing is about 20 Hz to 20 kHz). Such low frequencies can be profoundly affected by unpredictable ionospheric disturbances and ground conductivity, making it difficult to model the reduced propagation velocity of a radio signal over land. However, higher frequencies require line of sight.

Line of sight is no problem for earth-orbiting satellites, of course. Signals from space overcome many of the low-frequency limitations, allow the use of a broader range of frequencies, including high frequencies, and are simply more reliable. Using satellites, one could achieve virtually limitless coverage of the entire planet. But the technology for launching transmitters with sophisticated frequency standards into orbit was not developed immediately. Putting such transmitters, frequency standards, oscillators, etc., into orbit was a pretty tall order in 1957 when Sputnik went up. Therefore, some of the earliest extraterrestrial positioning was done with optical systems.

Optical Systems

Some of the earliest extraterrestrial positioning was done with optical systems. Optical tracking of satellites is a logical extension of astronomy. The astronomic determination of a position on the earth’s surface from star observations, certainly the oldest method, is actually very similar to extrapolating the position of a satellite from a photograph of it crossing the night sky. In fact, the astronomical coordinates, right ascension α and declination δ, of such a satellite image are calculated from the background of fixed stars.

Here's a picture of a track of the International Space Station against the so-called fixed stars. Of course, it is possible to determine the position of a satellite in this way because the stars have known coordinates in the right ascension and declination system. And so, if one knows the time and knows the stars in the background, it is quite possible to learn the ephemeris—or the position—of the satellite based upon that control. This is an extension of astronomy that has been used—and to some extent continues to be used—in terms of positioning the satellites. For example, it's worth noting that some of the major remote sensing satellites have star trackers on board that help determine their orientation and position.

Photographic images that combine reflective satellites and fixed stars are taken with ballistic cameras whose chopping shutters open and close very fast. The technique causes both the satellites, illuminated by sunlight or earth-based beacons, and the fixed stars to appear on the plate as a series of dots. Comparative analysis of photographs provides data to calculate the orbit of the satellite. Photographs of the same satellite made by cameras thousands of kilometers apart can thus be used to determine the cameras’ positions by triangulation. The accuracy of such networks has been estimated as high as ±5 meters.

Of course, by implication, if one knows the position of a satellite relative to a sensor on the Earth's surface, then, obviously, it is possible to extrapolate the position of the sensor on the Earth's surface. The result is a positioning system that uses extraterrestrial objects to determine positions on the Earth's surface. The idea of using satellites to get positions on the Earth has a history of many decades.

Other optical systems are much more accurate. One called SLR (Satellite Laser Ranging) is similar to measuring the distance to a satellite using a sophisticated EDM. A laser aimed from the earth to satellites equipped with retroreflectors yields the range. It is instructive that all new GNSS satellites, except GPS, are equipped with onboard corner cube reflectors (also known as Laser Retroreflector Arrays, LRAs) for exactly this purpose. The GPS space vehicles numbered SVN 35 (PRN 05) and SVN 36 (PRN 06) were equipped with LRAs, thereby allowing ground stations to separate the effect of errors attributable to satellite clocks from errors in the satellite’s ephemerides. Neither of them is still in service, but the plan is to have LRAs on all upcoming GPS III satellites.

The same technique, called LLR (Lunar Laser Ranging), is used to measure distances to the moon using corner cube reflector arrays left there during manned missions. There are four available arrays - three of them set during Apollo missions and one during the Soviet Lunokhod 2 mission. These techniques can achieve positions of centimeter precision when information is gathered from several stations. However, one drawback is that the observations must be spread over long periods, up to a month, and they, of course, depend on two-way measurement.

We discussed EDMs earlier, and you know that it is possible to use EDMs as an optical system to determine distances. Satellite Laser Ranging, SLR, can measure distances using an electronic distance measuring device, an EDM, and a laser that points from the Earth to the satellite. The reflectors on the satellite can return that signal to the terrestrial sensor. This is a method of measurement by phase comparison with a reference wave at the EDM. It works well for orbiting satellites. This system is also used for Lunar Laser Ranging (LLR). There are banks of reflecting retro prisms on the lunar surface that were left by the lunar missions, both Russian and American. We can now determine the position of the moon relative to the Earth using electronic distance measuring to centimeter precision. This is useful for many positioning needs.

Optical drawbacks

While some optical methods, like SLR, can achieve extraordinary accuracies, they can at the same time be subject to chronic difficulties. Some methods require skies to be clear at widely spaced sites simultaneously. Even then, the troposphere and the ionosphere can be very uncooperative. And local atmospheric turbulence can cause the images of the satellites to scintillate. The necessity of broadcasting a signal revealing your position, the need to have expensive—and sometimes bulky—equipment and the difficulty of modeling optical refraction are some of the drawbacks to the optical method. Still, it remains a significant part of the satellite management programs of NASA and other agencies.

Extraterrestrial Systems

The earliest American extraterrestrial systems were designed to assist in satellite tracking and satellite orbit determination, not geodesy. Some of the methods used did not find their way into the GPS technology at all. Some early systems relied on the reflection of signals, transmissions from ground stations, that would either bounce off the satellite or stimulate onboard transponders. But systems that required the user to broadcast a signal from the earth to the satellite were not favorably considered in designing the then-new GPS system. Any requirement that the user reveal his position was not attractive to the military planners responsible for developing GPS. They favored a passive system that allowed the user to simply receive the satellite’s signal. So, with their two-way measurements and utilization of several frequencies to resolve the cycle ambiguity, many early extraterrestrial tracking systems were harbingers of the modern EDM technology more than GPS.

But, elsewhere, there were ranging techniques useful to GPS. NASA's first satellite tracking system, Prime Minitrack, relied on phase difference measurements of a single-frequency carrier broadcast by the satellites themselves and received by two separate ground-based antennas. This technique is called interferometry. Interferometry is the measurement of the difference between the phases of signals that originate at a common source but travel different paths to the receivers. The combination of such signals, collected by two separate receivers, invariably finds them out of step (out of phase) since one has traveled a longer distance than the other. Analysis of the signal’s phase difference can yield very accurate ranges, and interferometry has become an indispensable measurement technique in several scientific fields. Interferometry was used in the first commercial GPS receiver, called a Macrometer.
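The relationship between a measured phase difference and the extra distance traveled to the second antenna can be sketched as follows. This is a minimal illustration, not a model of Minitrack itself: the 100 MHz carrier is a hypothetical value, the source is assumed to be far enough away that its wavefronts are flat, and the number of whole cycles (`whole_cycles`) is assumed to be known, which in practice is the hard part.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def path_difference_m(phase_diff_rad, frequency_hz, whole_cycles=0):
    """Extra path length to the second antenna implied by a phase difference.

    A phase measurement only gives the fractional cycle; whole_cycles is the
    (assumed known) integer number of full wavelengths in the difference.
    """
    wavelength_m = SPEED_OF_LIGHT_M_PER_S / frequency_hz
    return (whole_cycles + phase_diff_rad / (2.0 * math.pi)) * wavelength_m


def arrival_angle_rad(path_diff_m, baseline_m):
    """Angle of the incoming signal, measured from the perpendicular to the
    baseline, for a distant source (plane-wave assumption)."""
    ratio = path_diff_m / baseline_m
    if abs(ratio) > 1.0:
        raise ValueError("path difference cannot exceed the baseline length")
    return math.asin(ratio)


# Hypothetical 100 MHz carrier, quarter-cycle phase difference.
extra_m = path_difference_m(math.pi / 2.0, 100e6)
print(extra_m, math.degrees(arrival_angle_rad(extra_m, baseline_m=10.0)))
```

The unknown integer number of wavelengths is the same cycle ambiguity mentioned earlier in connection with systems that used several frequencies to resolve it.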

VLBI

For example, Very Long Baseline Interferometry (VLBI) did not originate in the field of satellite tracking or aircraft navigation, but in radio astronomy. The technique was so successful it is still in use today. Radio telescopes, sometimes on different continents, tape-record the microwave signals from quasars, star-like points of light billions of light-years from earth. A quasar is a very consistent radio source in the sky, and tracking these quasars from radio telescopes can be used to measure extraordinary distances very accurately using interferometry. The technique is used today in the network of control points around the globe for monitoring tectonic movements, and it is one of the technologies at the foundation of the International Terrestrial Reference Frame (ITRF), which we will talk about later.

In any case, these recordings are encoded with time tags controlled by hydrogen masers, the most stable of oscillators (clocks). The tapes are then brought together and played back at a central processor. Cross-correlation of the time tags reveals the difference in the instants of wavefront arrivals at the two telescopes. The discovery of the time offset that maximizes the correlation of the signals recorded by the two telescopes yields the distance and direction between them within a few centimeters, over thousands of kilometers.
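The idea of searching for the time offset that maximizes the correlation of two recordings can be sketched with simulated data. Everything here is an assumption made for illustration: the random noise stands in for a quasar signal, the 37-sample delay and the sampling interval are hypothetical, and a real correlator works on far longer recordings with far finer resolution.

```python
import random

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def best_lag(first, second, max_lag):
    """Return the lag (in samples) at which `second` best matches `first`.

    For each trial lag, multiply first[i] by second[i + lag] over the
    overlapping samples and sum the products; the largest sum wins.
    """
    best, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        overlap = min(len(first), len(second) - lag)
        score = sum(first[i] * second[i + lag] for i in range(overlap))
        if score > best_score:
            best, best_score = lag, score
    return best


# Simulated noise-like source signal (a stand-in for quasar radio noise).
rng = random.Random(42)
source = [rng.gauss(0.0, 1.0) for _ in range(1000)]

TRUE_LAG = 37  # hypothetical delay, in samples, at the second telescope
station_a = source
# Station B records the same signal 37 samples later, plus its own noise.
station_b = [rng.gauss(0.0, 1.0) for _ in range(TRUE_LAG)] + [
    s + rng.gauss(0.0, 0.5) for s in source
]

SAMPLE_INTERVAL_S = 1e-8  # hypothetical sampling interval
lag = best_lag(station_a, station_b, max_lag=100)
delay_s = lag * SAMPLE_INTERVAL_S
extra_path_m = SPEED_OF_LIGHT_M_PER_S * delay_s
print(lag, delay_s, extra_path_m)
```

The sketch recovers only the delay between the two stations for one source. Turning such delays into the length and direction of the baseline requires observations of several sources in different parts of the sky, which is beyond this illustration.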

VLBI’s potential for geodetic measurement was realized as early as 1967. But the concept of high-accuracy baseline determination using phase differencing was really proven in the late 1970s. A direct line of development leads from the VLBI work of that era by a group from the Massachusetts Institute of Technology to today’s most accurate GPS ranging technique, carrier phase measurement. VLBI, along with other extraterrestrial systems like SLR, also provides valuable information on the earth’s gravitational field and rotational axis. It has become possible through extraterrestrial means to combine the datums of different continents, the shape of the Earth and the orientation of the Earth. Without that data, the high accuracy of the modern coordinate systems that are critical to the success of GPS, like the Conventional Terrestrial System (CT), would not be possible. But the foundation for routine satellite-based geodesy actually came even earlier and from a completely different direction. The first prototype satellite of the immediate precursor of the GPS system that was successfully launched reached orbit on June 29, 1961. Its range measurements were based on the Doppler Effect, not phase differencing, and the system came to be known as TRANSIT, or more formally the Navy Navigational Satellite System.

Satellite Positioning

Satellite technology and the Doppler Effect were combined in the first comprehensive earth-orbiting satellite system dedicated to positioning. By tracking Sputnik in 1957, experimenters at Johns Hopkins University’s Applied Physics Laboratory found that the Doppler shift of its signal provided enough information to determine the exact moment of its closest approach to the earth. This discovery led to the creation of the Navy Navigational Satellite System (NNSS or NAVSAT) and the subsequent launch of 6 satellites specifically designed to be used for navigation of military aircraft and ships. This same system, eventually known as TRANSIT, was classified in 1964, declassified in 1967, and was widely used in civilian hydrographic and geodetic surveying for many years until it was switched off on December 31, 1996.

The Doppler shift is characterized by the observation that when the source of a constant signal is approaching, its constituent wavefronts are compressed closer together. As that signal source moves away, the wavefronts are pulled further apart. This is often illustrated by the change in pitch of a train horn. As the train approaches, the pitch seems to rise, and as it moves away, the pitch seems to fall. The same effect is evident in the changes in frequency of the signal from a satellite as it passes. As the satellite approaches the observer, the wavefronts are pushed together, and as it moves away, they are pulled further apart. The rate at which this happens can be analyzed to determine the position of the observer in a passive way.
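The Doppler relationship described above can be put into numbers with a short sketch. It uses the common low-speed approximation, in which the shift is proportional to the rate of change of the range, and it locates the moment of closest approach as the instant the shift passes through zero. The range rates in the example are hypothetical values chosen for illustration, not TRANSIT data.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def doppler_shift_hz(transmit_hz, range_rate_m_per_s):
    """Approximate Doppler shift for speeds much smaller than light.

    range_rate is positive when the source is moving away (range growing),
    so a receding source gives a negative shift (lower received frequency).
    """
    return -transmit_hz * range_rate_m_per_s / SPEED_OF_LIGHT_M_PER_S


def closest_approach_time_s(times_s, shifts_hz):
    """Interpolate the time at which the shift passes from positive to
    non-positive, which marks the moment of closest approach."""
    for k in range(len(times_s) - 1):
        s0, s1 = shifts_hz[k], shifts_hz[k + 1]
        if s0 > 0 >= s1:
            t0, t1 = times_s[k], times_s[k + 1]
            return t0 + (t1 - t0) * s0 / (s0 - s1)
    return None  # no zero crossing in the data


# Hypothetical pass: the satellite approaches, then recedes.
times = [0.0, 60.0, 120.0, 180.0]
range_rates = [-4000.0, -1000.0, 1000.0, 4000.0]  # m/s, illustrative only
shifts = [doppler_shift_hz(400e6, v) for v in range_rates]
print(shifts, closest_approach_time_s(times, shifts))
```

The received frequency is higher than the transmitted one while the range is shrinking and lower once it starts to grow, so the zero crossing falls between the second and third samples of this made-up pass.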

TRANSIT shortcomings

The TRANSIT system had some nagging drawbacks. For example, its primary observable was based on the comparison of the nominally constant frequency generated in the receiver with the Doppler-shifted signal received from 1 satellite at a time. With a constellation of only 6 satellites, this strategy sometimes left the observer waiting up to 90 minutes between satellites, and at least two passes were usually required for acceptable accuracy. At only about 1100 km above the earth, the TRANSIT satellites’ orbits were quite low and, therefore, unusually susceptible to atmospheric drag and gravitational variations, making the calculation of accurate orbital parameters particularly difficult. Through the decades of the 1970s and 1980s, both the best and the worst aspects of the forerunner system informed GPS development.

In the end many strategies used in TRANSIT were incorporated into GPS. For example, in the TRANSIT system, the satellites broadcast their own ephemerides to the receivers and the receivers had their own frequency standards. TRANSIT had three segments: the control segment, including the tracking and upload facilities; the space segment, meaning the satellites themselves; and the user segment, everyone with receivers. The TRANSIT system satellites broadcast two frequencies of 400 MHz and 150 MHz to allow compensation for the ionospheric dispersion. TRANSIT’s primary observable was based on the Doppler Effect. All were used in GPS.
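The reason two frequencies help with the ionosphere can be sketched numerically. The sketch relies on an assumption not spelled out above: to first order, the ionospheric effect on a measurement scales with one over the frequency squared, so two measurements on different frequencies can be combined to cancel it. The function name, the 1,000,000 m "true" value, and the constant `K` are hypothetical, and the example is written in terms of ranges for simplicity even though TRANSIT's observable was Doppler-based.

```python
def ionosphere_free(obs_f1, obs_f2, f1_hz, f2_hz):
    """Combine two observations so that a term of the form K / f**2 cancels.

    Assumes each observation equals the true value plus K / f**2, with the
    same (unknown) K on both frequencies. The sign of K does not matter.
    """
    gamma = (f1_hz / f2_hz) ** 2
    return (gamma * obs_f1 - obs_f2) / (gamma - 1.0)


# Illustrative numbers only.
F1_HZ, F2_HZ = 400e6, 150e6        # the two TRANSIT frequencies
TRUE_VALUE_M = 1_000_000.0         # hypothetical true range
K = 500.0 * F2_HZ ** 2             # chosen so the 150 MHz error is 500 m

obs_400 = TRUE_VALUE_M + K / F1_HZ ** 2   # smaller error on the higher frequency
obs_150 = TRUE_VALUE_M + K / F2_HZ ** 2   # larger error on the lower frequency

print(obs_400 - TRUE_VALUE_M, obs_150 - TRUE_VALUE_M)
print(ionosphere_free(obs_400, obs_150, F1_HZ, F2_HZ))
```

In this made-up case the error on the lower frequency is about seven times larger than on the higher one, which also hints at why a higher carrier frequency reduces the ionospheric effect in the first place.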

Perhaps one of the most significant advantages of the TRANSIT system over previous extraterrestrial systems was TRANSIT’s capability of linking national and international datums with relative ease. Its facility at strengthening geodetic coordinates laid the groundwork for modern geocentric datums. In 1963, at about the same time the Navy was using TRANSIT, the Air Force funded the development of a three-dimensional radio navigation system for aircraft. It was called System 621B. The fact that it provided a determination of the third dimension, altitude, was an improvement over some previous navigation systems. It relied on the user measuring ranges to satellites based on the time of arrival of the transmitted radio signals. With the instantaneous positions of the satellites known, the user’s position could be derived. The 621B program also utilized carefully designed binary codes known as PRN (pseudorandom noise) codes. Even though the PRN codes appeared at first to be noise, they were actually capable of repetition and replication. This approach also allowed all of the satellites to broadcast on the same frequency. Sounds rather familiar now, doesn’t it? Unfortunately, System 621B required signals from the ground to operate.
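The two properties of PRN codes mentioned above, looking like noise yet being exactly repeatable, can be illustrated with a toy generator. This sketch uses a 5-stage linear-feedback shift register, a standard textbook way to produce such sequences; the register length, tap positions, and seed are choices made here for illustration and are not the codes used by System 621B or GPS.

```python
def lfsr_sequence(seed_bits, taps, length):
    """Generate `length` bits from a Fibonacci linear-feedback shift register.

    seed_bits: starting contents of the register (must not be all zeros).
    taps: 1-based stage numbers whose bits are XORed to form the feedback.
    """
    state = list(seed_bits)
    out = []
    for _ in range(length):
        out.append(state[-1])            # output the last stage
        feedback = 0
        for t in taps:
            feedback ^= state[t - 1]
        state = [feedback] + state[:-1]  # shift, feeding the new bit into stage 1
    return out


def circular_correlation(a, b, shift):
    """Agreements minus disagreements between a and b rotated by `shift`."""
    n = len(a)
    return sum(1 if a[i] == b[(i + shift) % n] else -1 for i in range(n))


# A 5-stage register with taps on stages 2 and 5 repeats every 31 bits.
bits = lfsr_sequence([1, 0, 0, 0, 0], taps=(2, 5), length=62)
code = bits[:31]

print(code == bits[31:])                  # the code repeats exactly
print(circular_correlation(code, code, 0))  # perfect alignment
print([circular_correlation(code, code, s) for s in range(1, 6)])  # misaligned
```

A receiver that generates the same code locally sees a sharp correlation peak only when its replica is aligned with the incoming code, which is what makes it possible to time the arrival of a signal that otherwise looks like noise.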

Another project, this one from the Navy, had a name that conflated time and navigation: Timation. It began in 1964. The Timation 1 satellite was launched in 1967; it was followed by Timation 2 in 1969. Both of these satellites were equipped with high-performance quartz crystal oscillators, also known as XOs. The daily error of these clocks was about 1 microsecond, which translates to about 300 m of ranging error. The technique they used to transmit ranging signals was called side-tone ranging. There was a great improvement in the clocks of Timation 3, which was launched in 1974. It was the first satellite outfitted with two rubidium clocks. Being able to have space-worthy Atomic Frequency Standards (AFS) on orbit was a big step toward accurate satellite positioning, navigation, and timing (PNT). With this development, the Timation program demonstrated that a passive system using atomic clocks could facilitate worldwide navigation. The terms clock, oscillator, and frequency standard will be used interchangeably here.
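The step from a clock error of 1 microsecond to a ranging error of about 300 m is simply the distance a radio signal travels in that time. A minimal sketch, assuming the vacuum speed of light; the additional clock errors in the loop are illustrative values only.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_error_m(clock_error_s):
    """Ranging error caused by a timing error: distance = speed x time."""
    return SPEED_OF_LIGHT_M_PER_S * clock_error_s


# 1 microsecond is the daily error quoted for the quartz oscillators;
# the smaller values are included only for comparison.
for clock_error_s in (1e-6, 1e-7, 1e-9):
    print(clock_error_s, range_error_m(clock_error_s))
```

The comparison shows why better clocks mattered so much: each factor of ten gained in timing translates directly into a factor of ten in ranging.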

It was the combination of atomic clock technology, the ephemeris system from TRANSIT, and the PRN signal design from the 621B program in 1973 that eventually became GPS. There was a Department of Defense directive to the United States Air Force in April 1973 that stipulated the consolidation of Timation and 621B into the navigation system called GPS. In fact, Timation 3 became part of the NAVSTAR GPS program and was renamed Navigation Technology Satellite 1 (NTS-1). The next satellite in line, Timation 4, was known as NTS-2. Its onboard cesium clock had a frequency stability of 2 parts in 10^13. It was launched in 1977. Unfortunately, it only operated for 8 months.

NAVSTAR GPS

GPS improved on some of the shortcomings of the previous systems. For example, the GPS satellites were placed in nearly circular orbits over 20,000 km above the earth, where the consequences of gravity and atmospheric drag are much less severe than in the lower orbits used by TRANSIT, Timation, and some of the other earlier systems. GPS satellites broadcast higher-frequency signals, which reduce the ionospheric delay. The rubidium and cesium clocks pioneered in the Timation program and built into GPS satellites were a marked improvement over the quartz oscillators that were used in TRANSIT and other early satellite navigation systems. System 621B could achieve positional accuracies of approximately 16 meters. TRANSIT’s shortcomings restricted the practical accuracy of the system, too. It could achieve submeter work, but only after long occupations on a station (at least a day) augmented by the use of precise ephemerides for the satellites in post-processing. GPS provides much more accurate positions in a much shorter time than any of its predecessors, but these improvements were only accomplished by standing on the shoulders of the technologies that have gone before.

Requirements

The genesis of GPS was military. It grew out of the congressional mandate issued to the Departments of Defense and Transportation to consolidate the myriad of navigation systems. Its application to civilian surveying was not part of the original design. In 1973, the DOD directed the Joint Program Office (JPO) in Los Angeles to establish the GPS system. Specifically, JPO was asked to create a system with high accuracy and continuous availability in real time that could provide virtually instantaneous positions to both stationary and moving receivers in three dimensions. The forerunners could not supply all these features. The challenge was to bring them all together in one system.

Secure, Passive, and Global

Worldwide coverage and positioning on a common coordinate grid were required of the new system—a combination that had been difficult, if not impossible, with terrestrial-based systems. It was to be a passive system, which ruled out any transmissions from the users, as had been tried with some previous satellite systems. The user could not be required to reveal their position when they used the system, so obviously broadcasting a signal was out of the question. The signal was also to be secure and resistant to jamming, so codes in the satellite’s broadcasts would need to be complex and the GPS carriers spread-spectrum (wide).

Spread Spectrum Signal

The specification for the GPS system required all-weather performance and correction for ionospheric propagation delay. TRANSIT had shown what could be accomplished with a dual-frequency transmission from the satellites, but it had also proved that a higher frequency was needed. The GPS signal needed to be secure and resistant to both jamming and multipath. A spread spectrum, meaning spreading the frequency over a band wider than the minimum required for the information it carries, helped on all counts. This wider band also provided ample space for pseudorandom noise encoding, a fairly new development at the time. The PRN codes like those used in System 621B allowed the GPS receiver to acquire signals from several satellites simultaneously and still distinguish them from one another.

Expense and Frequency Allocation

The U.S. Department of Defense (DOD) also wanted the new system to be free from the sort of ambiguity problems that had plagued OMEGA and other radio navigation systems. And DOD did not want the new system to require large, expensive equipment like the optical systems. Finally, frequency allocation was a consideration. The replacement of existing systems would take time, and with so many demands on the available radio spectrum, it was going to be hard to find frequencies for GPS.

Large Capacity Signal

Not only did the specifications for GPS evolve from the experience with earlier positioning systems, so did much of the knowledge needed to satisfy them. Providing 24-hour real-time, high-accuracy navigation for moving vehicles in three dimensions was a tall order. Experience showed part of the answer was a signal that was capable of carrying a large amount of information efficiently and that required a large bandwidth, especially since the system needed to be passive. So, the GPS signal was given a double-sided 10-MHz bandwidth. But that was still not enough, so the idea of simultaneous observation of several satellites was also incorporated into the GPS system to accommodate the requirement. Unlike some of its predecessors, GPS needed to have not one, but at least four satellites above an observer's horizon for adequate positioning. Even more, if possible. And the achievement of full-time worldwide GPS coverage would require this condition be satisfied at all times, anywhere on or near the earth. That decision had far-reaching implications.

The Perfect System?

The absolute ideal navigational system, from the military’s point of view, was described in the Army POS/NAV Master Plan in 1990. It should have worldwide and continuous coverage. The users should be passive. In other words, they should not be required to emit detectable electronic signals to use the system. The ideal system should be capable of being denied to an enemy, and it should be able to support an unlimited number of users. It should be resistant to countermeasures and work in real time. It should be applicable to joint and combined operations. There should be no frequency allocation problems. It should be capable of working on common grids or map datums appropriate for all users. The positional accuracy should not be degraded by changes in altitude or by the time of day or year. Operating personnel should be capable of maintaining the system. It should not be dependent on externally generated signals, and it should not have decreasing accuracy over time or the distance traveled. Finally, it should not be dependent on the identification of precise locations to initiate or update the system. A pretty tall order; GPS lives up to most, though not all, of the specifications.

The objective, of course, was to build the perfect system. And whether or not that's been achieved, there are several of the specifications that have been met rather well with GPS. It's a passive system; there's no need to broadcast any signals. It can be denied, that is, turned off, when the Department of Defense so desires. It's resistant to countermeasures such as spoofing, a term for the idea that a bad guy could broadcast a signal that appears to be a GPS signal to try to confuse the receivers.

It works in real time. It should be applicable to joint and combined operations, militarily speaking. And it should be able to work on common grids that are appropriate for all users. This is very true. One of the things that GPS is very good at is taking positions in its native format, Earth-Centered, Earth-Fixed XYZ coordinates, and transforming them, using the microprocessor in the receiver, to virtually any well-defined coordinate system. The positional accuracy should not be degraded by changes in altitude or by the time of day or year. Yes, GPS works well at the top of the mountain and down in the valley, except for multipath to some degree. And operating personnel should be capable of maintaining the system. It should not be dependent on externally generated signals. Of course, that's true; the signals come directly from the satellites. It should not have decreasing accuracy over time and distance traveled. GPS has been extraordinarily effective at measuring very long baselines. It's all a pretty tall order, but GPS has lived up to most of it.

GPS

GPS IN CIVILIAN SURVEYING

As mentioned earlier, application to civilian surveying was not part of the original concept of GPS. The civilian use of GPS grew up through partnerships between various public, private, and academic organizations. Nevertheless, while the military was still testing its first receivers, GPS was already in use by civilians. Geodetic surveys were actually underway with commercially available receivers early in the 1980s.

Federal Specifications

The Federal Radionavigation Plan of 1984, a biennial document including official policies regarding radionavigation, set the predictable and repeatable accuracy of civil and commercial use of GPS at 100 meters horizontally and 156 meters vertically. This specification meant that the C/A code ranging for Standard Positioning Service could be defined by a horizontal circle with a radius of 100 meters, 95% of the time. GPS was decidedly a Defense Department system. However, it became apparent early on that civilian receivers were doing much better than 100 meters horizontally. Selective Availability, a dithering of the clocks in the GPS satellites, was instituted. When the clocks in the satellites (the oscillators) don't keep their rate to a steady standard, the positioning derived from them suffers. In this way, Selective Availability intentionally degraded the accuracy available to civilian C/A code users to 100 meters horizontally for quite a long time.

Selective Availability was switched off May 2, 2000. It is not a consideration at the moment, but it could be switched back on. I must mention that since surveyors traditionally worked with the carrier phase observable to achieve high accuracy, the dithering of the pseudorange codes that was characteristic of SA didn't affect survey positioning. However, for those who used pseudorange (code-phase) receivers primarily, Selective Availability was a problem. Since it has been switched off, of course, the accuracy of those sorts of receivers has improved markedly.

Interferometry

By using interferometry, the technique that had worked so well with Prime Minitrack and VLBI, civilian users were showing that GPS surveying was capable of extraordinary results. In the summer of 1982, a research group at the Massachusetts Institute of Technology (MIT) tested an early GPS receiver and achieved accuracies of 1 to 2 ppm of the station separation. Over a period of several years, extensive testing that confirmed and improved on these results was conducted around the world. In 1984, a GPS network was produced to control the construction of the Stanford Linear Accelerator. This GPS network provided accuracy at the millimeter level. In other words, by using the differentially corrected carrier phase observable instead of code ranging, private firms and researchers were going far beyond the accuracies the U.S. Government expected to be available to civilian users of GPS.

The interferometric solutions made possible by computerized processing developed with earlier extraterrestrial systems were applied to GPS by the first commercial users. The combination made the accuracy of GPS its most impressive characteristic, but it hardly solved every problem. For many years, the system was restricted by the shortage of satellites as the constellation slowly grew. The necessity of having four satellites above the horizon restricted the available observation sessions to a few, sometimes inconvenient, windows of time. Another drawback of GPS for the civilian user was the cost and the limited application of both the hardware and the software. GPS was too expensive and too inconvenient for everyday use, but the accuracy of GPS surveying was already extraordinary in the beginning.

The photo is of an old friend of mine. This was the first commercial GPS receiver, the Macrometer. It was an interferometric receiver. You see here the receiver itself on the floor next to the tripod. It was rather heavy. It took two people to carry it. We transported them in utility vehicles adapted for the purpose. Each Macrometer observed the GPS constellation simultaneously with the other Macrometers. This is the concept we discussed as resulting in relative positioning. Relative positioning requires receivers operating simultaneously. With the Macrometer, unlike current GPS receivers, they had to be brought together and their clocks synced. In other words, the several receivers were cabled together, and their oscillators synced with each other. This had to be done to discover the receiver clock drift that occurred during the mission. It was necessary because the Macrometer was a codeless receiver. It did not have access to the Navigation Message. That meant that the broadcast clock correction and all of the other bias mitigating information in the Navigation Message were unavailable to this particular receiver. All of that had to be done in the post-processing phase.

The antenna of the Macrometer was a meter square of aluminum. You can see the plexiglass dome with a dipole antenna on the underneath of it. And of course, the antenna was cabled to the receiver. You can see the black tape drives on the top of the receiver. We used tapes to record the observations. The system ran on 110 volts. So, we had to carry generators with us. It was quite a beefy system to carry around and quite expensive. Nevertheless, it worked rather well. It was possible to achieve one to two parts per million of the station separation, which was much more accurate than the 100-meter spec we were talking about earlier. This was possible because the system utilized the carrier phase—aka the carrier beat phase—observable. GPS achieved extraordinary accuracy from the very beginning, from the very first commercial GPS receiver. I was proud to be part of it.

Civil Applications of GPS

Today, with a mask angle of 10°, there are some periods when 15 or more GNSS satellites are above the horizon. And GPS receivers have grown from only a handful to the huge variety of receivers available today. Some push the envelope to achieve ever-higher accuracy; others offer less sophistication and lower cost. The civilian user’s options are broader with GPS than any previous satellite positioning system—so broad that, as originally planned, GPS will likely replace more of its predecessors in both the military and civilian arenas. In fact, GPS has developed into a system that has more civilian applications and users than military ones. But the extraordinary range of GPS equipment and software requires the user to be familiar with an ever-expanding body of knowledge, including the Global Navigation Satellite System (GNSS). GNSS includes both GPS and satellite navigation systems built by other nations and will present new options for users. However, the three segments of GPS will be presented before elaborating on GNSS.

Continuing on with the civilian point of view, which, of course, is our primary focus; today, with a mask angle of 10 degrees, it's possible to have up to 10 satellites above the horizon. Of course, when we first started, we were very happy when we were able to have four. So, it's obvious that as time goes on, the cost of the receivers keeps going down and their sophistication going up. And GPS, clearly, has been replacing its predecessors in both the military and civilian arenas. In fact, GPS has developed into a system that has more civilian applications and users than military ones. It's really a remarkable story of how rapidly the system has realized its potential.

The Space Segment

Though there has been some evolution in the arrangement, the current GPS constellation under full operational capability consists of 24 satellites. However, there are more satellites than that in orbit and broadcasting at any given time, and the constellation includes several orbital spares.

As the primary satellites aged and their failure was possible, spares were launched. One reason for the arrangement was to maintain the 24 satellite constellation without interruption.

It was also done to ensure that it is possible to keep four satellites, one in each of the four slots in the six orbital planes. Each of these planes is inclined to the equator by 55° in a symmetrical, uniform arrangement. Such a uniform design does cover the globe completely, even though the coverage is not quite as robust at high latitudes as it is at midlatitudes. The uniform design also means that multiple satellite coverage is available even if a few satellites were to fail. The satellites routinely outlast their anticipated design lives, but they are eventually worn out.

Orbital Period

NAVSTAR satellites are more than 20,000 km above the earth in a posigrade orbit. A posigrade orbit is one that moves in the same direction as the earth’s rotation.

The Space Segment includes the satellites themselves. Since each satellite is nearly three times the earth’s radius above the surface, its orbital period is 12 sidereal hours. The sidereal (star time) timescale is different from Mean Solar Time (the normal timescale on which we operate) and different from UTC.
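
The link between orbital height and the 12-sidereal-hour period follows from Kepler's third law. The short Python sketch below works backward from the period to the altitude it implies. The constants (Earth's gravitational parameter, the WGS 84 equatorial radius, and the length of the sidereal day) are standard published values rather than figures from this lesson, and the circular-orbit simplification and the function name are assumptions made for illustration.

```python
import math

MU_EARTH = 3.986004418e14    # m^3/s^2, Earth's gravitational parameter (assumed standard value)
EARTH_RADIUS_M = 6378137.0   # m, WGS 84 equatorial radius (assumed standard value)
SIDEREAL_DAY_S = 86164.0905  # s, one sidereal day expressed in solar seconds (assumed)


def altitude_for_period(period_s):
    """Altitude (m) of a circular orbit with the given period, from Kepler's third law."""
    semi_major_axis = (MU_EARTH * (period_s / (2.0 * math.pi)) ** 2) ** (1.0 / 3.0)
    return semi_major_axis - EARTH_RADIUS_M


half_sidereal_day = SIDEREAL_DAY_S / 2.0   # 12 sidereal hours
altitude_km = altitude_for_period(half_sidereal_day) / 1000.0

print(f"Altitude for a 12-sidereal-hour orbit: {altitude_km:.0f} km")
print(f"Altitude in Earth radii: {altitude_km * 1000.0 / EARTH_RADIUS_M:.2f}")
```

Under these assumptions, the altitude comes out a little above 20,000 km, which is consistent with the figure quoted for the NAVSTAR satellites.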

4-minute difference

When an observer actually performs a GPS survey project, one of the most noticeable aspects of a satellite’s motion is that it returns to the same position in the sky about 4 minutes (3 minutes and 56 seconds) earlier each day in our usual Mean Solar Time. This apparent regression is attributable to the difference between 24 solar hours and 24 sidereal hours. This rather esoteric fact has practical applications; for example, if the satellites are in a particularly favorable configuration for measurement, the observer may wish to take advantage of the same arrangement the following day. However, he or she would be well advised to remember the same configuration will occur about 4 minutes earlier on the solar timescale. Both Universal Time (UT) and GPS time are measured in solar, not sidereal units. It is possible that the satellites will be pushed 50 km higher in the future to remove their current 4-minute regression, but, for now, it remains.

As mentioned earlier, the GPS constellation was designed to satisfy several critical concerns. Among them were the best possible coverage of the earth with the fewest satellites, the reduction of the effects of gravitational and atmospheric drag, sufficient upload and monitoring capability, and the achievement of maximum accuracy.
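
The roughly 4-minute daily regression described above is simple enough to check numerically, and the same arithmetic can be used to plan a repeat session. The sketch below is only an illustration: the sidereal-day length is an assumed standard value, and the function name and the session start time are hypothetical.

```python
from datetime import datetime, timedelta

SOLAR_DAY_S = 86400.0
SIDEREAL_DAY_S = 86164.0905  # assumed standard value, in solar seconds

# Daily regression: the difference between a solar day and a sidereal day
DAILY_SHIFT = timedelta(seconds=SOLAR_DAY_S - SIDEREAL_DAY_S)


def same_geometry_time(observed, days_later):
    """Clock time at which the sky geometry seen at `observed` repeats `days_later` days on."""
    return observed + timedelta(days=days_later) - days_later * DAILY_SHIFT


good_window = datetime(2024, 3, 1, 14, 30, 0)  # hypothetical favorable session start

print(f"Daily shift: {DAILY_SHIFT.total_seconds():.1f} s")
for day in range(1, 4):
    print(day, same_geometry_time(good_window, day).strftime("%Y-%m-%d %H:%M:%S"))
```

The shift accumulates, so a configuration observed today appears close to half an hour earlier on the clock a week later.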

The Space Segment: Dilution of Precision

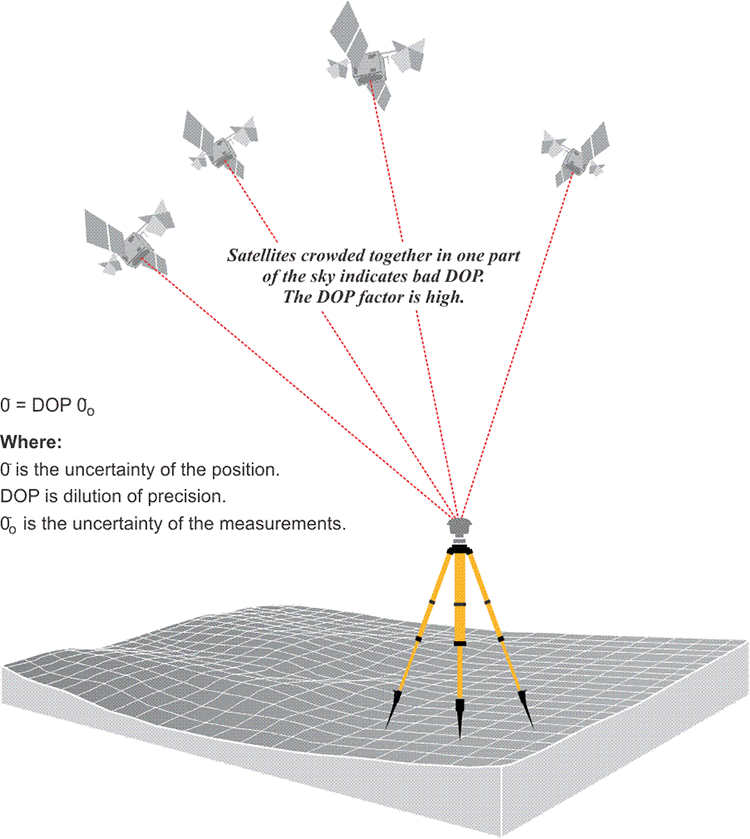

The distribution of the satellites above an observer’s horizon has a direct bearing on the quality of the position derived from them. As with some of its forerunners, the accuracy of a GPS position is subject to a geometric phenomenon called dilution of precision (DOP). This number is somewhat similar to the strength of figure consideration in the design of a triangulation network. DOP concerns the geometric strength of the figure described by the positions of the satellites with respect to one another and the GPS receivers. A low DOP factor is good; a high DOP factor is bad. In other words, when the satellites are in the optimal configuration for a reliable GPS position, the DOP is low; when they are not, the DOP is high.

Four or more satellites must be above the observer’s mask angle for the simultaneous solution of the clock offset and three dimensions of the receiver’s position. But if all of those satellites are crowded together in one part of the sky, the position would be likely to have an unacceptable uncertainty, and the DOP, or dilution of precision, would be high. In other words, a high DOP is like a warning that the actual errors in a GPS position are liable to be larger than you might expect. But remember, it is not the errors themselves that are directly increased by the DOP factor; it is the uncertainty of the GPS position that is increased by the DOP factor.

Now, since a GPS position is derived from a three-dimensional solution, there are several DOP factors used to evaluate the uncertainties in the components of a GPS position. For example, there is horizontal dilution of precision (HDOP) and vertical dilution of precision (VDOP) where the uncertainty of a solution for positioning has been isolated into its horizontal and vertical components, respectively. When both horizontal and vertical components are combined, the uncertainty of three-dimensional positions is called position dilution of precision (PDOP). There is also time dilution of precision (TDOP), which indicates the uncertainty of the clock. There is geometric dilution of precision (GDOP), which is the combination of all of the above. And finally, there is relative dilution of precision (RDOP), which includes the number of receivers, the number of satellites they can handle, the length of the observing session, as well as the geometry of the satellites’ configuration.
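
To make the relationship among these factors concrete, the sketch below computes HDOP, VDOP, PDOP, TDOP, and GDOP from satellite azimuths and elevations. It uses the common textbook formulation based on line-of-sight unit vectors in a local east, north, up frame, which is an assumption here and not a method described in this lesson. The function names and both sample skies are hypothetical, and RDOP is not covered.

```python
import math


def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("satellite geometry is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def dop_factors(sats_az_el_deg):
    """DOP factors for satellites given as (azimuth, elevation) pairs in degrees."""
    rows = []
    for az_deg, el_deg in sats_az_el_deg:
        az, el = math.radians(az_deg), math.radians(el_deg)
        rows.append([math.cos(el) * math.sin(az),  # east component
                     math.cos(el) * math.cos(az),  # north component
                     math.sin(el),                 # up component
                     1.0])                         # receiver clock term
    # Normal matrix (G transposed times G) and its inverse Q
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    q = invert(normal)
    return {
        "HDOP": math.sqrt(q[0][0] + q[1][1]),
        "VDOP": math.sqrt(q[2][2]),
        "PDOP": math.sqrt(q[0][0] + q[1][1] + q[2][2]),
        "TDOP": math.sqrt(q[3][3]),
        "GDOP": math.sqrt(q[0][0] + q[1][1] + q[2][2] + q[3][3]),
    }


spread = [(0, 90), (0, 15), (120, 15), (240, 15)]   # hypothetical well-spread sky
crowded = [(40, 50), (55, 60), (70, 45), (50, 70)]  # hypothetical crowded sky

for name, sky in (("spread", spread), ("crowded", crowded)):
    print(name, {k: round(v, 2) for k, v in dop_factors(sky).items()})
```

In this formulation PDOP squared is the sum of HDOP squared and VDOP squared, and GDOP adds the clock term, which mirrors the way the factors are combined in the paragraph above.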

PDOP is perhaps the most common, which combines both horizontal and vertical. But the idea is very straightforward in the sense that the better the geometry, the better the intersection of the ranges from the satellites, the lower that the dilution of precision value will be and the better the position derived will be. This is a very practical consideration in GPS work.

The user equivalent range error (UERE) is the total error budget affecting a pseudorange. It is the square root of the sum of the squares of the individual biases discussed in Chapter 2. Using a calculation like that mentioned, the PDOP (position dilution of precision) factor can be used to find the positional error that will result from a particular UERE at the one sigma level (68.27%). For example, supposing that the PDOP factor is 1.5 and the UERE is 6 m, the positional accuracy would be 9 m at the 1 sigma level (68.27%). In other words, the standard deviation of the GPS position is the dilution of precision factor multiplied by the square root of the sum of the squares of the individual biases (UERE). Multiplying the 1 sigma value times 2 would provide that 95% level of reliability in the error estimate, which would be 18 m.
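
The arithmetic in this example can be written out in a few lines of Python. The PDOP of 1.5 and UERE of 6 m come from the example above; the individual bias values in the error budget are hypothetical numbers chosen only to show the root-sum-square step, and the function names are illustrative.

```python
import math


def uere(biases_m):
    """Root-sum-square of the individual range biases, in meters."""
    return math.sqrt(sum(b * b for b in biases_m))


def position_error(pdop, uere_m, sigma_multiplier=1.0):
    """Positional error estimate: DOP factor times UERE, scaled to the chosen sigma level."""
    return sigma_multiplier * pdop * uere_m


# The example from the text: PDOP 1.5 and UERE 6 m
one_sigma = position_error(1.5, 6.0)        # 1 sigma level (68.27%)
two_sigma = position_error(1.5, 6.0, 2.0)   # roughly the 95% level
print(one_sigma, two_sigma)

# Hypothetical error budget in meters (not values from this lesson)
budget = {
    "satellite clock": 2.0,
    "ephemeris": 2.5,
    "ionosphere": 4.0,
    "troposphere": 1.0,
    "receiver noise and multipath": 3.0,
}
print(f"UERE from the hypothetical budget: {uere(budget.values()):.2f} m")
```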

The size of the DOP factor is inversely proportional to the volume of the tetrahedron described by the satellites' positions and the position of the receiver. The larger the volume of the body defined by the lines from the receiver to the satellites, the better the satellite geometry and the lower the DOP. An ideal arrangement of four satellites would be one directly above the receiver, the others 120° from one another in azimuth near the horizon. With that distribution, the DOP would be nearly 1, the lowest possible value. In practice, the lowest DOPs are generally around 2. The mask angle plays a part here. If you had four satellites, and three of them were at the horizon and one was directly overhead, this would be a very low dilution of precision value. However, you wouldn't want to track satellites that were right against the horizon. You want them above this mask angle, 10 or 15 degree mask angle, to try to minimize the effect of the ionosphere. The users of most GPS receivers can set a PDOP mask to guarantee that data will not be logged if the PDOP goes above the set value. A typical PDOP mask is 6. As the PDOP increases, the accuracy of the pseudorange positions probably deteriorate, and as it decreases, they probably improve. When a DOP factor exceeds a maximum limit in a particular location, indicating an unacceptable level of uncertainty exists over a period of time, that period is known as an outage. This expression of uncertainty is useful both in interpreting measured baselines and planning a GPS survey.
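
The idea of a PDOP mask and an outage can be sketched as a simple scan over predicted PDOP values. This is only an illustration of the concept, not how any particular receiver or planning package implements it. The function name and the hourly PDOP values are hypothetical; the mask of 6 is the typical value mentioned above.

```python
def find_outages(samples, pdop_mask=6.0):
    """Return (start, end) time pairs during which PDOP exceeds the mask.

    `samples` is a time-ordered list of (time, pdop) pairs.
    """
    outages = []
    start = None
    last_time = None
    for time, pdop in samples:
        if pdop > pdop_mask and start is None:
            start = time                        # an outage begins
        elif pdop <= pdop_mask and start is not None:
            outages.append((start, last_time))  # the outage ended at the previous sample
            start = None
        last_time = time
    if start is not None:
        outages.append((start, last_time))      # outage still open at the end of the data
    return outages


# Hypothetical hourly PDOP predictions as (hour, PDOP); not data from this lesson
predicted = [(0, 2.1), (1, 2.4), (2, 7.8), (3, 9.5),
             (4, 3.0), (5, 2.2), (6, 6.5), (7, 2.0)]

print(find_outages(predicted))
```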

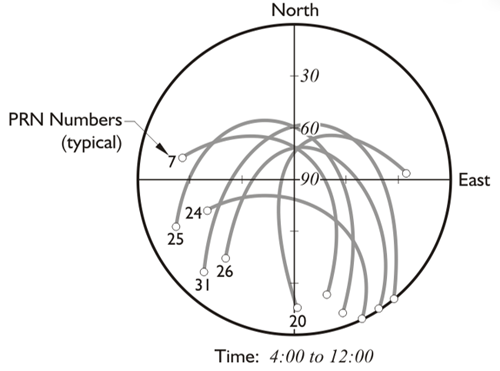

The Space Segment: Planning

The position of the satellites above an observer’s horizon is a critical consideration in planning a GPS survey. So, most software packages provide various methods of illustrating the satellite configuration for a particular location over a specified period of time. For example, the configuration of the satellites over the entire span of the observation is important; as the satellites move, the DOP changes. Fortunately, the dilution of precision can be worked out in advance. DOP can be predicted. It depends on the orientation of the GPS satellites relative to the GPS receivers. And since most GPS software allow calculation of the satellite constellation from any given position and time, they can also provide the accompanying DOP factors. Another commonly used plot of the satellite’s tracks is constructed on a graphical representation of the half of the celestial sphere. The observer’s zenith is shown in the center and the horizon on the perimeter. The program usually draws arcs by connecting the points of the instantaneous azimuths and elevations of the satellites above a specified mask angle. These arcs then represent the paths of the available satellites over the period of time and the place specified by the user. The plot of the polar coordinates of the available satellites with respect to time and position is just one of several tables and graphs available to help the GPS user visualize the constellation.
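
The polar plot described above amounts to a simple coordinate mapping from azimuth and elevation to a point on a disk. The sketch below assumes a radius that shrinks linearly from the horizon to the zenith, with north up and east to the right; actual planning software may use a different projection. The function names and the sample satellite pass are hypothetical.

```python
import math


def sky_plot_xy(azimuth_deg, elevation_deg):
    """Map azimuth and elevation to x, y on a unit-radius sky plot.

    The zenith is at the center (0, 0), the horizon lies on the unit circle,
    north is up (+y), and east is to the right (+x).
    """
    radius = (90.0 - elevation_deg) / 90.0
    az = math.radians(azimuth_deg)
    return radius * math.sin(az), radius * math.cos(az)


def visible_track(track, mask_angle_deg=10.0):
    """Plot coordinates for the part of a satellite track at or above the mask angle."""
    return [sky_plot_xy(az, el) for az, el in track if el >= mask_angle_deg]


# Hypothetical satellite pass as (azimuth, elevation) in degrees
track = [(310, 5), (320, 20), (340, 45), (10, 70), (60, 50), (90, 25), (100, 8)]

for x, y in visible_track(track):
    print(f"{x:+.3f} {y:+.3f}")
```

Connecting the resulting points in time order gives the arc for one satellite; repeating this for each satellite builds up the full plot.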

It has become common to think that you can simply turn on a GPS receiver and start working under all circumstances. However, the position of the satellites in the sky is critical to good positioning. A polar plot, as illustrated here, shows you the paths of the satellites as if you were looking straight up.

Another useful graph is available from many software packages. It shows the correlation between the number of satellites above a specified mask angle and the associated PDOP for a particular location during a particular span of time.

There are four spikes of unacceptable PDOP, labeled here for convenience. It might appear at first glance that these spikes are directly attributable to the drop in the number of available satellites. However, please note that while spikes 1 and 4 do indeed occur during periods of 4 satellite data, spikes 2 and 3 are during periods when there are 7 and 5 satellites available, respectively. It is not only the number of satellites above the horizon that determines the quality of GPS positions; one must also look at their position relative to the observer, the DOP, among other things. The variety of the tools to help the observer predict satellite visibility underlines the importance of their configuration to successful positioning.

The number of satellites is in the upper part of the chart. The PDOP, position dilution of precision, is in the lower part. Spikes one, two, three and four are areas of very large PDOP. During these moments, the positions that are collected are suspect. Starting at zero hour, the number of satellites varies between seven and eight; some of them begin to set at two hours. The constellation drops down to four satellites. The four that remain are crowded into one part of the sky. There's a rather extraordinary spike in the PDOP at that point. You wouldn't want to be observing at that time. Shortly thereafter, some satellites rise and the constellation goes back to eight and nine satellites; the PDOP drops below four, and that is a good time to observe.

However, even when there appear to be many satellites above the observer's horizon, there are more spikes in the PDOP. You may wonder why there are spikes if there are so many satellites. It is because the satellites are always moving relative to each other. And even though there are many, they can be crowded together and the PDOP high. At about 12 hours and moving on, the circumstances for observation are good. There are many satellites, the constellation is healthy, above the horizon, and the PDOP is low. However, between 18 and 20 hours, there are just four satellites again, and there is a spike in the PDOP.

So, as you see, it's not really straightforward. The PDOP can be high at any time. It is not correct to say that when the constellation above the horizon is large, the PDOP will be low. It is important to look at it in some detail. It's valuable to note that most processing software packages allow you to easily make graphs of the type shown here if you have a current ephemeris in your system. And it's lucky that those current ephemerides can be downloaded from the Internet as well as collected from the satellites.

Satellite Blocks

The Control Segment

There are government tracking and uploading facilities distributed around the world. These facilities not only monitor the L-band signals from the GPS satellites and update their Navigation Messages but also track the satellites’ health, their maneuvers, and many other things, even battery recharging. Taken together, these facilities are known as the Control Segment.

The Master Control Station (MCS), once located at Vandenberg Air Force Base in California, now resides at the Consolidated Space Operations Center (CSOC) at Schriever (formerly Falcon) Air Force Base near Colorado Springs, Colorado, and has been manned by the 2nd Space Operations Squadron, 2SOPS, since 1992. There is an alternate MCS at Vandenberg Tracking Station in California. The 2SOPS squadron controls the satellites' orbits. For example, they maneuvered the earlier blocks of satellites from the highly eccentric orbits into which they were originally launched to the desired mission orbit and spacecraft orientation. The Block IIF satellites were placed directly into their intended orbits. They monitor the state of each satellite's onboard battery, solar, and propellant systems. They resolve satellite anomalies, activate spare satellites, and control Selective Availability (SA) and Anti-Spoofing (A/S). They dump the excess momentum from the wheels, the series of gyroscopic devices that stabilize each satellite. With the continuous constellation tracking data available and aided by Kalman filter estimation to manage the noise in the data, they calculate and update the parameters in the Navigation message (ephemeris, almanac, and clock corrections) to keep the information within limits. This process is made possible by a persistent two-way communication with the constellation managed by the Control Segment that includes both monitoring and uploading accomplished through a network of ground antennas and monitoring stations.

The data that feeds the MCS comes from monitoring stations. These stations track the entire GPS constellation. In the past, there were limitations. There were only six tracking stations. It was possible for a satellite to go unmonitored for up to two hours each day. It was clear that the calculation of the ephemerides and the precise orbits of the constellation could be improved with more monitoring stations in a wider geographical distribution. It was also clear that if one of the six stations went down, the effectiveness of the Control Segment could be considerably hampered. These ideas, and others, led to a program of improvements known as the Legacy Accuracy Improvement Initiative, L-AII. During this initiative from August 18 to September 7 of 2005, six National Geospatial-Intelligence Agency, NGA, stations were added to the Control Segment. This augmented the information forwarded to the MCS with data from Washington, D.C., England, Argentina, Ecuador, Bahrain, and Australia. With this 12-station network in place, every satellite in the GPS constellation was monitored almost continuously from at least two stations when it reached at least 5° above the horizon.

Today, there are 6 Air Force and 11 National Geospatial-Intelligence Agency (NGA) monitoring stations. The monitoring stations track all the satellites; in fact, every GPS satellite is tracked by at least 3 of these stations all the time. The monitoring stations collect range measurements, atmospheric information, satellites' orbital information, clock errors, velocity, right ascension, and declination and send them to the MCS. They also provide pseudorange and carrier phase data to the MCS. The MCS needs this constant flow of information. It provides the basis for the computation of the almanacs, clock corrections, ephemerides, and other components that make up the Navigation message.

The new stations also improve the geographical diversity of the Control Segment, and that helps with the MCS isolation of errors, for example, making the distinction between the effects of the clock error from ephemeris errors. In other words, the diagnosis and solution of problems in the system are more reliable now because the MCS has redundant observations of satellite anomalies with which to work. Testing has shown that the augmented Control Segment and subsequent improved modeling have improved the accuracy of clock corrections and ephemerides in the Navigation Message substantially, and may contribute to an increase in the accuracy of real-time GPS of 15% or more.

Once the message is calculated, it needs to be sent back up to the satellites. Some of the stations have ground antennas for uploading. Four monitoring stations are collocated with such antennas. The stations at Ascension Island, Cape Canaveral, Diego Garcia, and Kwajalein upload navigation and program information to the satellites via S-band transmissions. The station at Cape Canaveral also has the capability to check satellites before launch.

The modernization of the Control Segment has been underway for some time, and it continues. In 2007, the Launch/Early Orbit, Anomaly Resolution and Disposal Operations mission (LADO) PC-based ground system replaced the mainframe-based Command-and-Control System (CCS). Since then, LADO has been upgraded several times. It uses Air Force Satellite Control Network (AFSCN) remote tracking stations only, not the dedicated GPS ground antennas, to support the satellites from spacecraft separation through checkout, anomaly resolution, and all the way to end of life disposal. It also helps in the performance of satellite movements and the presentation of telemetry simulations to GPS payloads and subsystems. Air Force Space Command (AFSPC) accepted the LADO capability to handle the most modern GPS satellites at the time, the Block IIF, in October 2010.

Another modernization program is known as the Next Generation Operational Control System or OCX. OCX will facilitate the full control of the new GPS signals like L5, as well as L2C and L1C and the coming GPS III program.

Kalman Filtering

Kalman filtering is named for Rudolf Emil Kalman’s linear recursive solution for least-squares filtering. It is used to smooth the effects of system and sensor noise in large datasets. In other words, a Kalman filter is a set of equations that can tease an estimate of the actual signal, meaning the signal with the minimum mean square error, from noisy sensor measurements. Kalman filtering is used to ensure the quality of some of the Master Control Station (MCS) calculations, and many GPS/GNSS receivers utilize Kalman filtering to estimate positions.

Kalman filtering can be illustrated by the example of an automobile speedometer. Imagine the needle of an automobile’s speedometer fluctuating between 64 and 72 mph as the car moves down the road. The driver might estimate the actual speed at 68 mph. Although he does not accept each of the speedometer’s instantaneous readings literally (there are too many of them), he has nevertheless taken them into consideration and constructed an internal model of his velocity. If the driver further depresses the accelerator and the needle responds by moving up, his reliance on his model of the speedometer’s behavior increases. Despite its vacillation, the needle has reacted as the driver thought it should: it went higher as the car accelerated. This behavior illustrates a predictable correlation between one variable, acceleration, and another, speed, and the driver is now more confident in his ability to predict the behavior of the speedometer.

The driver is illustrating adaptive gain, meaning that he is fine-tuning his model as he receives new information about the measurements. As he does, a truer picture of the relationship between the readings from the speedometer and his actual speed emerges, without recording every single number as the needle jumps around. The driver’s reasoning in this analogy is something like the action of a Kalman filter.

Without this ability to take the huge amounts of satellite data and condense them into a manageable number of components, GPS/GNSS processors would be overwhelmed. Kalman filtering is used in the uploading process to reduce the data to the satellite clock offset and drift, 6 orbital parameters, 3 solar radiation pressure parameters, biases of the monitoring station's clock, a model of the tropospheric effect, and earth rotational components.
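
The speedometer analogy can be sketched in a few lines of Python. This is only a minimal, one-variable illustration, not the filter used by the MCS or by any particular receiver. The function name kalman_1d, the noise variances, and the simulated readings are all assumptions chosen for the example; the model simply assumes the true speed changes very slowly.

```python
import random


def kalman_1d(readings, process_var=0.01, meas_var=4.0, p0=100.0):
    """Scalar Kalman filter for a slowly varying quantity.

    process_var, meas_var, and p0 are illustrative assumptions,
    not values taken from any GPS specification.
    """
    x = readings[0]  # initial estimate: the first reading
    p = p0           # initial variance of the estimate (large = unsure)
    estimates = []
    for z in readings:
        # Predict: speed is assumed unchanged, but uncertainty grows a little.
        p = p + process_var
        # Gain: how much to trust the new reading versus the current model.
        k = p / (p + meas_var)
        # Update: move the estimate toward the reading by the gain.
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


# Simulated needle fluctuating between 64 and 72 mph around a true 68 mph.
random.seed(0)
true_speed = 68.0
readings = [true_speed + random.uniform(-4.0, 4.0) for _ in range(200)]
estimates = kalman_1d(readings)
print("last reading:", round(readings[-1], 2))
print("last estimate:", round(estimates[-1], 2))
```

Notice that the filter keeps only two numbers, the current estimate and its variance, rather than the whole history of readings. The gain k starts near 1, when the filter knows little, and settles to a small value as confidence in the model grows, which loosely parallels the driver's adaptive gain.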

The User Segment

In the early years of GPS, the military concentrated on testing navigation receivers. But civilians got involved much sooner than expected and took a different direction: receivers with geodetic accuracy. Some of the first GPS receivers in commercial use were single-frequency, six-channel, codeless instruments. Their measurements were based on interferometry. As early as the 1980s, those receivers could measure short baselines to high accuracy and long baselines to 1 ppm (1 part per million, or about 1 mm per kilometer of baseline). It is true that the equipment was cumbersome, expensive, and, without access to the Navigation message, dependent on external sources for clock and ephemeris information. Still, these receivers were the first at work in the field commercially.

During the same era, a parallel trend was underway. The idea was to develop a more portable, dual-frequency, four-channel receiver that could use the Navigation message. Such an instrument did not need external sources for clock and ephemeris information and could be more self-contained. Unlike the original codeless receivers, which required that all units on a survey be brought together and their clocks synchronized twice a day, these receivers could operate independently. And while the codeless receivers needed to have satellite ephemeris information downloaded before their observations could begin, these receivers could derive their ephemeris directly from the satellite’s signal. Despite these advantages, the instruments developed on this model still weighed more than 40 pounds and were very expensive.

A few years later, a different kind of multichannel receiver appeared. Instead of using the L1 and L2 frequencies, it depended on L1 alone. On that single frequency, it tracked the C/A code and also measured the carrier phase observable. This type of receiver established the basic design for many of the GPS receivers in use today. They are multichannel receivers, and they can recover all of the components of the L1 signal. The C/A code is used to establish the signal lock and initialize the tracking loop. Then, the receiver not only reconstructs the carrier wave, it also extracts the satellite clock correction, ephemeris, and other information from the Navigation message. Such receivers are capable of measuring pseudoranges, along with the carrier phase and integrated Doppler observables.

Still, as some of the earlier instruments illustrated, the dual-frequency approach does offer significant advantages. It can be used to limit the effects of ionospheric delay, it can increase the reliability of the results over long baselines, and it certainly increases the scope of GPS procedures available to a surveyor. For these reasons, a substantial number of receivers utilize both frequencies. Dual- or multi-frequency receivers are the standard for geodetic applications of GPS.
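
To make the ionospheric advantage of two frequencies concrete, here is a small Python sketch of the commonly used first-order "ionosphere-free" pseudorange combination. It is offered as an illustration, not as a description of any specific receiver's processing. It relies on the ionospheric delay being, to first order, inversely proportional to the square of the carrier frequency. The function name, the 22,000 km range, and the 5 m delay on L1 are assumptions made up for the example; the L1 and L2 carrier frequencies are the nominal GPS values.

```python
F_L1 = 1575.42e6  # nominal GPS L1 carrier frequency, Hz
F_L2 = 1227.60e6  # nominal GPS L2 carrier frequency, Hz


def iono_free_pseudorange(p1, p2, f1=F_L1, f2=F_L2):
    """Combine pseudoranges on two frequencies so that the first-order
    ionospheric delay (proportional to 1/f^2) cancels."""
    gamma = (f1 / f2) ** 2
    return (gamma * p1 - p2) / (gamma - 1.0)


# Hypothetical scenario: values below are assumptions for illustration only.
true_range = 22_000_000.0                   # meters
delay_l1 = 5.0                              # ionospheric delay on L1, meters
delay_l2 = delay_l1 * (F_L1 / F_L2) ** 2    # larger delay on the lower frequency

p1 = true_range + delay_l1   # what an L1-only receiver would measure
p2 = true_range + delay_l2

combined = iono_free_pseudorange(p1, p2)
print("L1-only error (m):", p1 - true_range)
print("dual-frequency error (m):", round(combined - true_range, 6))
```

A single-frequency receiver cannot separate the delay from the range in this way and must depend on a model of the ionosphere or on differencing over short baselines. The combination removes only the first-order ionospheric term, and it amplifies measurement noise somewhat, which is one reason it matters most over long baselines.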

The military planned, built, and continues to maintain GPS. It is, therefore, no surprise that a large component of the user segment of the system is military. While surveyors and geodesists have the distinction of being among the first civilians to incorporate GPS into their practice and are sophisticated users, their number is limited. In fact, it is quite small compared with the explosion of applications the technology now has among the general public. The use of GPS in positioning, navigation, and timing (PNT) applications in precision agriculture, machine control, aviation, railroads, finance, autonomous vehicles, and the marine industry is ubiquitous. With GPS incorporated into smartphones and navigation systems, it is no exaggeration to say that GPS technology has transformed civilian businesses and lifestyles around the world. The uses the general public finds for GPS will undoubtedly continue to grow as the cost and size of the receivers continue to shrink.

Since it was instituted, GPS has become a utility. The user segment is growing so dramatically that it is hard to conceptualize. That growth will continue and will be augmented by GNSS, which we'll talk more about in the upcoming lessons.

Discussion and Quiz

Discussion Instructions

To continue the discussion begun by this lesson, I would like to pose this question:

Providing 24-hour, worldwide, real-time, high-accuracy navigation for both stationary and moving platforms in three dimensions was a tall order in the 1970s. What are a couple of technological elements that became incorporated into GPS that helped achieve DOD's specifications for the system, and where did they originate?

To participate in the discussion, please go to the Lesson 3 Discussion Forum in Canvas. (That forum can be accessed at any time by going to the GEOG 862 course in Canvas and then looking inside the Lesson 3 module.)

Lesson 3 Quiz

This quiz will cover Lessons 1, 2, and 3.

The Lesson 3 Quiz is located in the Lesson 3 module in Canvas.

Summary

The uses the general public finds for GPS will undoubtedly continue to grow as the cost and size of the receivers continue to shrink. The number of users in surveying will be small when compared with the large numbers of trains, cars, boats, and airplanes with GPS receivers. GPS will be used to position all categories of civilian transportation, as well as law enforcement and emergency vehicles. Nevertheless, surveying and geodesy have the distinction of being the first practical application of GPS, and the most sophisticated uses and users are still under its purview. That situation will likely continue for some time.

The number, range, and complexity of GPS receivers have increased in recent years. Prices and features vary widely, which sometimes makes it difficult to match the equipment with the application. Part of Lesson 4 will be devoted to a detailed discussion of the GPS receivers themselves.

Before you go on to Lesson 4, double-check the Lesson 3 Checklist to make sure you have completed all of the activities listed there.