The origins of commercial multispectral remote sensing can be traced to the interpretation of natural color and color-infrared (CIR) aerial photography in the early 20th century. CIR film was developed during World War II as an aid in camouflage detection (Jensen, 2007). It also proved to be of significant value in locating vegetation and monitoring its condition. In CIR imagery, healthy green vegetation shows up in shades of red; deep, clear water appears dark or almost black; and concrete and gravel appear in shades of grey. CIR photography captured under the USGS National Aerial Photography Program was manually interpreted to produce National Wetlands Inventory (NWI) maps for much of the United States. While film is quickly being replaced by direct digital acquisition, most digital aerial cameras today are designed to replicate these familiar natural color or color-infrared multispectral images.

Computer monitors are designed to display three color bands simultaneously. Natural color image data is composed of red, green, and blue bands. Color-infrared data is composed of infrared, red, and green bands. For multispectral data containing more than three spectral bands, the user must choose a subset of three bands to display at any given time and must map those three bands to the computer display in such a way as to render an interpretable image. Module 2 of the Esri Virtual Campus course, “Working with Rasters in ArcGIS Desktop,” gives a good overview of the display of multiband rasters and common 3-band combinations of multiband data sets from sensors such as Landsat and SPOT.
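The band-to-display mapping described above can be sketched in a few lines of Python with NumPy. This is only an illustration under stated assumptions: the function name `make_composite`, the percentile stretch, and the band order of the sample array (blue, green, red, near-infrared) are all hypothetical choices, not part of any particular software package or sensor product.

```python
import numpy as np

def make_composite(cube, band_order, low_pct=2.0, high_pct=98.0):
    """Build a displayable 3-band image from a multiband raster.

    cube       : array of shape (bands, rows, cols)
    band_order : three zero-based band indices sent to the display's
                 red, green, and blue channels, in that order
    """
    if len(band_order) != 3:
        raise ValueError("exactly three bands can be displayed at once")
    channels = []
    for idx in band_order:
        band = cube[idx].astype(np.float64)
        # Simple percentile stretch so each band fills the 0..1 display range
        lo, hi = np.percentile(band, [low_pct, high_pct])
        if hi <= lo:
            scaled = np.zeros_like(band)
        else:
            scaled = np.clip((band - lo) / (hi - lo), 0.0, 1.0)
        channels.append(scaled)
    return np.dstack(channels)  # shape (rows, cols, 3)

# Synthetic 4-band image; ASSUMED band order: blue, green, red, near-infrared
rng = np.random.default_rng(0)
cube = rng.integers(0, 1024, size=(4, 50, 60))

natural_color = make_composite(cube, (2, 1, 0))   # red, green, blue
color_infrared = make_composite(cube, (3, 2, 1))  # infrared, red, green
```

In the color-infrared composite, the infrared band drives the display's red channel, which is why strongly infrared-reflective vegetation appears red. Other three-band subsets can be tried simply by changing the index tuple.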

Spaceborne Sensors

Since the 1967 inception of the Earth Resources Technology Satellite (ERTS) program (later renamed Landsat), mid-resolution spaceborne sensors have provided the vast majority of multispectral datasets to image analysts studying land use/land cover change, vegetation and agricultural production trends and cycles, water and environmental quality, soils, geology, and other earth resource and science problems. Landsat has been one of the most important sources of mid-resolution multispectral data globally. The history of the program and the specifications for each of the Landsat missions are covered in Chapter 6 of Campbell (2011).

The French SPOT satellites have been another important source of high-quality, mid-resolution multispectral data. The imagery is sold commercially and is significantly more expensive than Landsat data. SPOT can also collect stereo pairs; the images in a pair are captured on successive days by the same satellite viewing off-nadir. Collection of stereo pairs requires special control of the satellite; therefore, the availability of stereo imagery is limited. Both traditional photogrammetric terrain extraction techniques and automatic correlation can be used to create topographic data in inaccessible areas of the world, especially where a digital surface model may be an acceptable alternative to a bare-earth elevation model.

DigitalGlobe (QuickBird and WorldView) and GeoEye (IKONOS and OrbView) collect high-resolution multispectral imagery that is sold commercially to users throughout the world. US Department of Defense users and partners have access to these datasets through commercial procurement contracts; therefore, these satellites are quickly becoming a critical source of multispectral imagery for the geospatial intelligence community. Bear in mind that the trade-off for high spatial resolution is limited geographic coverage. For vast areas, it is difficult to obtain seamless, cloud-free, high-resolution multispectral imagery within a single season or at the particular moment of the phenological cycle that interests the researcher.

Airborne Sensors

Digital aerial cameras were developed to replicate and improve upon the capabilities of film cameras; therefore, most commercially available medium- and large-format mapping cameras produce panchromatic, natural color, and color-infrared imagery. They are, in fact, multispectral remote sensing systems. Most are based on two-dimensional area arrays. The Leica Geosystems ADS-40, which makes use of linear array technology, is the exception. This sensor was described in some detail in Lesson 2. The unique design of this instrument allows it to capture stereoscopic imagery in a single pass, but georeferencing of the linear array data is more complex than for a frame image.

The ADS-40 and the Z/I Digital Modular Camera (DMC) are being used extensively in the USDA NAIP program to capture high-resolution multispectral data over most of the conterminous United States each growing season. Be aware, however, that although NAIP data is orthorectified to National Digital Orthophoto Program (NDOP) standards, it is not otherwise extensively processed or radiometrically calibrated. The USDA uses it primarily for visual verification and interpretation, not for digital classification.

Georeferencing

Georeferencing an analog or digital photograph depends on the interior geometry of the sensor as well as the spatial relationship between the sensor platform and the ground. The single vertical aerial photograph is the simplest case; we can use the internal camera model and six parameters of exterior orientation (X, Y, Z, roll, pitch, and yaw) to extrapolate a ground coordinate for each identifiable point in the image. We can either compute the exterior orientation parameters from a minimum of three ground control points using space resection equations, or we can use direct measurements of the exterior orientation parameters obtained from GPS and an inertial measurement unit (IMU).
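As a rough illustration of how the six exterior orientation parameters turn an image point into a ground coordinate, the sketch below projects a ray from the camera through the image point until it meets a horizontal plane. It rests on several assumptions that are not from the lesson: an ideal distortion-free camera, flat terrain at a known elevation `ground_z`, and a rotation built as Rz(yaw) · Ry(pitch) · Rx(roll). Photogrammetric software uses various angle conventions, so the function names and the rotation order here are hypothetical.

```python
import numpy as np

def rotation_matrix(roll, pitch, yaw):
    """Camera-to-ground rotation, ASSUMED order Rz(yaw) @ Ry(pitch) @ Rx(roll).

    Angles are in radians.
    """
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx

def image_to_ground(x, y, focal_length, camera_xyz, roll, pitch, yaw, ground_z):
    """Intersect the ray through image point (x, y) with the plane Z = ground_z.

    x, y and focal_length share one unit (e.g. metres on the focal plane);
    camera_xyz and ground_z are in ground units.
    """
    # Direction of the imaging ray in the camera frame, rotated to the ground frame
    ray = rotation_matrix(roll, pitch, yaw) @ np.array([x, y, -focal_length])
    if ray[2] >= 0:
        raise ValueError("ray does not point toward the ground")
    x0, y0, z0 = camera_xyz
    scale = (ground_z - z0) / ray[2]  # stretch factor from image to ground
    return x0 + scale * ray[0], y0 + scale * ray[1]

# Vertical photo: 0.15 m focal length, camera 1500 m above flat ground
ground_x, ground_y = image_to_ground(
    0.01, -0.02, 0.15, (5000.0, 3000.0, 1500.0), 0.0, 0.0, 0.0, 0.0
)
```

With all three angles set to zero, the image scale is 0.15 / 1500, or 1:10,000, so the image offsets of 0.01 m and -0.02 m land roughly 100 m and -200 m from the camera position in X and Y. Note that the result depends on `ground_z`: without knowledge of the terrain height, the ray alone does not fix a ground coordinate.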

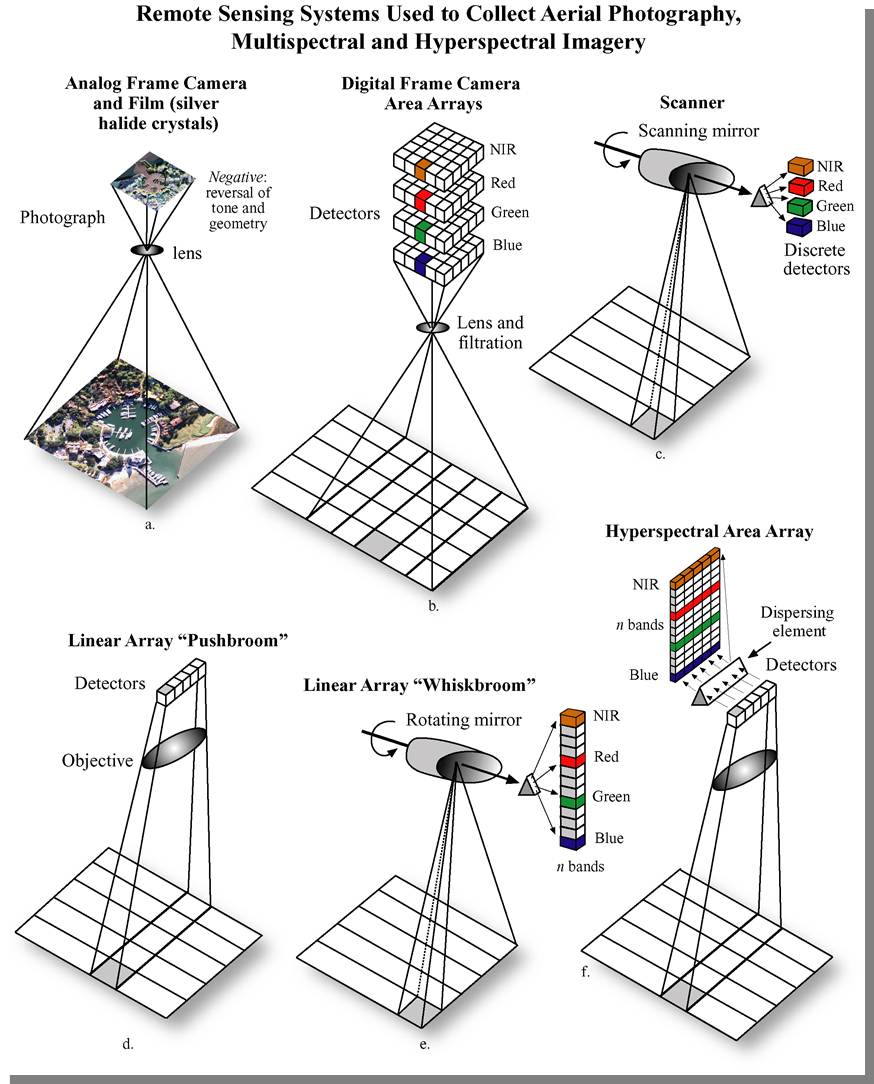

The internal geometric design of a spaceborne multispectral sensor is quite different from that of an aerial camera. The accompanying figure (from Jensen, 2007, Remote Sensing of the Environment) shows six types of remote sensing systems, comparing and contrasting those using scanning mirrors, linear pushbroom arrays, linear whiskbroom arrays, and frame area arrays. The digital frame area array is analogous to the single vertical aerial photograph.

A linear array, or pushbroom scanner, is used in many spaceborne imaging systems, including SPOT, IRS, QuickBird, OrbView, and IKONOS. The position and orientation of the sensor are precisely tracked and recorded in the platform ephemeris. However, other geometric distortions, such as skew caused by the rotation of the earth, must be corrected before the imagery can be referenced to a ground coordinate system.

Several airborne systems, including the Leica ADS-40 and the ITRES CASI, SASI, and TABI, also employ the pushbroom design. Each line of imagery is captured at a unique moment in time, corresponding with an instantaneous position and attitude of the aircraft. When direct georeferencing is integrated with these sensors, each single line of imagery has the exterior orientation parameters needed for rectification. However, without direct georeferencing, it is impossible to reconstruct the image geometry; the principles of space resection only apply to a rigid two-dimensional image.
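The idea that every pushbroom line carries its own exterior orientation can be sketched as a time lookup: navigation records from the GPS/IMU are interpolated to the capture time of each image line. The function name `per_line_orientation`, the record layout, and the use of plain linear interpolation are assumptions made for illustration. Operational systems apply more careful trajectory processing, and linear interpolation of angles is only reasonable for small attitude changes with no wraparound.

```python
import numpy as np

def per_line_orientation(line_times, nav_times, nav_params):
    """Assign exterior orientation parameters to each pushbroom image line.

    line_times : capture time of each image line, in seconds
    nav_times  : increasing timestamps of the navigation records, in seconds
    nav_params : shape (n_records, 6); ASSUMED column order
                 X, Y, Z, roll, pitch, yaw
    Returns an array of shape (n_lines, 6), one parameter set per line.
    """
    nav_params = np.asarray(nav_params, dtype=float)
    columns = [
        np.interp(line_times, nav_times, nav_params[:, k])
        for k in range(nav_params.shape[1])
    ]
    return np.column_stack(columns)

# Two hypothetical navigation records one second apart; the aircraft
# advances 60 m in X and rolls slightly during that second
nav_times = [0.0, 1.0]
nav_params = [
    [0.0, 0.0, 1000.0, 0.00, 0.0, 0.0],
    [60.0, 0.0, 1000.0, 0.02, 0.0, 0.0],
]
line_times = np.linspace(0.0, 1.0, 5)  # five image lines in that second
line_eo = per_line_orientation(line_times, nav_times, nav_params)
```

Each row of `line_eo` could then be used to rectify its own image line, which is the step that has no equivalent when navigation data is missing.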

The internal geometry of images captured by spaceborne scanning systems is much more complex. Across-track scanning and whiskbroom systems are more akin to a lidar scanner than to a digital area array imager. Each pixel is captured at a unique moment in time; the instantaneous position of the scanning device must also be factored into the image rectification. For this reason, a unique (and often proprietary) sensor model must be applied to construct a coherent two-dimensional image from millions of individual pixels. Add to this complexity the fact that there is actually a stack of recording elements, one for each spectral band, and that all must be precisely co-registered pixel-for-pixel to create a useful multiband image.

Direct georeferencing solves a large part of the image rectification problem, but not all of it. Remember, in our discussions of space resection and intersection, we learned that we can only extrapolate an accurate coordinate on the ground when we actually know where the ground is in relation to the sensor and platform. We need some way to control the scale of the image. Either we need stereo pairs to generate intersecting light rays, or we need some known points on the ground.

A georeferenced satellite image can be orthorectified if an appropriate elevation model is available. The effects of relief displacement are often less pronounced in satellite imagery than in aerial photography, due to the great distance between the sensor and the ground. It is not uncommon for scientists and image analysts to make use of satellite imagery that has been registered or rectified, but not orthorectified. If one is attempting to identify objects or detect change, the additional effort and expense of orthorectification may not be necessary. If precise distance or area measurements are to be made, or if the analysis results are to be used in further GIS analysis, then orthorectification may be important.

It is important for the analyst to be aware of the effects of each form of georeferencing on the spatial accuracy of their analysis results and the implications of this spatial accuracy in the decision-making process.
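The earlier point about relief displacement and sensor distance can be checked with a small calculation. The sketch below assumes a simplified geometry that is not from the lesson: a vertical view, a flat datum, and no earth curvature. Under those assumptions, a point standing `height` above the datum at a horizontal distance `ground_distance` from nadir is shifted outward on the datum by height × distance / (sensor height − height). The function name and the sample altitudes are illustrative only.

```python
def relief_displacement(height, ground_distance, sensor_height):
    """Horizontal shift on the datum of a point raised above it.

    All arguments are in metres. ASSUMES a vertical view over a flat datum
    (no earth curvature), with sensor_height measured above that datum.
    """
    if not 0 <= height < sensor_height:
        raise ValueError("height must lie between the datum and the sensor")
    return height * ground_distance / (sensor_height - height)

# A point 100 m above the datum, 1000 m from the nadir point
aerial = relief_displacement(100.0, 1000.0, 3000.0)       # aircraft at 3 km
satellite = relief_displacement(100.0, 1000.0, 705000.0)  # orbit near 705 km
print(f"aerial: {aerial:.2f} m, satellite: {satellite:.3f} m")
```

For the same ground offset, the displacement seen from orbit is a small fraction of the aerial value. The comparison is not entirely one-sided, because satellite scenes also extend much farther from nadir than a single aerial frame, which is one reason orthorectification can still matter for precise measurement.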