Common Mechanics Used in VR Development

Most of the mechanics used in desktop experiences can also be used in VR. However, not all of them are good choices for VR experiences. Two prominent examples of mechanics that need rethinking are locomotion and interaction. In this section, we will briefly explore the different locomotion and interaction mechanics that are designed specifically for VR experiences.

Locomotion in VR

Locomotion can be defined as the ability to move from one place to another. There are many ways in which locomotion can be implemented in games and other virtual experiences. Depending on the employed camera perspective and movement mechanics, the users can move their viewpoint within the virtual space in different ways. Obviously, there are fundamental differences in locomotion possibilities when comparing 2D, 2.5D, and 3D experiences. Even within the category of 3D experiences, locomotion can take many different forms. To give you a very general comparison, consider the following:

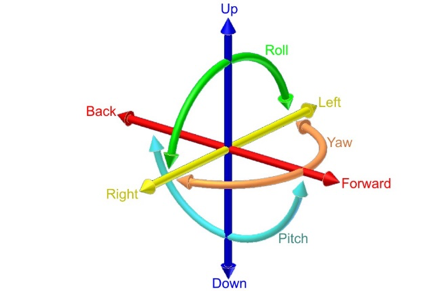

Locomotion in 2D games is limited to the confines of the 2D space (X and Y axes). The camera used in 2D games employs an orthographic projection, so the game space is seen as “flat”. In these experiences, users can move a character using mechanics such as “point and click” (as is the case in most 2D real-time strategy games) or using keystrokes on a keyboard or other types of controllers. The movement of the orthographic camera in these experiences is also limited to the confines of the 2D space. Consequently, the users will not experience the illusion of perceiving the game through the eyes of the character (e.g. Cuphead, Super Mario Bros., etc.).

The same type of locomotion can be employed in 3D space as well. For instance, in the City Builder game we have seen in this lesson, the camera uses a perspective projection, but the locomotion of the user is limited to the X and Z axes. The three-dimensional perspective of the camera (the viewpoint of the user) nevertheless creates an illusion of depth and a feeling of existing “in the sky”.

In more sophisticated 3D games, such as first-person shooters (FPS), where it is desired that the players experience the game through the eyes of their character, the feeling of movement is entirely different. We stress the word “feeling” since the logic behind translating (moving) an object from one point to another is not that different in a 2D space compared to a 3D space. However, the differences in the resulting feeling of camera movement are vast (a detached, distant orthographic view vs. a perspective projection through the eyes of the character). In many modern games (not necessarily shooters) with a first-person camera perspective, the players can be given six degrees of freedom for movement and rotation.
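To make the difference between the two camera projections concrete, here is a minimal Python sketch (not Unity code). The function names and the `focal_length` parameter are assumptions made for illustration. It projects the same point at two depths: the flat, parallel projection ignores depth, while the perspective projection divides by it, which is what produces the illusion of depth.

```python
def project_orthographic(point):
    """Flat (parallel) projection: drop the depth coordinate entirely."""
    x, y, _z = point
    return (x, y)


def project_perspective(point, focal_length=1.0):
    """Pinhole-style projection: divide by depth so distant points shrink."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


# The same offset from the camera axis, seen at two different depths.
near = (2.0, 1.0, 2.0)
far = (2.0, 1.0, 8.0)

print(project_orthographic(near), project_orthographic(far))  # identical
print(project_perspective(near), project_perspective(far))    # far one is smaller
```

With the flat projection both points land on the same screen position, whereas with the perspective projection the distant point moves toward the center of the view.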

Video: Making an FPS Game with Unity using the Asset Store (3:32)

The FPS Controller we used in the previous lessons is an example of providing such freedom (except for rotation around the Z-axis). In such games, however, movement is almost always implemented as a smooth transition from one point to another. For instance, in the two locomotion mechanics we used in this course (the FPS Controller and camera movement), we gradually increase or decrease the Position and Rotation properties of the Transform component attached to GameObjects. This gradual change of values over time (for as long as we hold down a button, for instance) creates the illusion of smoothly moving from one point to another.
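The gradual change of position described above can be sketched in a few lines of engine-independent Python. This is only an illustration of the idea, not the FPS Controller's actual implementation. The names `step_position` and `simulate_hold`, the speed, and the fixed frame time are all assumptions.

```python
def step_position(position, direction, speed, dt):
    """Advance a position by speed * dt along a direction vector (one frame)."""
    return tuple(p + d * speed * dt for p, d in zip(position, direction))


def simulate_hold(position, direction, speed, dt, frames):
    """Apply the per-frame step for as long as the button is 'held'."""
    for _ in range(frames):
        position = step_position(position, direction, speed, dt)
    return position


start = (0.0, 1.8, 0.0)    # assumed eye height of 1.8 units
forward = (0.0, 0.0, 1.0)  # unit vector along the Z-axis

# Holding "forward" for 60 frames at 60 frames per second, moving at 3 units/s.
end = simulate_hold(start, forward, speed=3.0, dt=1.0 / 60.0, frames=60)
print(end)  # Z has advanced by roughly 3 units; X and Y are unchanged
```

Because each frame changes the position only slightly, the viewpoint appears to glide. This continuous motion of the viewpoint is the property that becomes problematic in VR.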

As previously mentioned, there are many ways in which locomotion can be realized in virtual environments, depending on the type and genre of the experience and the camera projection used. Explaining all the different varieties would be outside the scope of this course. Therefore, we will focus on the one that is most applicable in VR.

The experience of Virtual Reality closely resembles a first-person perspective. This is the most effective way of using VR to create an immersive feeling of perceiving a virtual world from a viewpoint natural to us. It does not come as a surprise that in the early days of mainstream VR development, many developers carried over the locomotion techniques used in conventional first-person desktop experiences. Although we can certainly use locomotion mechanics such as “smooth transition” in VR, the resulting user experience will not be the same. As a matter of fact, doing so can cause a well-known negative effect associated with symptoms such as disorientation, eyestrain, dizziness, and even nausea, generally referred to as simulator sickness.

According to Wienrich et al., “Motion sickness usually occurs when a person feels movement but does not necessarily see it. In contrast, simulator sickness can occur without any actual movement of the subject” [1]. One way to interpret this is that simulator sickness is a form of physical-psychological paradox that people experience when they see themselves move in a virtual environment (in this case through VR HMDs) but do not physically feel it. The most widely accepted theory as to why this happens is the “sensory conflict theory” [2]. There are, however, several other theories that try to model or predict simulator sickness (e.g. the poison theory [3], the model of negative reinforcement [4], [5], the eye movement theory [4], [5], and the postural instability theory [6]). Simulator sickness in VR is more severe in cases where the users must locomote over a long distance, particularly using smooth transition. As such, different approaches have been researched to reduce this negative experience. One approach, suggested by Wienrich et al. [1], is to include a virtual nose in the experience so the users have a “rest frame” (a static point that does not move) when they put on the HMD.

Other approaches, such as dynamically reducing the field of view (FOV) while the user is moving or rotating, have also been shown to be effective in reducing simulator sickness.
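As a rough illustration of dynamic FOV reduction, the following Python sketch narrows the visible field of view as the user's linear or angular speed increases. All names and numeric values here (`restricted_fov`, the 100 and 60 degree limits, the speed thresholds) are assumptions chosen for the example and are not taken from any particular study or SDK.

```python
def clamp01(value):
    """Clamp a number to the range [0, 1]."""
    return max(0.0, min(1.0, value))


def restricted_fov(linear_speed, angular_speed,
                   full_fov=100.0, min_fov=60.0,
                   max_linear=4.0, max_angular=90.0):
    """Return the visible FOV (degrees) for the current motion.

    The faster the user translates (units/s) or rotates (degrees/s),
    the narrower the view, down to min_fov.
    """
    intensity = max(clamp01(abs(linear_speed) / max_linear),
                    clamp01(abs(angular_speed) / max_angular))
    return full_fov - (full_fov - min_fov) * intensity


print(restricted_fov(0.0, 0.0))   # standing still: full view
print(restricted_fov(2.0, 0.0))   # moving at half the threshold speed
print(restricted_fov(0.0, 90.0))  # turning quickly: narrowest view
```

In practice, the narrowed view is usually rendered as a soft vignette around the edges of the image, and the value is smoothed over time so that the restriction does not pop in and out.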

In addition to these approaches, novel and tailored mechanics for implementing locomotion, specifically in VR, have also been proposed. Here we will list some of the most popular ones:

- Physical movement: In the earlier versions of VR HMDs, no external sensors were used for tracking the position of the users. As such, physically moving around a room and experiencing translation (movement) inside the virtual environment was not easily achievable (in a few cases, other sensors such as Microsoft Kinect were used for this purpose to some extent). In newer HMD models, however, external sensors were added to resolve this shortcoming by providing room-scale tracking. For instance, Oculus Rift and HTC Vive both have sensors that can track the position of the user within a specific boundary in a physical space. This allows the users to freely and naturally walk around (as well as rotate, sit, and jump) within that boundary and experience the movement of their perspective in VR. As we have already seen in Lesson 1, the latest generations of HMDs, such as Oculus Quest, employ inside-out tracking technology, which eliminates the need for external sensors. Using these HMDs, the users are not bound to a specified physical confine, and they can freely move around in a much larger physical space. This method of locomotion is the most natural one we can use in VR.

Video: Oculus Insight VR Positional Tracking System (Sep 2018) (02:39)

- Teleportation: Teleportation is still considered the most popular locomotion system in VR (although this may change soon due to the emergence of inside-out tracking technology). It allows users to jump (teleport) from one location to another inside a virtual environment. There are different types of teleportation as well. The most basic form is instant teleportation, where the perspective of the user moves from one location to another instantaneously when they point to a location and click a button on their controller.

Other forms of teleportation include adding effects when moving the user’s perspective from one location to another (e.g. fading, sounds, seeing a projection of the avatar move, etc.), or providing a preview of the destination point before actually teleporting to that location.

Another interesting, yet different, example of teleportation is the “thrown-object teleport”, where instead of pointing at a specific location, the user throws an object (using natural gestures for grabbing and throwing objects in VR, as we will discuss in the next section) and then teleports to the location where the object comes to rest.
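The two teleportation variants described above can be sketched as follows. This is an engine-independent Python illustration under stated assumptions: the walkable area is a simple rectangle on the X/Z plane, the thrown object is treated as a point mass that stops where it first hits a flat floor (no bouncing or rolling), and all function and parameter names are hypothetical.

```python
import math


def teleport(current, target, area_min, area_max):
    """Instant teleport: jump to the target in one step if it is walkable.

    area_min and area_max are (x, z) corners of an assumed rectangular
    walkable area. An invalid target leaves the user where they are.
    """
    x, _y, z = target
    inside = (area_min[0] <= x <= area_max[0]
              and area_min[1] <= z <= area_max[1])
    return tuple(target) if inside else tuple(current)


def thrown_object_landing(release_position, release_velocity,
                          gravity=9.81, floor_y=0.0):
    """Estimate where a thrown object first reaches the floor."""
    x0, y0, z0 = release_position
    vx, vy, vz = release_velocity
    height = y0 - floor_y
    if height < 0:
        raise ValueError("object must be released at or above the floor")
    # Solve height + vy*t - 0.5*gravity*t^2 = 0 for the positive root.
    t = (vy + math.sqrt(vy * vy + 2.0 * gravity * height)) / gravity
    return (x0 + vx * t, floor_y, z0 + vz * t)


user = (0.0, 0.0, 0.0)
pointed_at = (3.0, 0.0, 4.0)
user = teleport(user, pointed_at, area_min=(-5.0, -5.0), area_max=(5.0, 5.0))

landing = thrown_object_landing((3.0, 1.25, 4.0), (2.0, 0.0, 0.0), gravity=10.0)
user = teleport(user, landing, area_min=(-5.0, -5.0), area_max=(5.0, 5.0))
print(user)
```

Unlike smooth movement, there are no intermediate positions: the viewpoint is assigned the destination directly. Effects such as a fade would be layered around that single assignment.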

- Arm swing: This is a semi-natural way to locomote in VR. Users swing their arms while holding the controllers, and the swinging gesture translates their perspective in the virtual environment. In a typical implementation, the faster users swing their arms, the faster their viewing perspective moves in the virtual environment. This is a rather useful locomotion mechanic when the users are required to travel a relatively long distance and you do not want them to miss anything along the way by jumping from point to point.

- Grabbing and locomoting: Imagine a rock-climbing experience in VR, where the user must climb a surface. An arm swing gesture is probably not the best locomotion mechanic in this case to translate the perspective of the user along the Y-axis (as the user climbs up). By colliding with and grabbing GameObjects such as rocks, however, the user can locomote along the X, Y, or Z axes in a more natural way. This locomotion mechanic is used in many different VR experiences for climbing ladders, using zip-lines, etc.

- Dragging: This is a particularly interesting and useful locomotion technique, specifically for situations where the user has a top-down (overview) perspective of the virtual environment. Consider a virtual experience where the size of the user is disproportionate to the environment (i.e. they are a giant) and they need to navigate over a large terrain. One way to implement locomotion in such a scenario is to enable users to grab the “world” and drag their perspective along the X or Z axes.
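Two of the gesture-driven mechanics in the list above, arm swing and dragging, can be sketched in a few lines of engine-independent Python. The function names, the `gain` and `scale` factors, and the speed cap are assumptions for illustration. A real implementation would read controller poses from the VR SDK on every frame.

```python
def arm_swing_speed(left_hand_speed, right_hand_speed,
                    gain=0.5, max_speed=4.0):
    """Map how fast the controllers are swinging to a forward speed.

    Faster swinging gives faster movement, up to an assumed cap.
    """
    swing = (abs(left_hand_speed) + abs(right_hand_speed)) / 2.0
    return min(max_speed, gain * swing)


def drag_viewpoint(viewpoint, hand_previous, hand_current, scale=1.0):
    """Move the viewpoint opposite to the hand's motion on the X and Z axes.

    Pulling the hand toward you moves you forward, as if the user had
    grabbed the world and dragged it. Height (Y) is left unchanged.
    """
    dx = hand_current[0] - hand_previous[0]
    dz = hand_current[2] - hand_previous[2]
    x, y, z = viewpoint
    return (x - dx * scale, y, z - dz * scale)


print(arm_swing_speed(2.0, 4.0))    # moderate swing
print(arm_swing_speed(20.0, 20.0))  # very fast swing, capped

giant = (0.0, 10.0, 0.0)
giant = drag_viewpoint(giant, (0.0, 1.0, 0.0), (0.5, 1.0, -0.25), scale=4.0)
print(giant)
```

A climbing variant of the same idea, as in the grabbing mechanic, would apply the hand displacement on the Y-axis as well while a rock or ladder rung is held.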

There are many other locomotion mechanics for VR (e.g. mixing teleportation and smooth movement, run-in-place locomotion, combining re-orientation of the world with teleportation, etc.) that we did not cover in this section. However, the most popular and widely used ones have been briefly introduced here.

References

[1] C. Wienrich, C. K. Weidner, C. Schatto, D. Obremski, J. H. Israel, "A Virtual Nose as a Rest-Frame - The Impact on Simulator Sickness and Game Experience", 2018, pp. 1-8.

[2] J. T. Reason, J. J. Brand, Motion Sickness, London: Academic Press, 1975.

[3] M. Treisman, "Motion Sickness: An Evolutionary Hypothesis", Science, vol. 197, pp. 493-495, 1977.

[4] B. Lewis-Evans, "Simulation Sickness and VR - What is it and what can developers and players do to reduce it?"

[5] J. J. LaViola, "A Discussion of Cybersickness in Virtual Environments", ACM SIGCHI Bulletin, vol. 32, no. 1, pp. 47-56, 2000.

[6] G. E. Riccio, T. A. Stoffregen, "An ecological theory of motion sickness and postural instability", Ecological Psychology, vol. 3, pp. 195-240, 1991.